KarpathyLLMChallenge

The content below is generated by LLMs based on the Let’s Reproduce GPT-2 video by Andrej Karpathy

Advanced Model Configuration and Training Optimization

Training large language models like GPT-2 and GPT-3 involves a complex interplay of factors including data preparation, model architecture, training dynamics, and evaluation. This article delves into the advanced techniques and best practices that can significantly enhance the performance and efficiency of training these sophisticated models.

Table of Contents

- Advanced Model Configuration and Training Optimization

- DataLoader and Model Initialization

- Optimization Loop

- Ensuring Efficient Memory Usage

- Leveraging Kernel Fusion with FlashAttention

- Hyperparameter Alignment with GPT Papers

- Hyperparameter Tuning in Line with GPT-3

- Adam Optimizer Configuration

- Explicit Epsilon Value - Gradient Clipping

- Learning Rate Schedule

- Efficient Training with Full Context Windows

- Test Set Contamination Studies

- Performance Metrics and Debugging

- Wrapping Up

- Advanced Learning Rate Scheduling

- Cosine Decay Learning Rate Schedule

- Batch Size Scaling

- Regularization with Weight Decay

- Test Set Contamination Avoidance

- Gradient Clipping

- Training Efficiency with Full Context Windows

- Implementing the Learning Rate Scheduler

- Learning Rate Scheduling in Practice

- Implementing the Learning Rate Scheduler

- Learning Rate Scheduler Visualization

- Model Specific Learning Rates

- Insights into Training GPT-3

- Efficient Sequence Packing

- Further Technical Details

- Terminal and Training Metrics

- Understanding the Learning Rate Scheduler Code

- Training Log Insights

- Dataset Examples

- Training and Debugging

- Diving Deeper into Learning Rate Scheduling

- The Learning Rate Scheduler in Detail

- Key Points to Remember

- Model Training and Optimization

- Insights from the Training Loop

- Tackling Training Data Quality

- Elaborating on GPT-3 Training Details

- Understanding Batch Size Ramp-Up

- Sampling Data Without Replacement

- Implementing Weight Decay

- Training Loop Enhancements

- GPT-3 Training Strategies

- Fine-Tuning the Weight Decay Parameter

- Leveraging Fused AdamW for Performance

- Kernel Fusion Optimization

- Conclusion and Next Steps

- Refining the

configure_optimizersMethod - Performance Improvements with Fused AdamW

- Emphasizing the Importance of Weight Decay Selection

- Learning Rate Scheduling and Optimization

- Advanced Optimizer Configurations

- Evaluation of GPT-3 on NLP Tasks

- Model Size and Learning Rate Adaptations

- Gradient Accumulation: A Solution for Limited Resources

- Implementing Gradient Accumulation in the Training Loop

- Model Architectures Across Different Scales

- Hyperparameters Across Model Sizes

- Conclusion

- Customizing the Learning Rate Schedule

- Optimizer Configuration and Learning Rate Application

- Understanding Gradient Accumulation Mechanism

- The Impact of Reduction on Loss Calculation

- Fine-tuning the Learning Rate Function

- Implementing and Applying the Learning Rate Schedule

- Gradient Accumulation and Loss Normalization

- Real-time Training Metrics Output

- Adjusting Loss Calculation for Gradient Accumulation

- Correcting Gradient Values with Loss Scaling

- Detailed Example of Loss Scaling with Gradient Accumulation

- Implementing a GPT Model Class

- Loss Calculation with Cross Entropy

- Learning Rate Scheduling

- Scaling Loss for Gradient Accumulation

- Detailed Loss Calculation with Gradient Accumulation

- Training Loop with Scaled Loss and Learning Rate Scheduling

- Real-time Performance Metrics

- Accumulating Loss for Gradient Descent

- Understanding Loss Calculation with Gradient Accumulation

- Real-time Training Metrics

- Gradient Scaling and Accumulation

- Real-time Training Metrics and Performance

- Ensuring Accuracy with Batch Size and Gradient Accumulation Steps

- Selecting the Right Device for Training

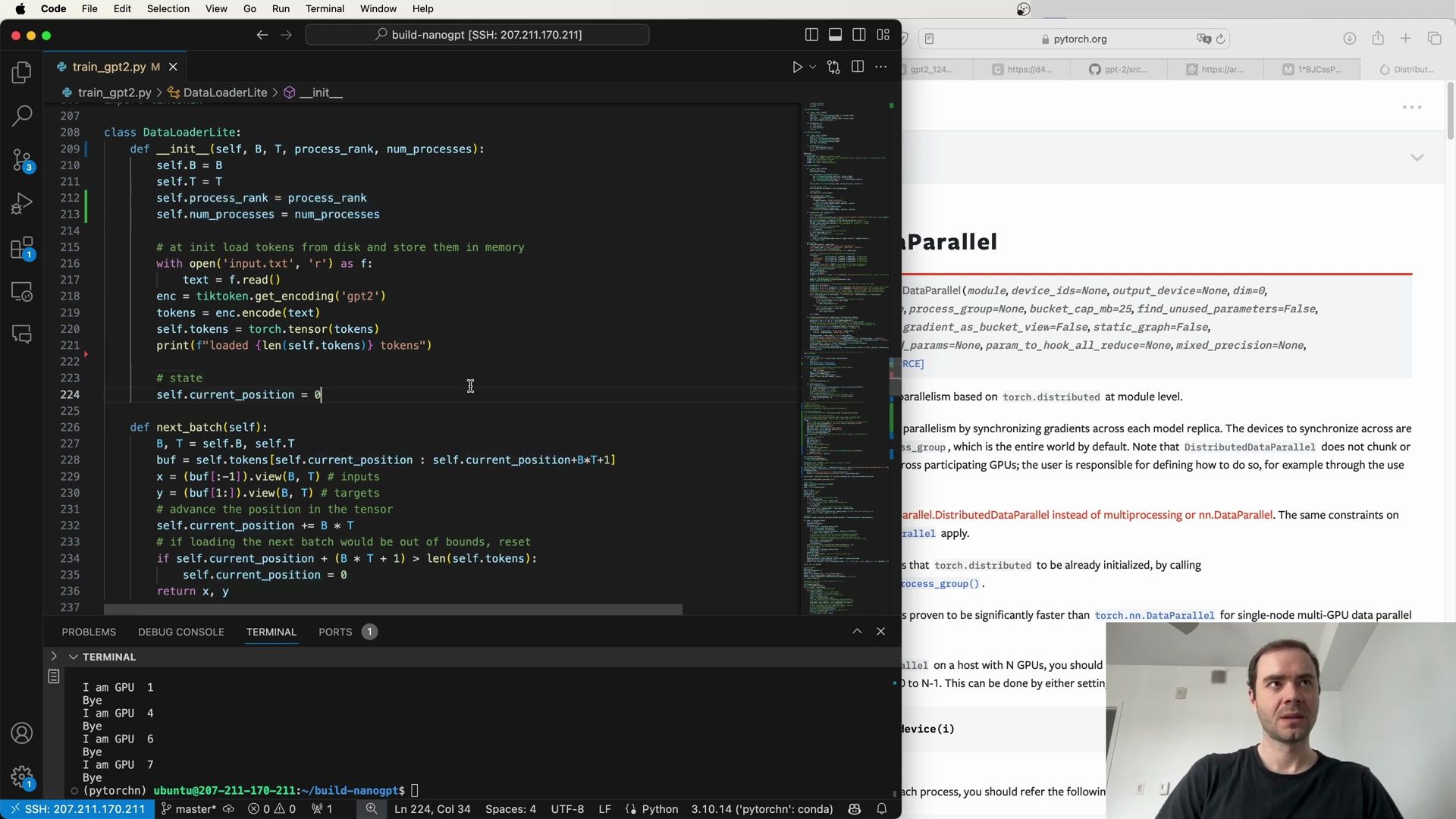

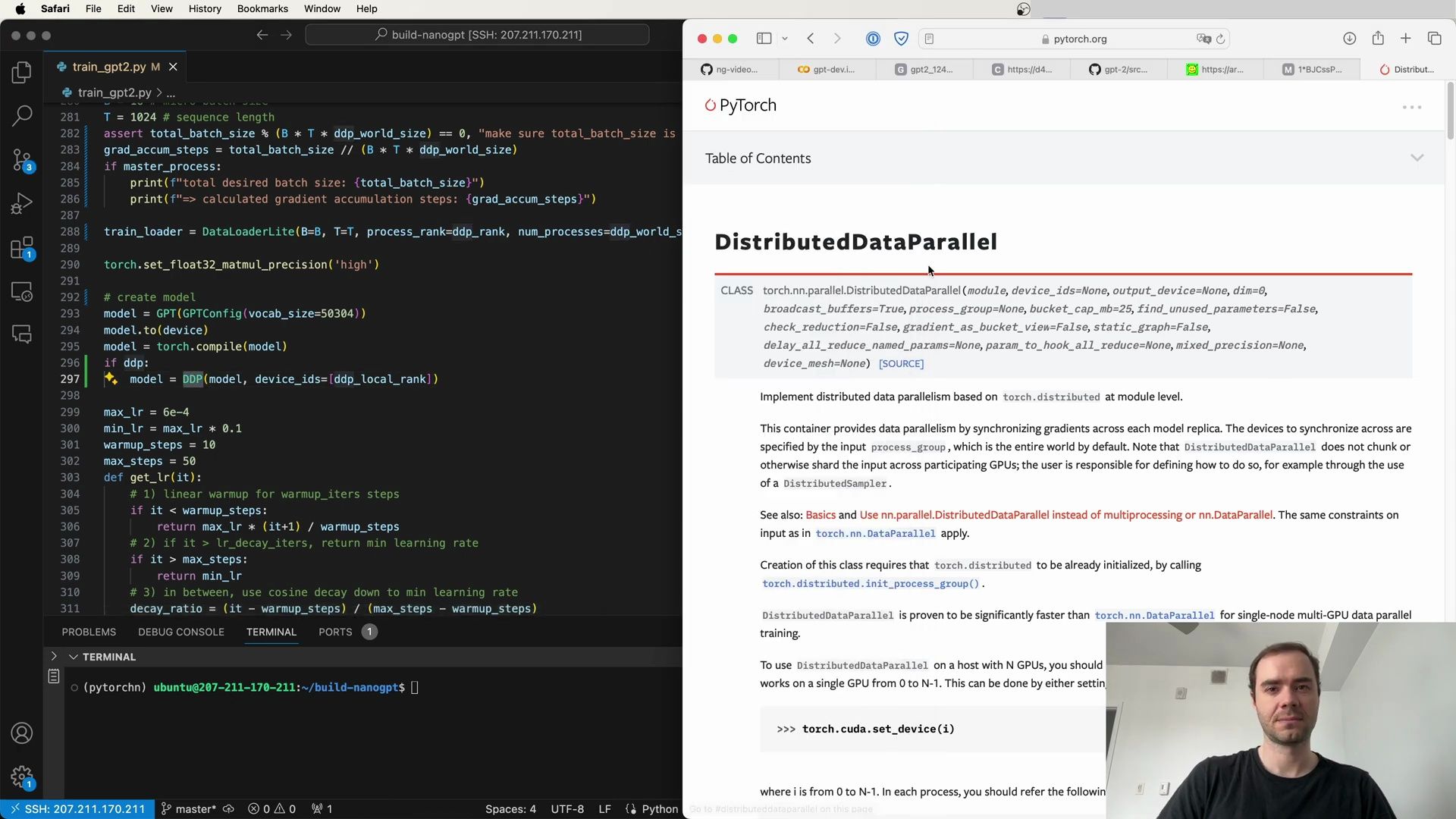

- Distributed Training with PyTorch

- Monitoring GPU Utilization

- Putting GPUs to Work with Distributed Data Parallel

- Understanding DistributedDataParallel

- Setting Up Distributed Training

- Real-time GPU Monitoring

- Collaborative Gradient Computation

- Initiating Distributed Training

- Detecting Distributed Training Environment

- DataLoader Adjustments for Distributed Training

- Coordinating Distributed Processes

- Master Process in Distributed Training

- Handling Non-DDP Runs

- Initializing the Training Loop

- Distributed Training Configuration

- Handling Device Autodetection

- Gradicum Steps Adjustment

- Model Compilation and Logging

- DataLoader Initialization

- Handling Output in Distributed Systems

- Master Process and Device Autodetection

- Launching Training With Distributed Data Parallel

- DataParallel Configuration

- Training Loop and Device Handling

- Seed Setting and Batch Size Configuration

- Output Management in a Multi-GPU Setup

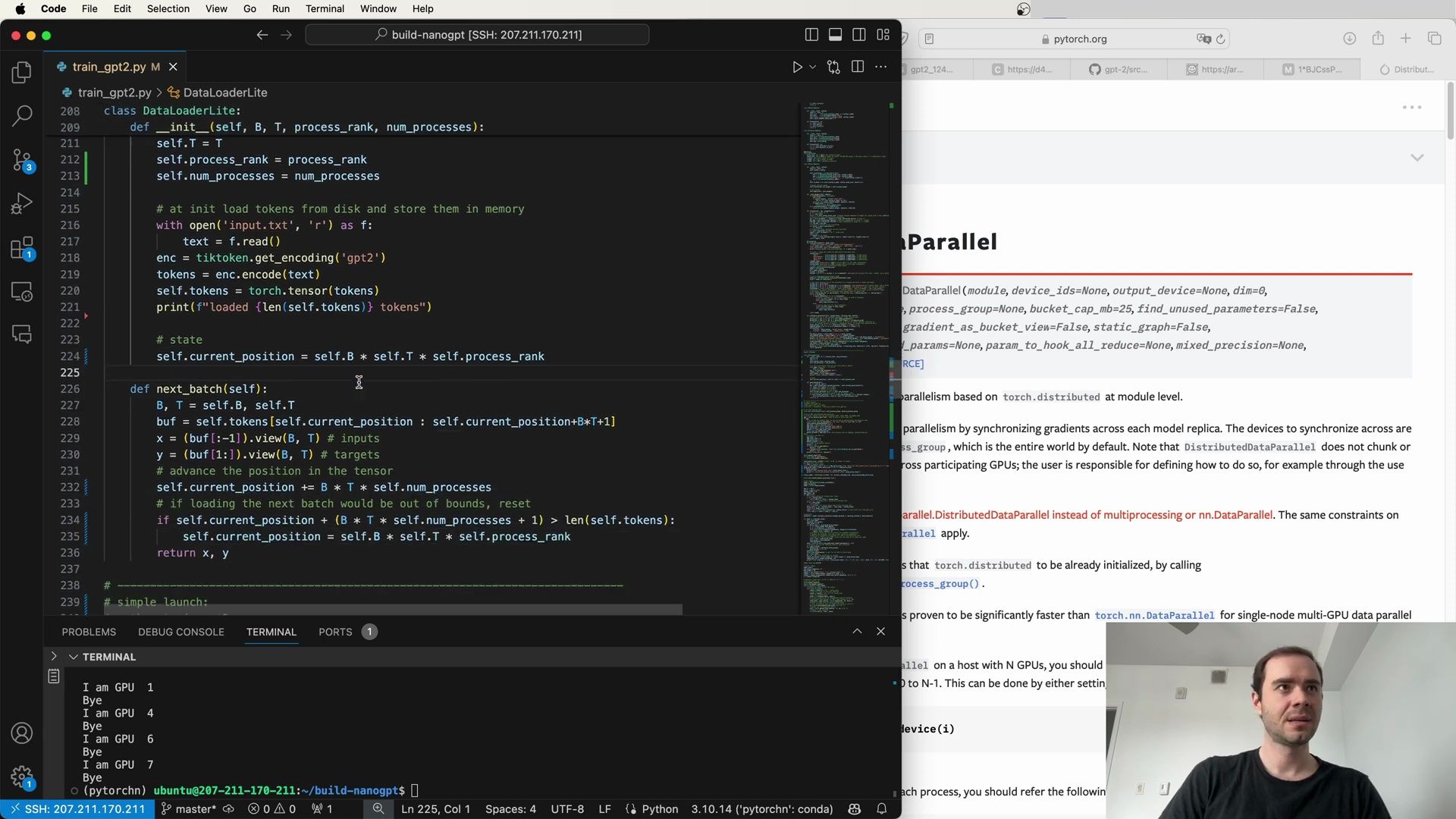

- DataLoaderLite Example

- Advanced Model Parallelism with DistributedDataParallel

- Final Remarks on DDP Training

- Cleanup Procedures in Distributed Training

- DataParallel Extended Configuration

- DataLoaderLite Implementation

- Advanced DistributedDataParallel Configuration

- Striding Across Processes with DataLoaderLite

- Distributed DataLoader Implementation

- Device and Model Initialization

- Model Compilation and Learning Rate Scheduling

- Parallelism and DistributedDataParallel Configuration

- Setting Up the Training Loop

- Optimizing the Training Process

- Model Compilation and Learning Rate Adjustment

- Distributed Training Efficiency

- Advanced DDP Features

- Synchronizing Across Multiple GPUs

- Backend Initialization and Process Spawning

- Understanding DistributedDataParallel in Depth

- Optimizing Batch Sizes for DDP

- Diving Deeper into the DDP Mechanism

- DataLoader and Training Loop Adjustments for DDP

- Synchronizing Gradients with Gradient Accumulation

- Cautionary Note on DistributedDataParallel

- Detailed Parameters for DistributedDataParallel

- Advanced Gradient Management with DDP

- Disabling Gradient Synchronization

- Communication Hooks in DDP

- Gradient Division by World Size

- Monitoring Training Performance

- Problems and Debugging

- Granular Control of Gradient Synchronization

- Dynamic Gradient Synchronization

- Direct Toggling of Backward Synchronization

- Fine-Tuning the Backward Pass

- Handling Gradient Averaging

- Distributed Averaging of Loss

- Gradient Accumulation and Averaging

- Scaling Loss for Gradient Accumulation

- Synchronization of Gradients

- GPT Neural Network Architecture

- DataLoader Simplification

- Learning Rate Scheduling

- Handling Gradient Accumulation

- Distributed Data Parallel (DDP) Considerations

- Gradient Clipping and Learning Rate Adjustment

- Synchronizing and Measuring Performance

- Learning Rate Scheduler

- Final Debugging and Launching

- Optimizing the Learning Rate Schedule

- Distributed Training Considerations

- Fine-Tuning the Optimization Loop

- Adjusting for DDP Model Configuration

- Configuring Distributed Training

- Adjusting Learning Rate and Model Parameters

- Output During Training - Optimizer Configuration in DDP

- DataLoader and Gradient Accumulation

- Debugging and Problem Solving

- Enhancing the DataLoader for Effective Batching

- Terminal Output for Monitoring Training Progress

- Implementing Gradient Accumulation with

no_sync - Device Auto-detection and DDP Configuration

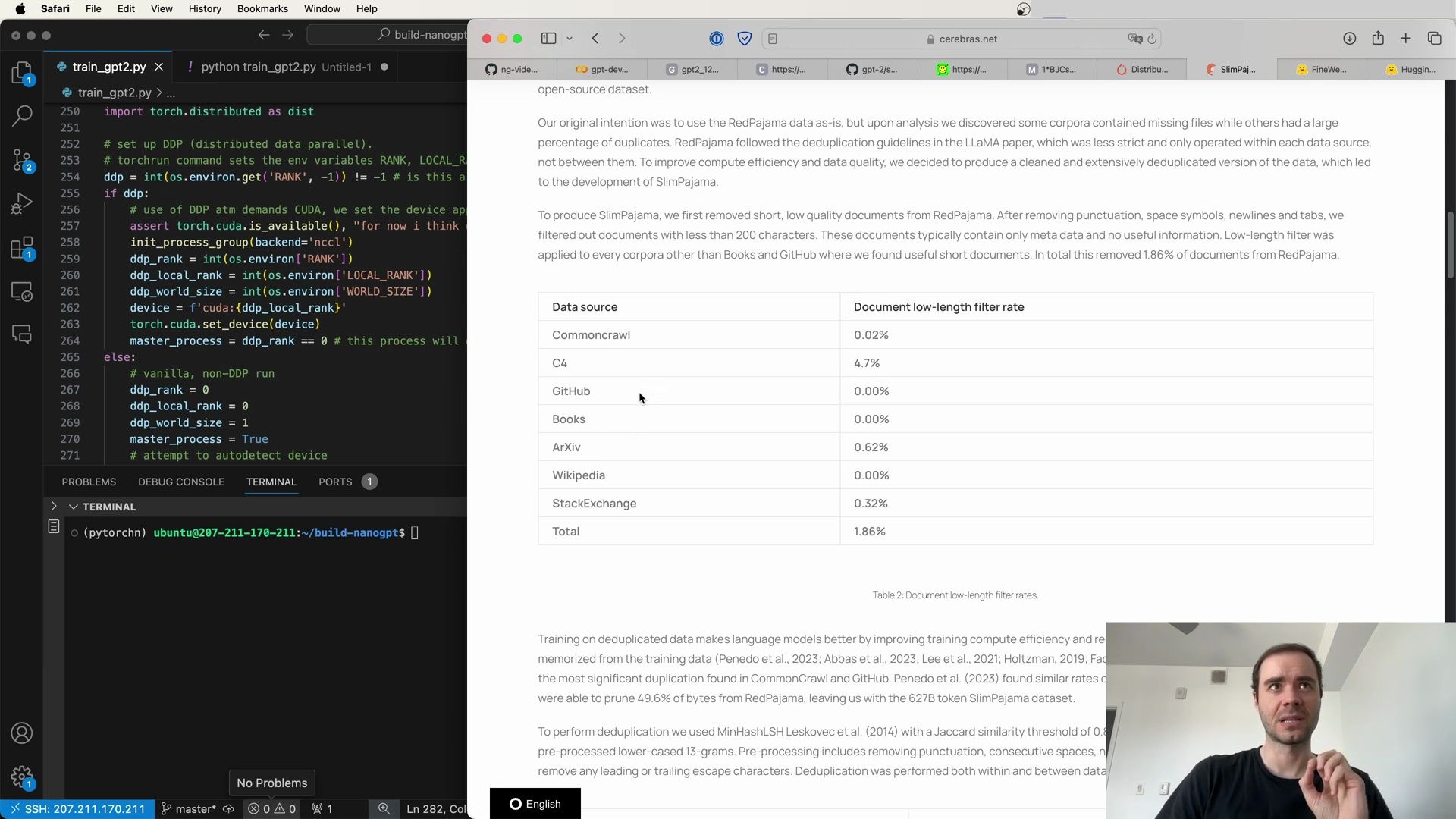

- Dataset Considerations for GPT-2 and GPT-3 Training

- Deduplication and Data Cleaning

- Dataset Diversity and Quality in LLM Training

- Fine-Tuning Dataset Composition - The Creation of SlimPajama Dataset

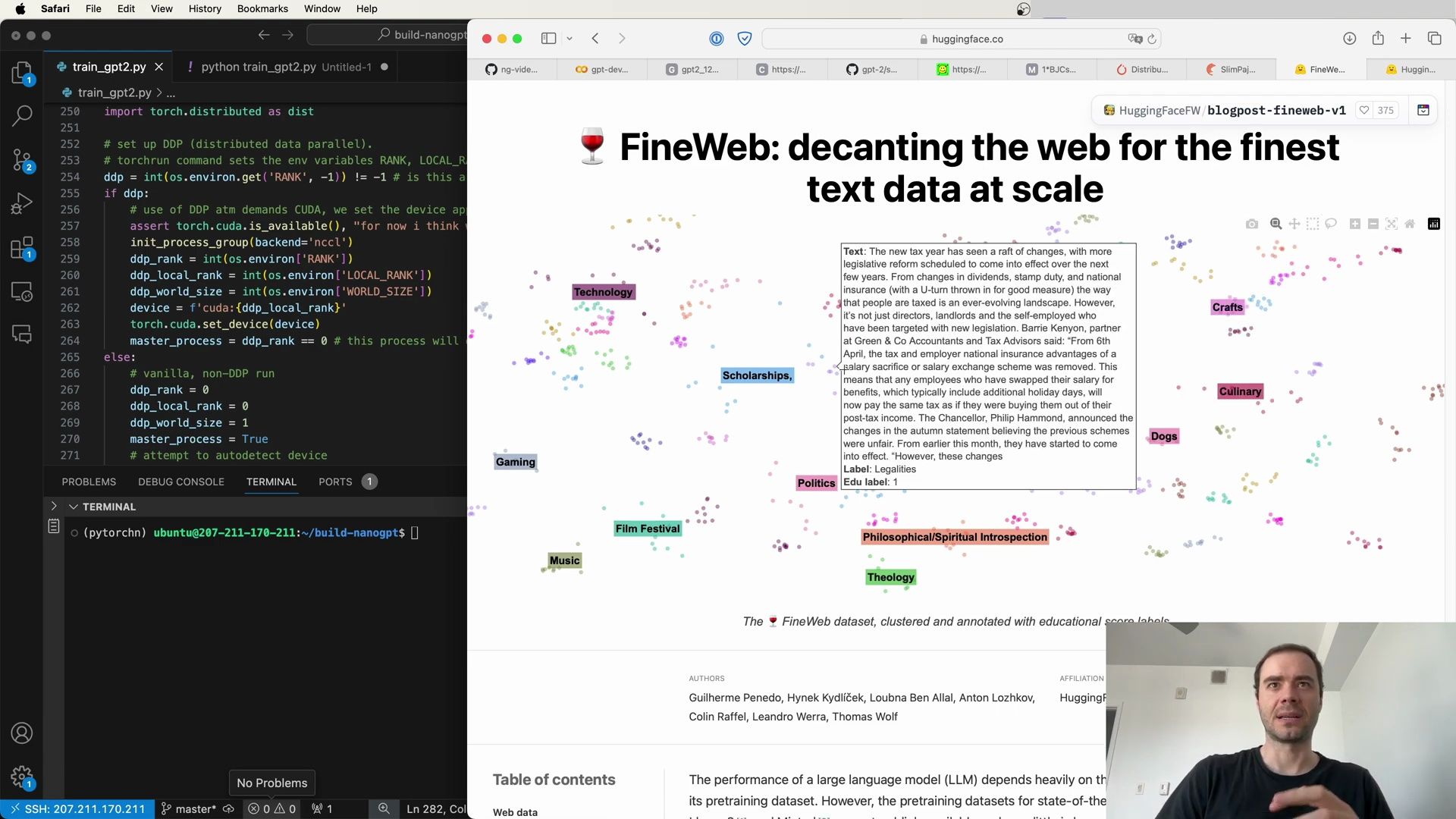

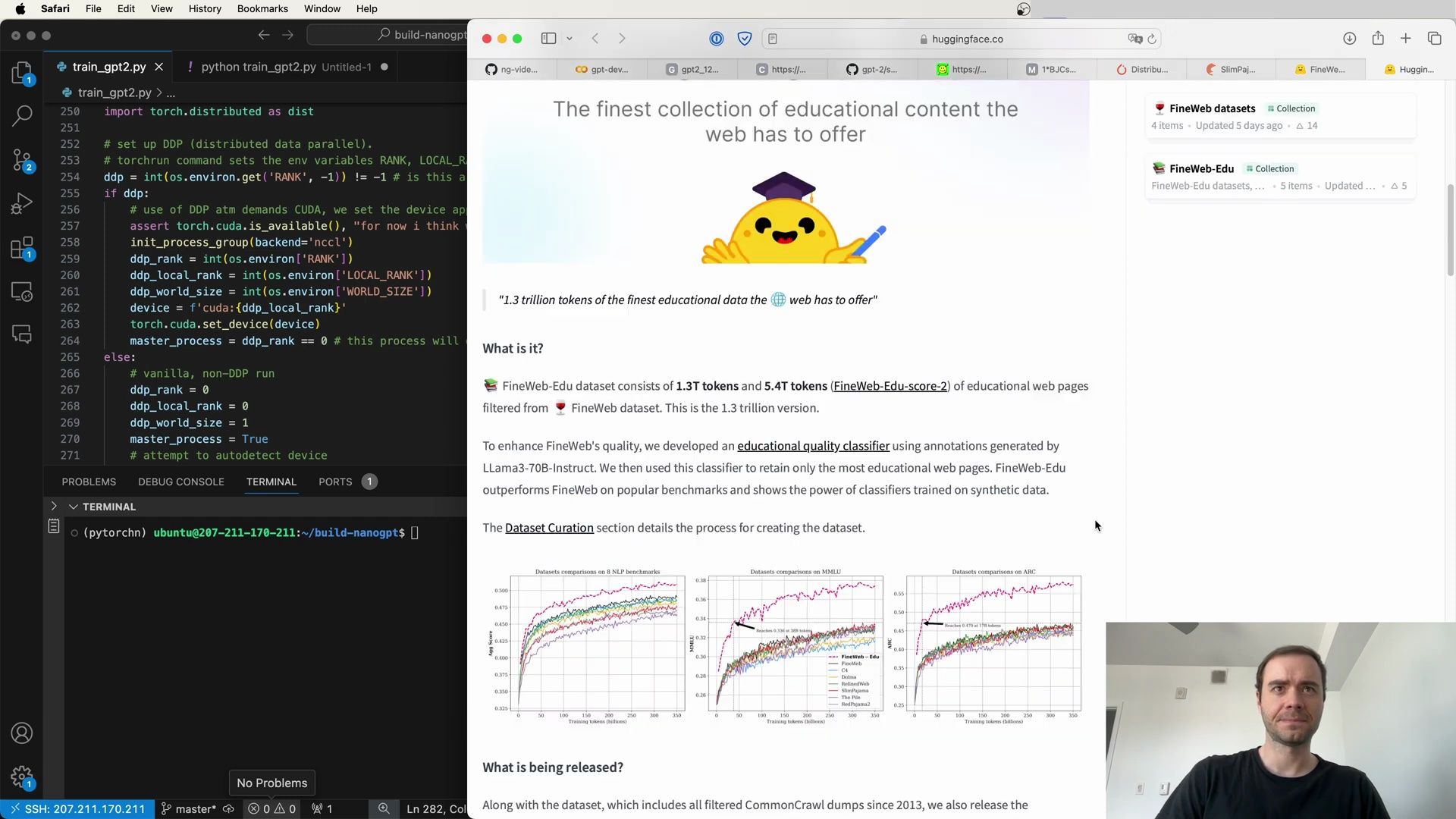

- Steps to Enhance Data Quality - Introducing FineWeb Dataset

- FineWeb’s Contributions

- The FineWeb-Edu Subset - Distributed Data Parallel (DDP) Configuration

- Processing Web Data at Scale

- Harnessing Educational Content for LLM Training

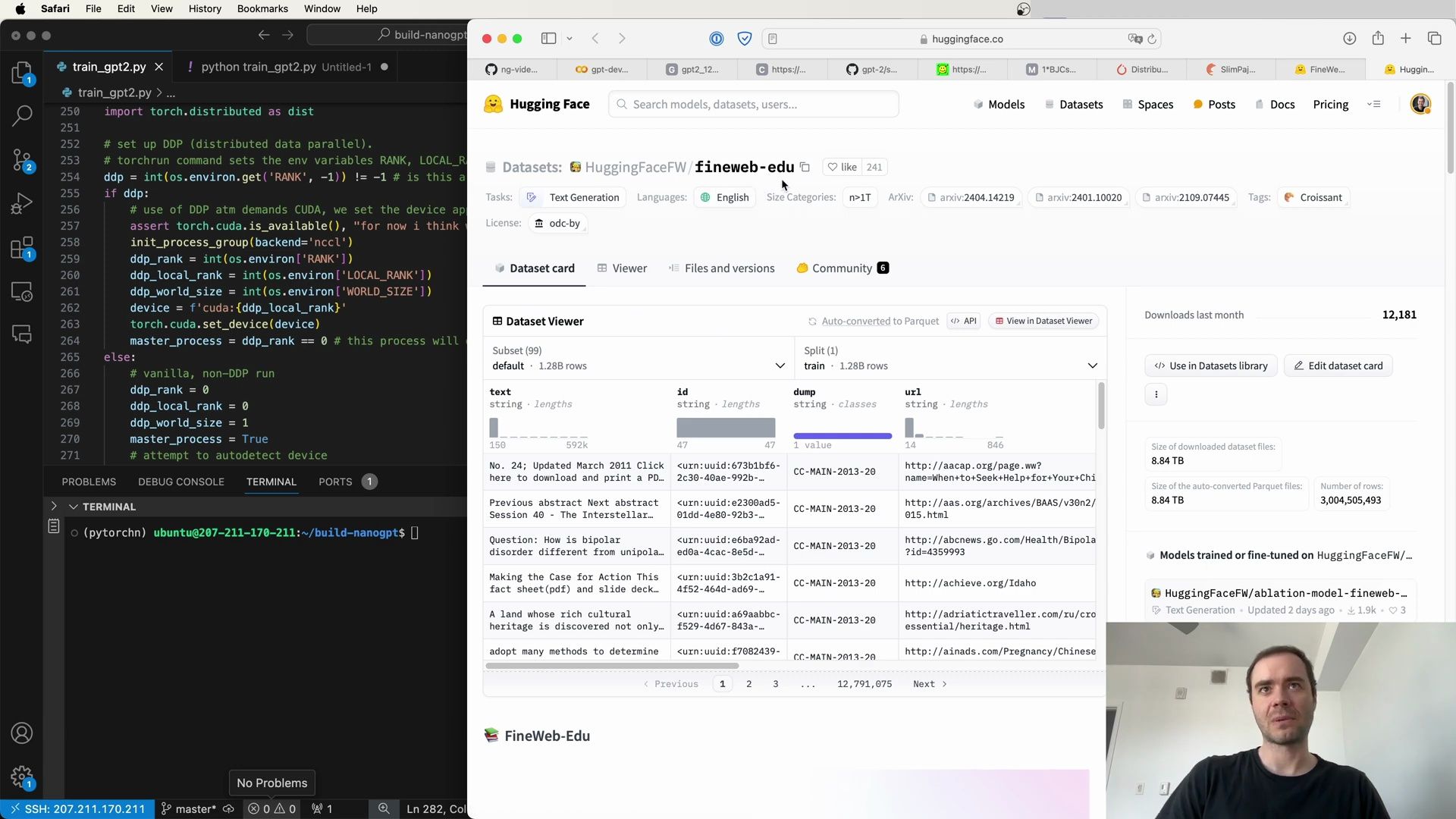

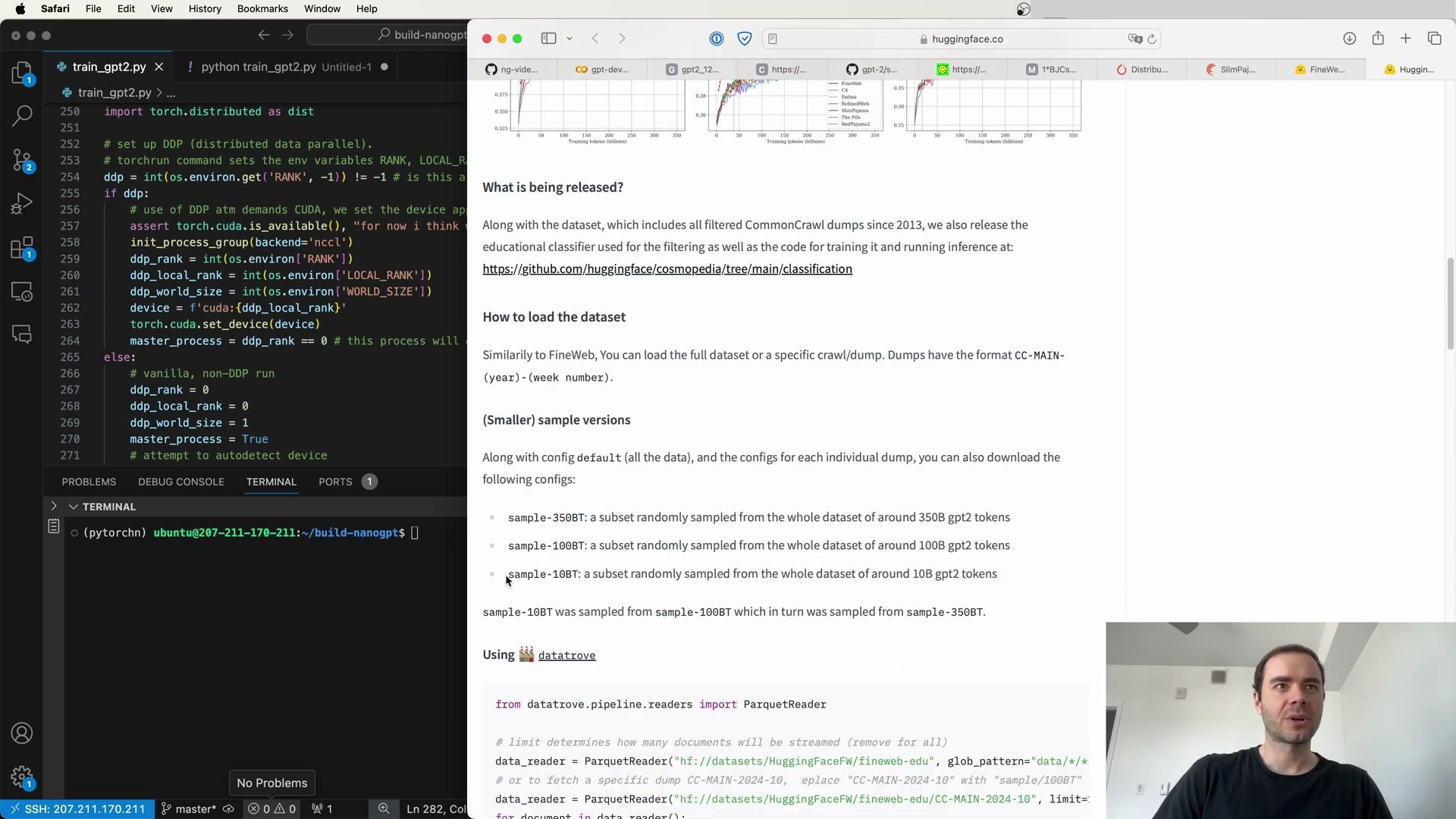

- Sampling the FineWeb-Edu Dataset

- Accessing and Utilizing the Data

- Distributed Data Parallel Setup

- Data Processing with Datatrove

- Simplified Data Access and Tokenization

- Filtering Content with LLMs

- Running the Tokenization Script

- Tokenization Process Explained

- Multiprocessing for Efficient Tokenization

- Shard Management

- Tokenization and Sharding Process

- Continued Training Preparation

- Loading Tokens and Initializing Data Loaders

- Managing Shards and Batches

- Configuring the GPT-2 Model

- Initializing the Training Process

- Progress Through Training

- Batch Size and Sequence Length Configuration

- Advanced Training Techniques in GPT Models

- Setting Up the Training Environment

- Learning Rate Scheduling - Calculating Steps for Token Processing

- Quality and Deduplication in Data Preparation

- Model Training Details

- Optimizing the Learning Rate

- Batch Size Configuration

- Device Configuration and Seed Setting

- Distributed Training Setup

- Training Loop Execution

- Launching the Training Script

- Monitoring Training Progress

- Training Metrics

- Batch Size Revisited

- Creating and Configuring the GPT Model

- Learning Rate Schedule

- Training Output Analysis

- Training Considerations

- Enhancing Precision and Model Creation

- Dynamic Learning Rate Adjustment

- Monitoring Training Progress with Live Metrics

- Validating Model on a Validation Set

- Setting up the Device for Training

- Data Loader for Efficient Data Handling

- Model Optimization Configuration

- Model Evaluation and Sampling

- Continuous Validation and Model Saving

- Generating Model Samples During Training

- Printing the Generated Text

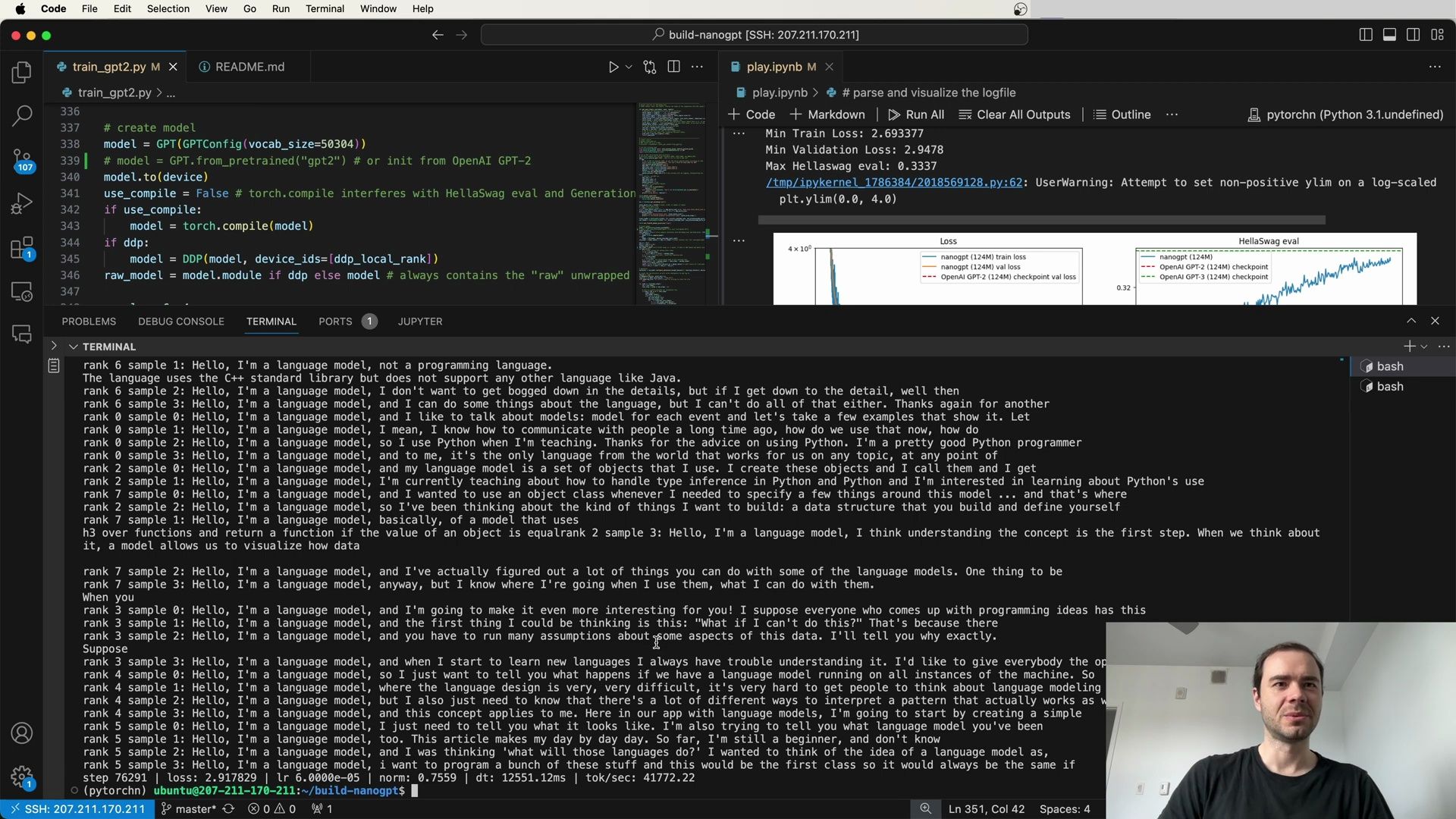

- Generated Text Samples

- Examples of Generated Samples

- Training Loop and Sampling Integration

- Sample Generation with Top-K Sampling

- Managing the Random Number Generator (RNG) State

- Continuous Validation and Saving Best Model

- Handling Distributed Training

- Documenting Model Changes and Fixes

- Adjusting the Tokenization Length

- Handling Validation Loss Across Distributed Systems

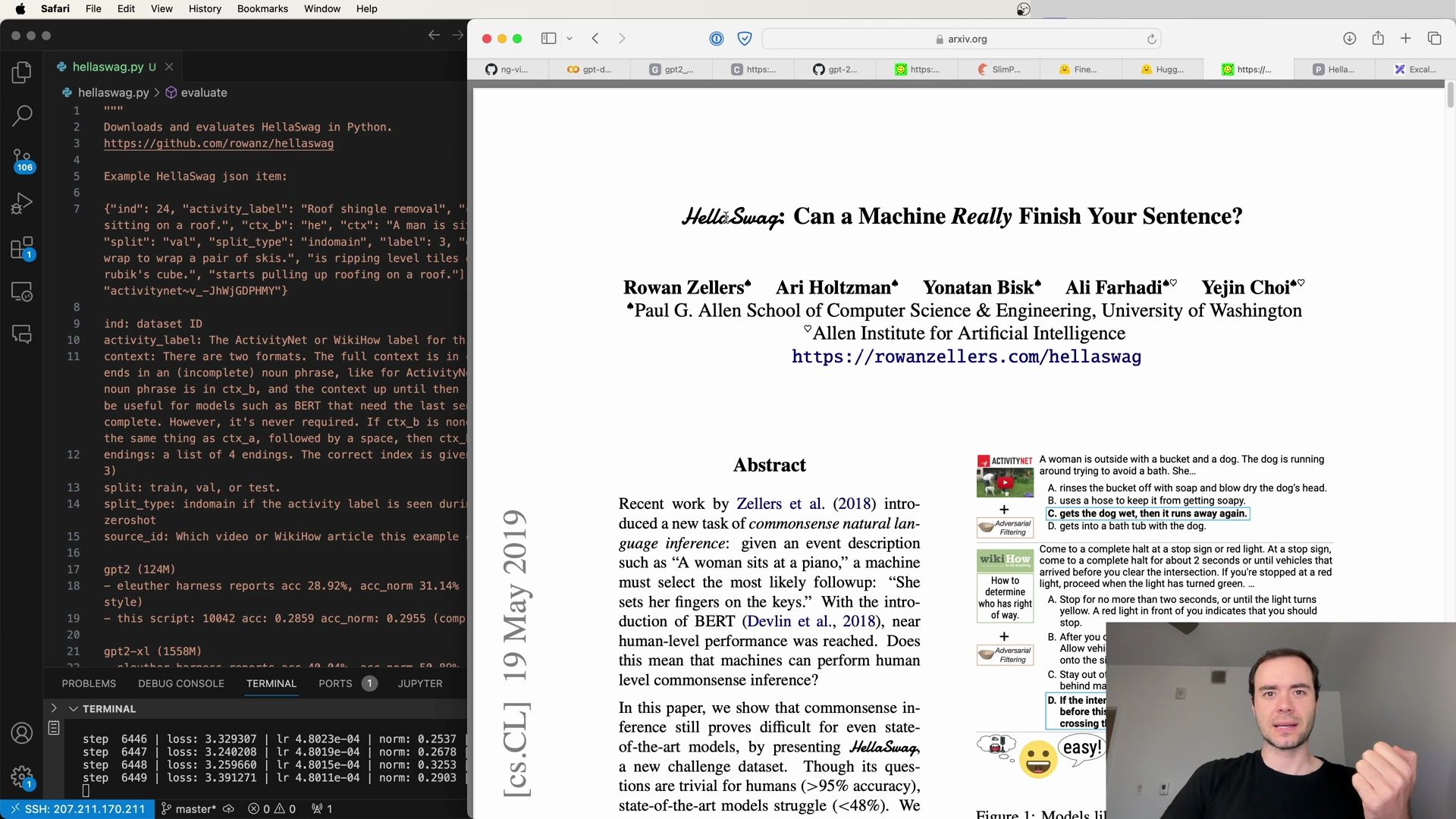

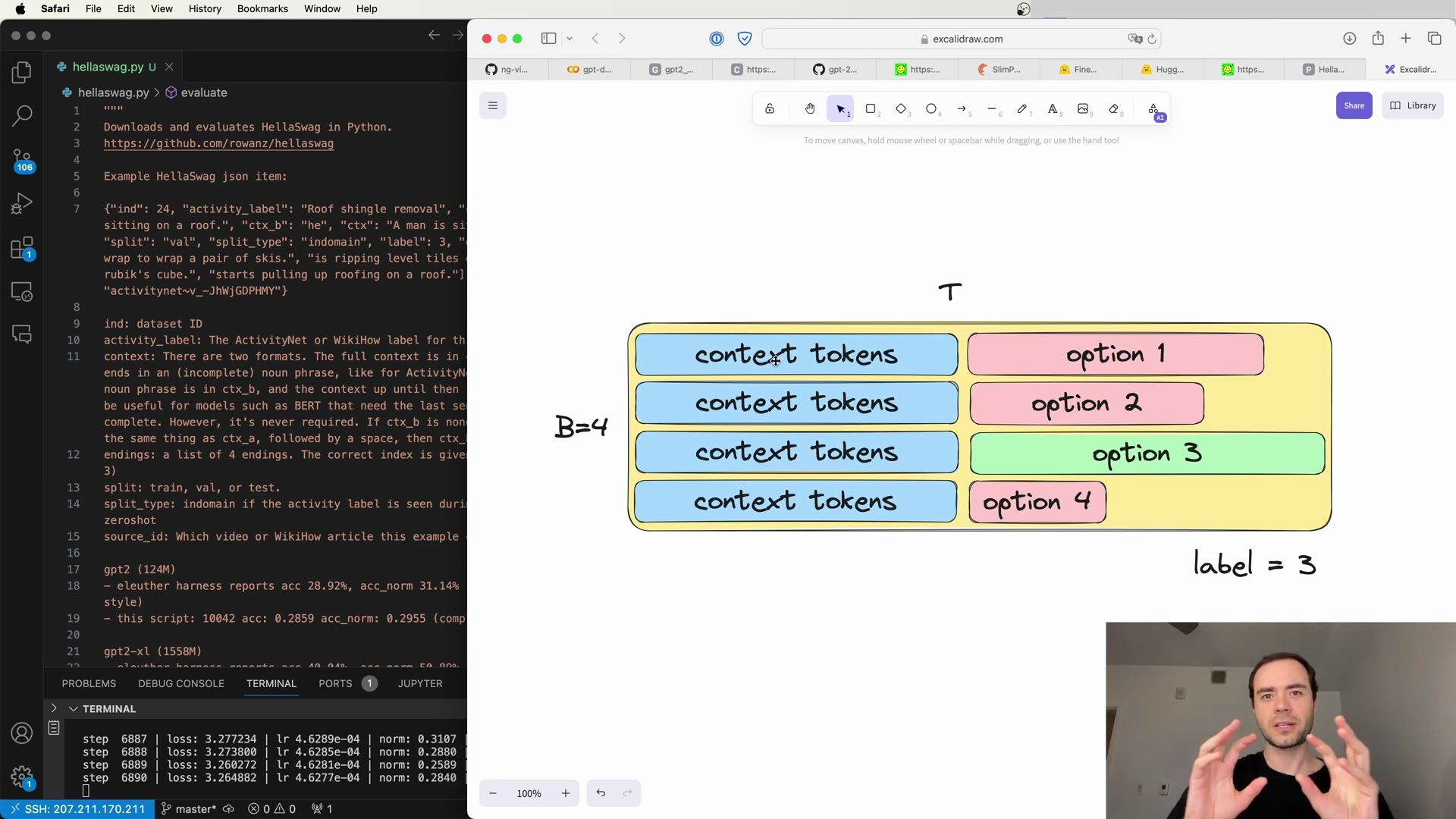

- Introduction to HellaSwag Evaluation

- HellaSwag’s Sentence Completion Task

- Overview of HellaSwag and Adversarial Filtering

- Evaluating Models with HellaSwag

- Implementation of HellaSwag Evaluation

- HellaSwag Token Completion Methodology

- Implementing HellaSwag Evaluation in Python

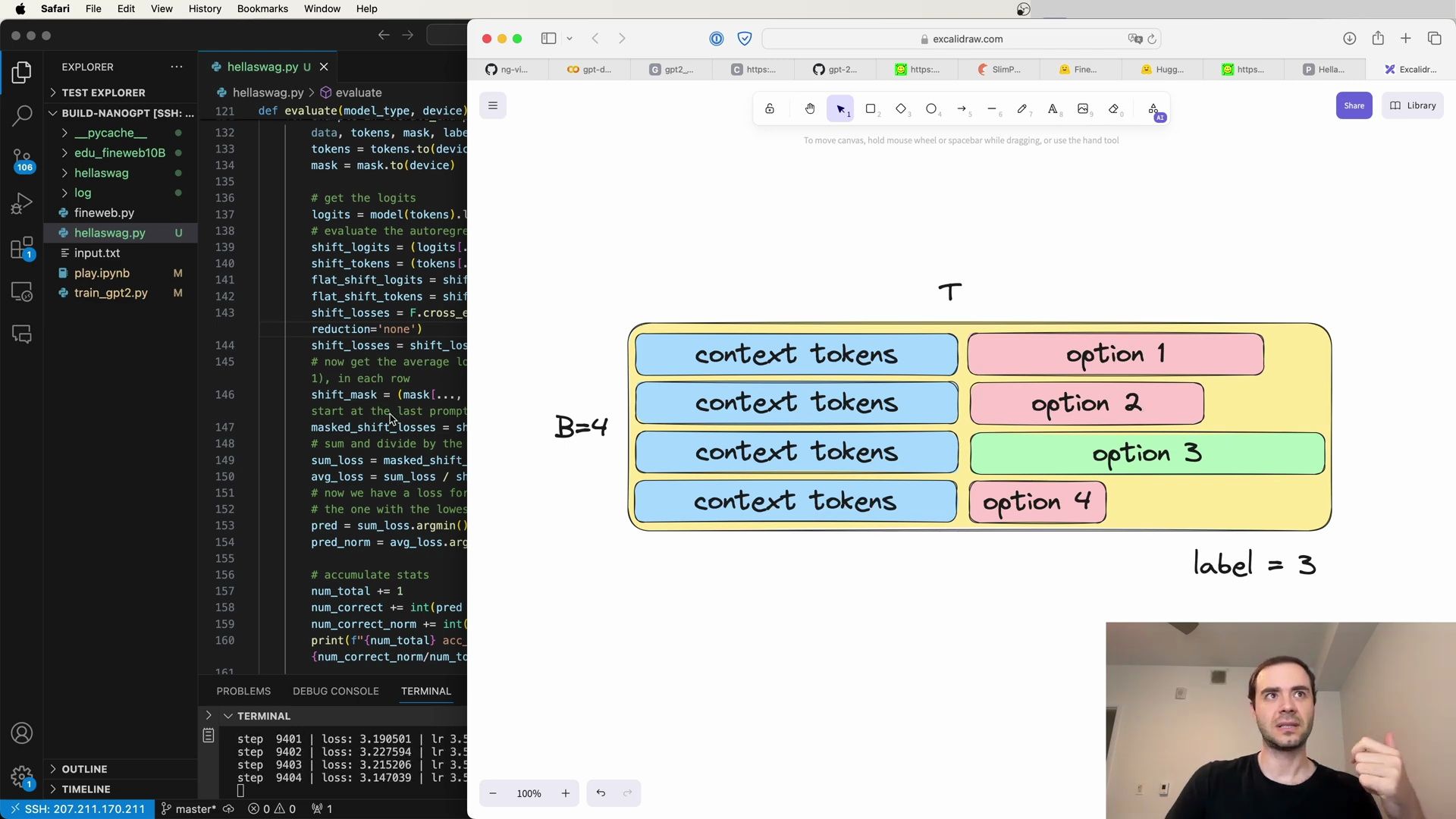

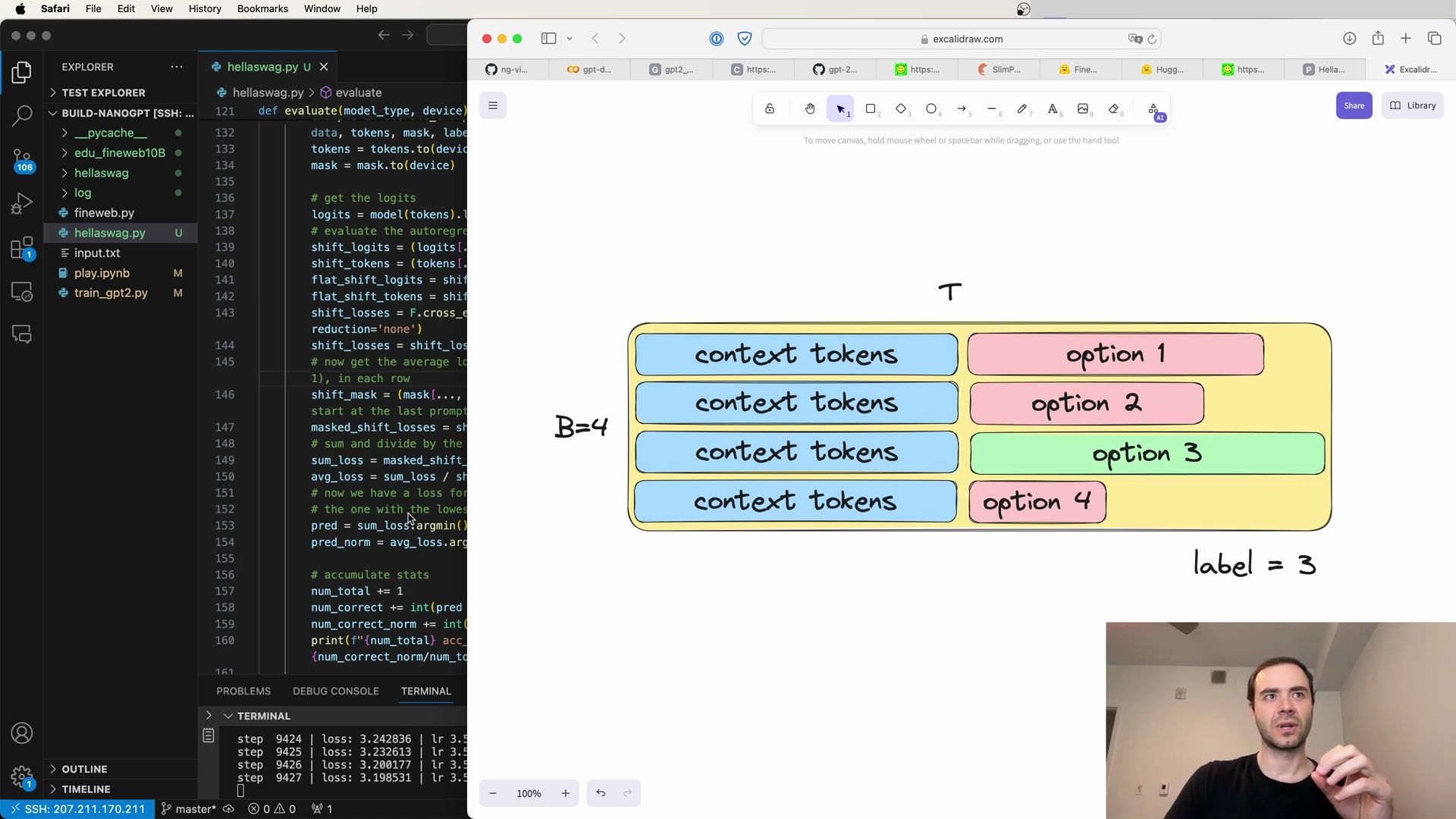

- Tracking Model Performance on HellaSwag

- Detailed Evaluation Process

- Continuous Learning and Optimization

- Cross-Entropy Loss in Model Evaluation

- Evaluating Model Predictions

- Performance Metrics

- ElutherAI Harness

- Integrating Periodic Evaluation

- Changes to Training Script

- Debugging Training Issues

- Learning Rate Scheduling

- Logging and Validation

- Sampling from the Model

- Optimization Step

- Fine-Tuning the Optimization Step

- HLSWag Evaluation

- Tracking Correct Predictions

- Synchronizing Statistics Across Processes

- Logging and Sample Generation

- Sample Generation Insights

- Log Directory Setup

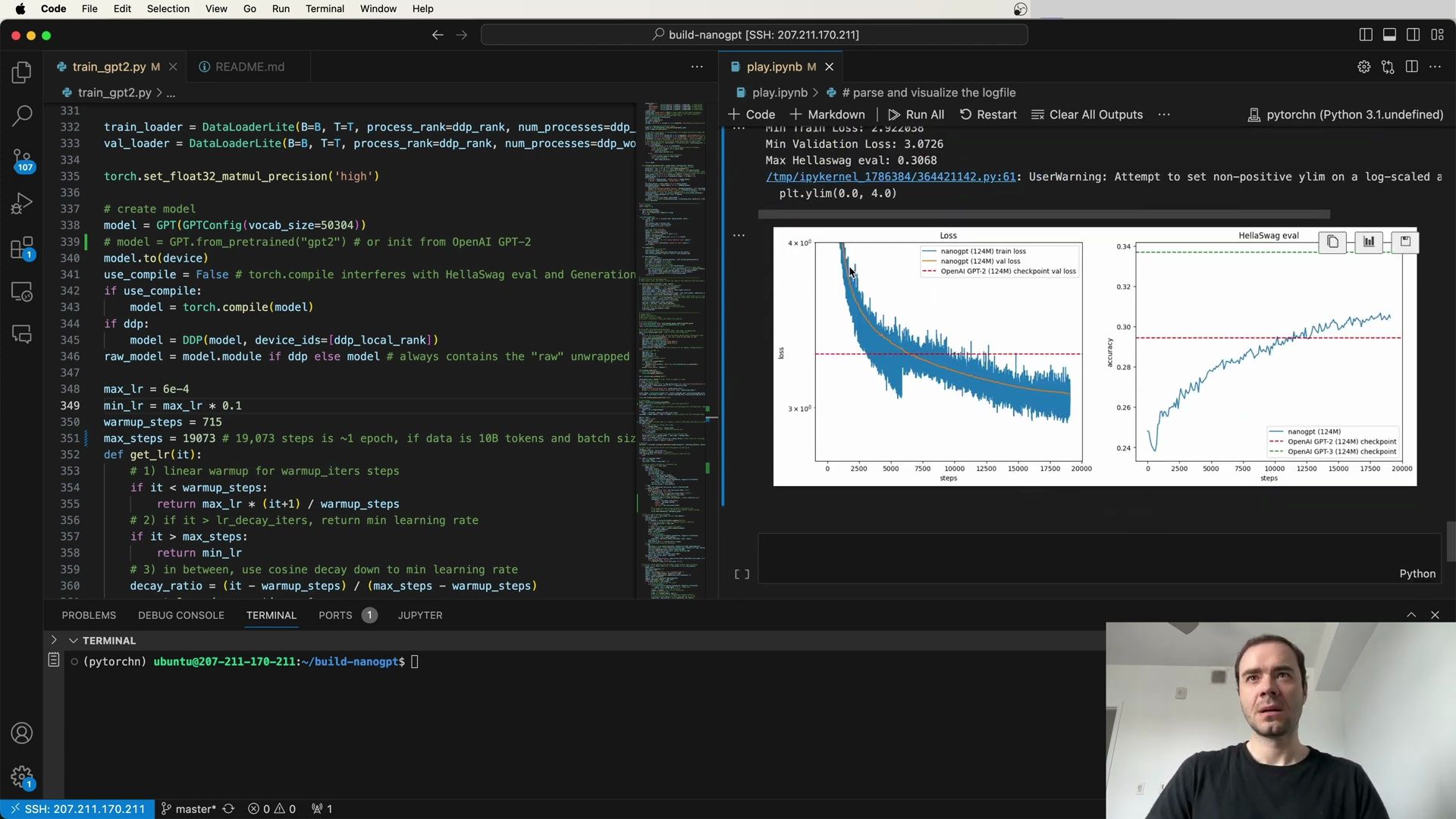

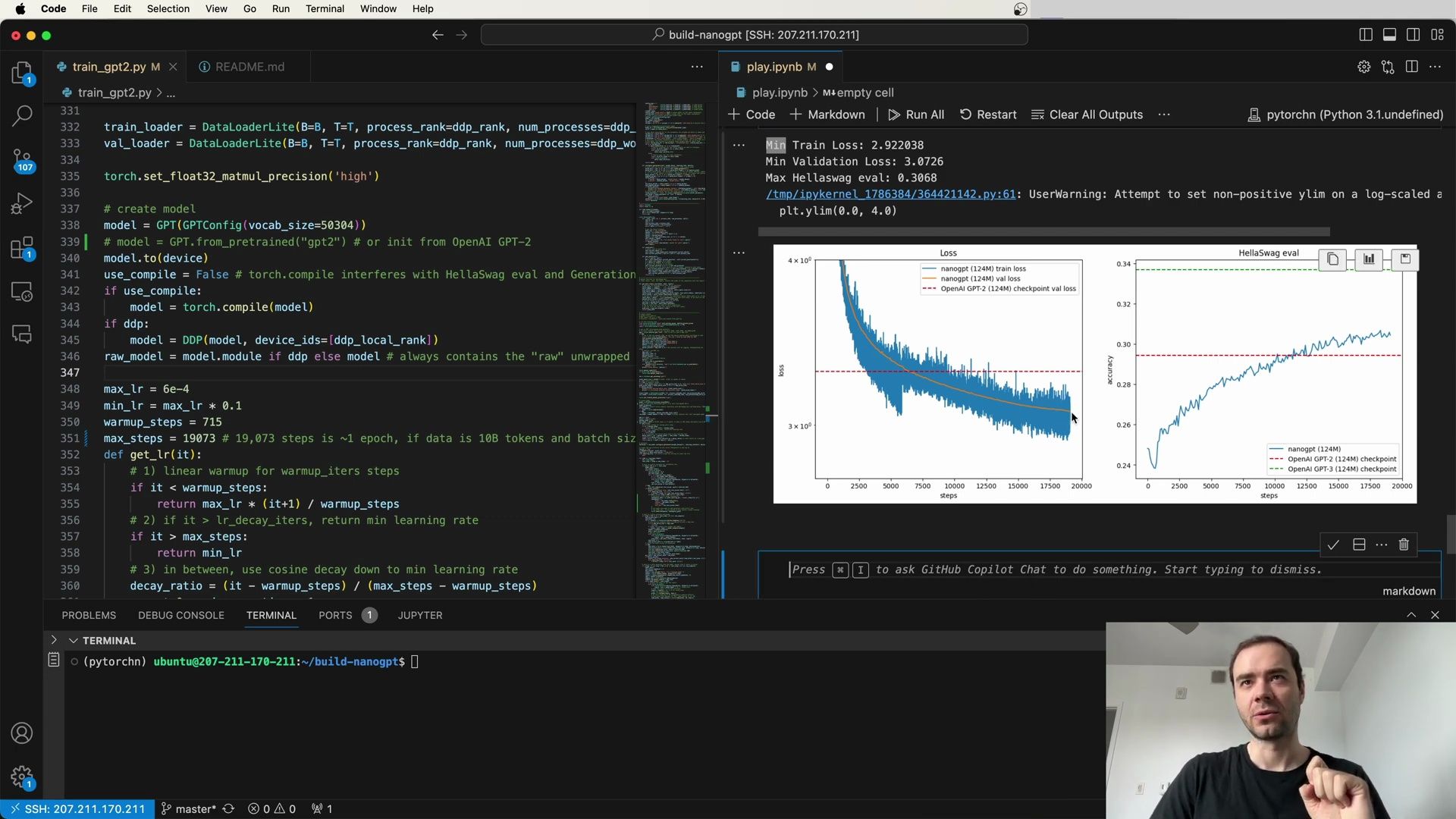

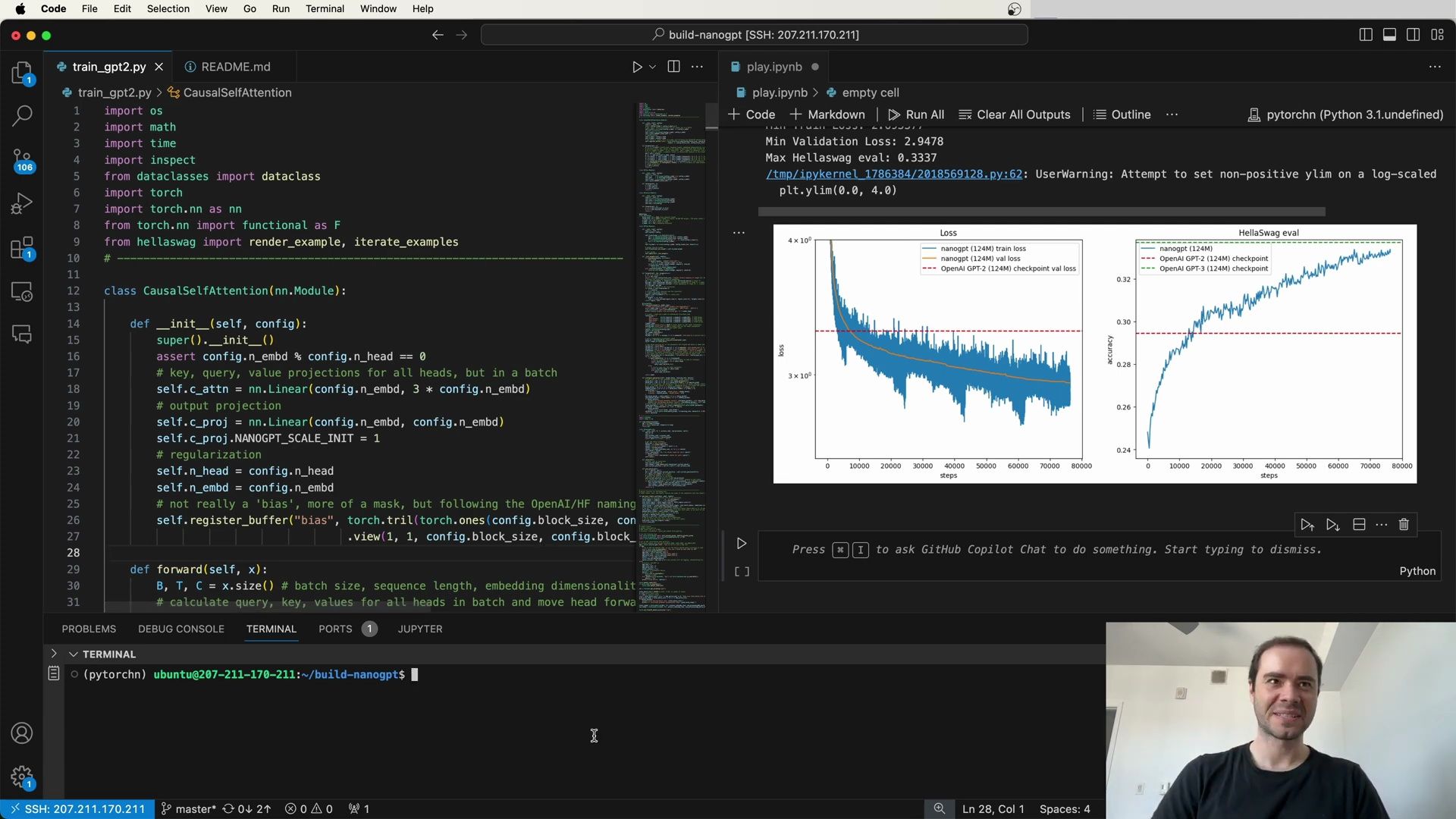

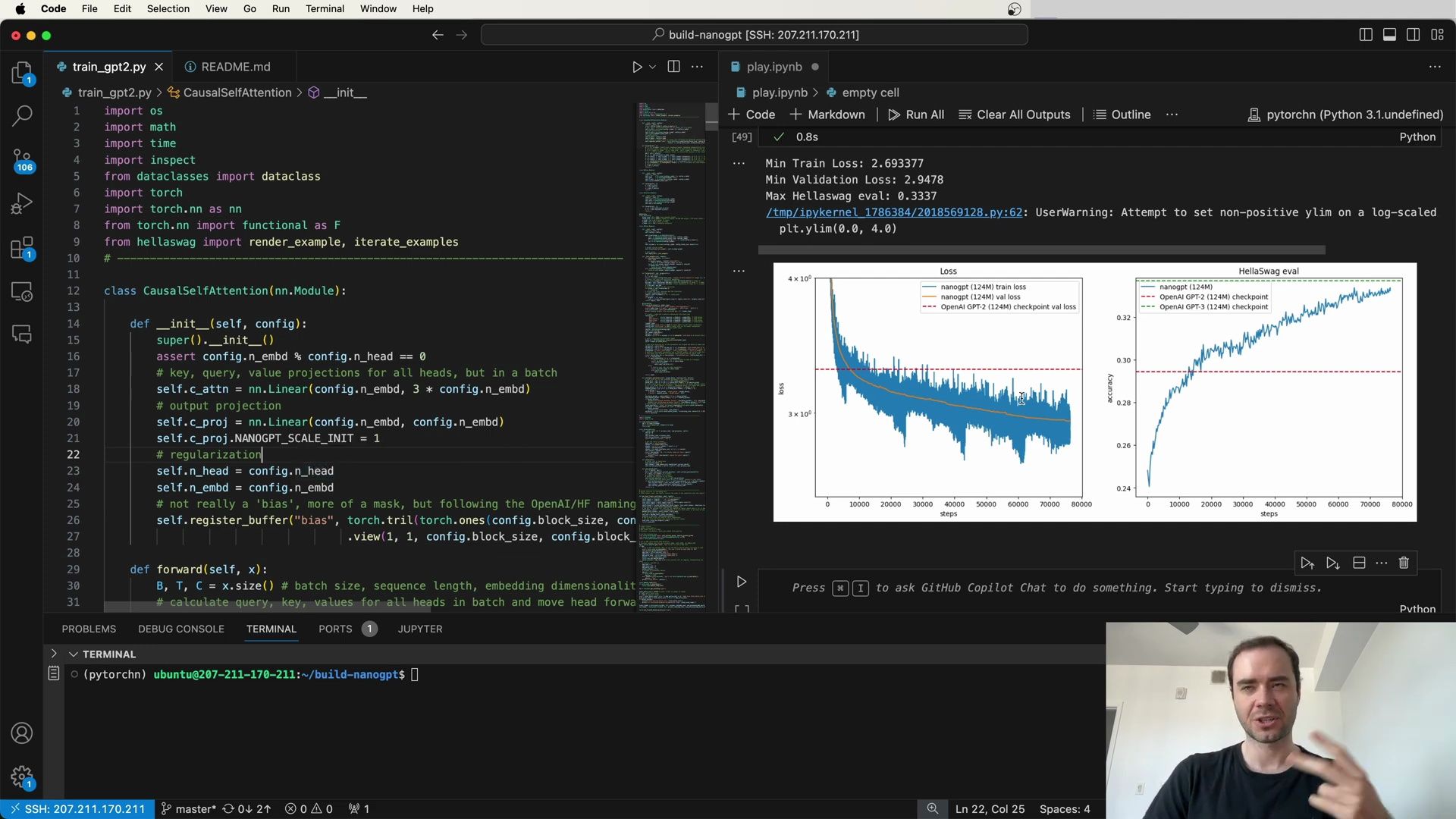

- Visualization of Training Progress

- Model Configuration and Initialization

- Training Loop Adjustments

- HellaSwag Evaluation Outcomes

- Considerations on Data Ordering

- Training Data Considerations

- DataLoaderLite Implementation

- Data Ordering and Shuffling

- Training Batch Size Configuration

- Learning Efficiency Insights

- Considerations for Hyperparameters Adjustment

- Setting Up the Training Environment

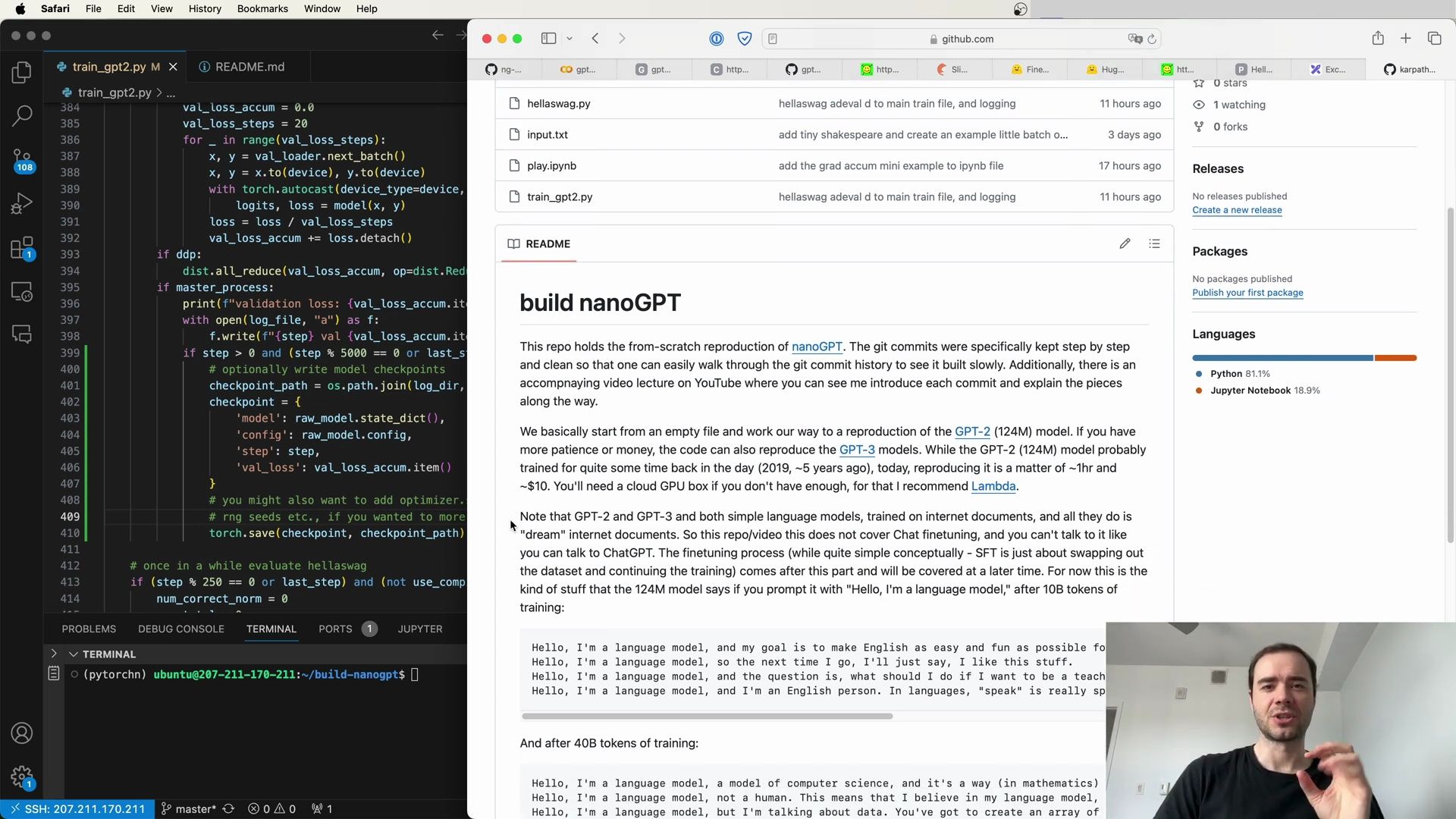

- Visualization of Extended Training Results

- Sequence Length Adjustment for Model Training

- Loss Metrics and Comparison with Other Models

- DataLoaderLite and Training Process

- Model Creation and Configuration

- Sample Generation Insights

- Validation Loss Evaluation

- Master Process Validation Loss Reporting

- Visualization of Training and Evaluation Metrics

- Checkpointing and State Management

- Enhanced Evaluation with Luther

- Language Model Evaluation Harness

- Comparison With Other Models

- Pre-training and Fine-tuning

- Continuing Pre-training and Checkpointing

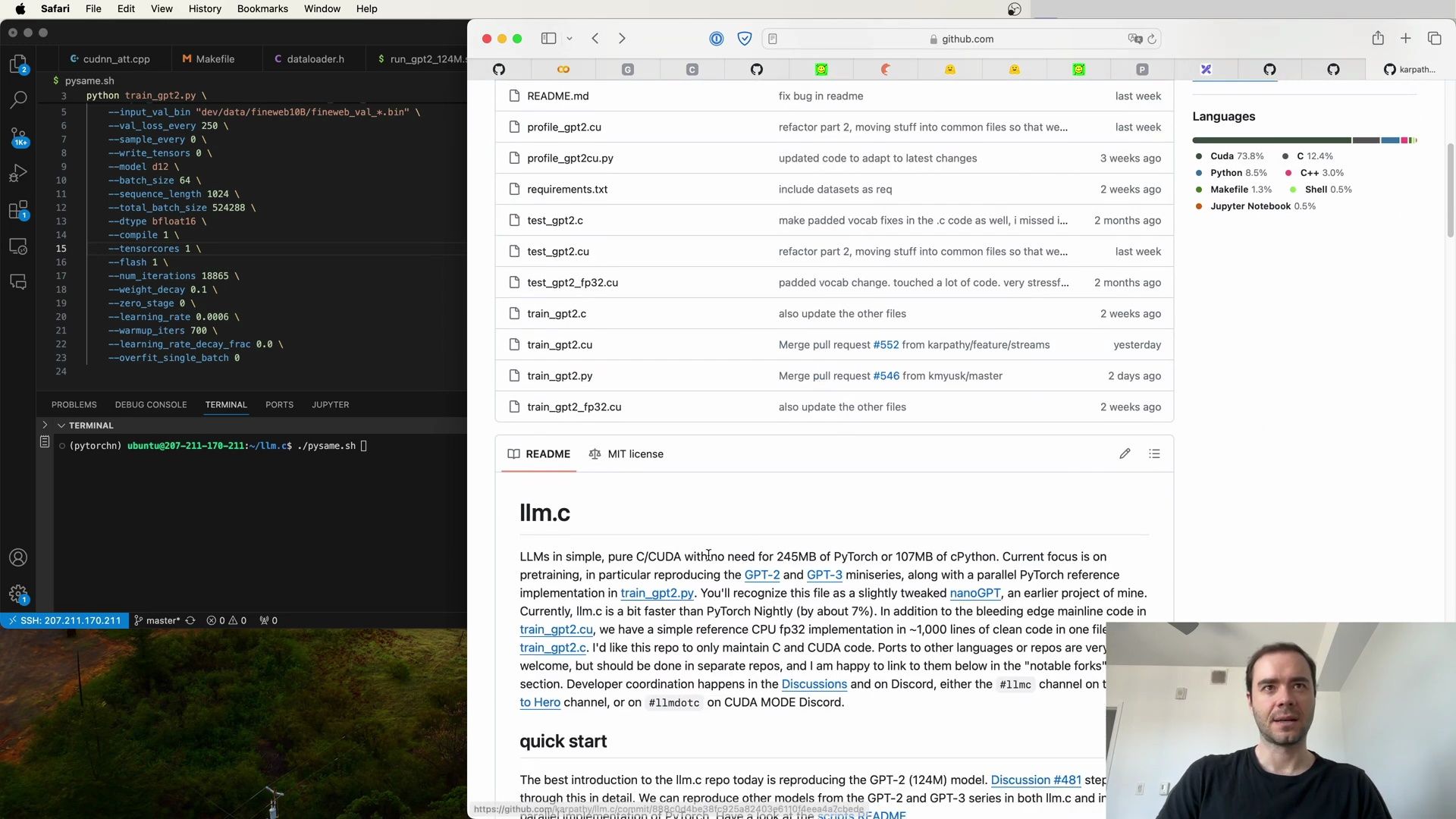

- Exploring Alternative Implementations

- LN.C: A High-Performance C-CUDA Implementation

- Training GPT2 in LN.C

- Side-by-Side Comparison

- Observations and Performance

- LN.C vs. PyTorch: Performance and Space Efficiency

- Command-Line Training with PyTorch

- LN.C: A Parallel Implementation

- LLM.C: Simple and Pure C/CUDA Language Models

- Quick Start for LLM.C

- Training Insights and Tokenization

- Consistency Between Implementations

- Visualizing Model Training and Tokenization

- Addressing Training Anomalies and Optimization

- Building and Understanding nanoGPT

- Community Engagement and Contributions

- Causal Self-Attention in GPT-2 Training

- Reflecting on Progress and Future Directions

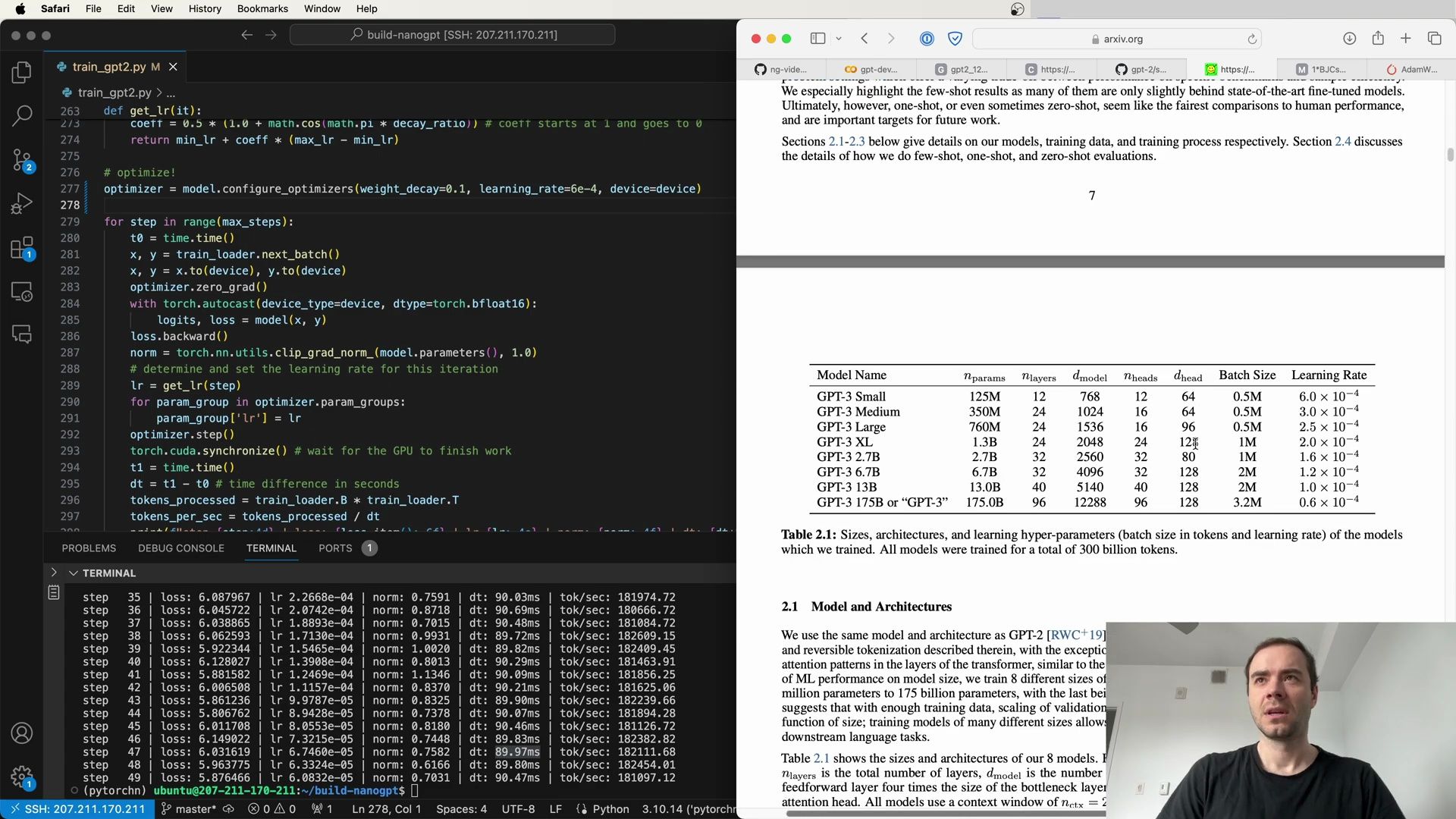

Advanced Model Configuration and Training Optimization

As we progress with the implementation of our GPT-2 model, it’s essential to optimize the training process to utilize our hardware efficiently and align with the best practices outlined in the GPT-2 and GPT-3 papers. Let’s delve into the configuration of our train_gpt2.py script, making key adjustments for performance gains.

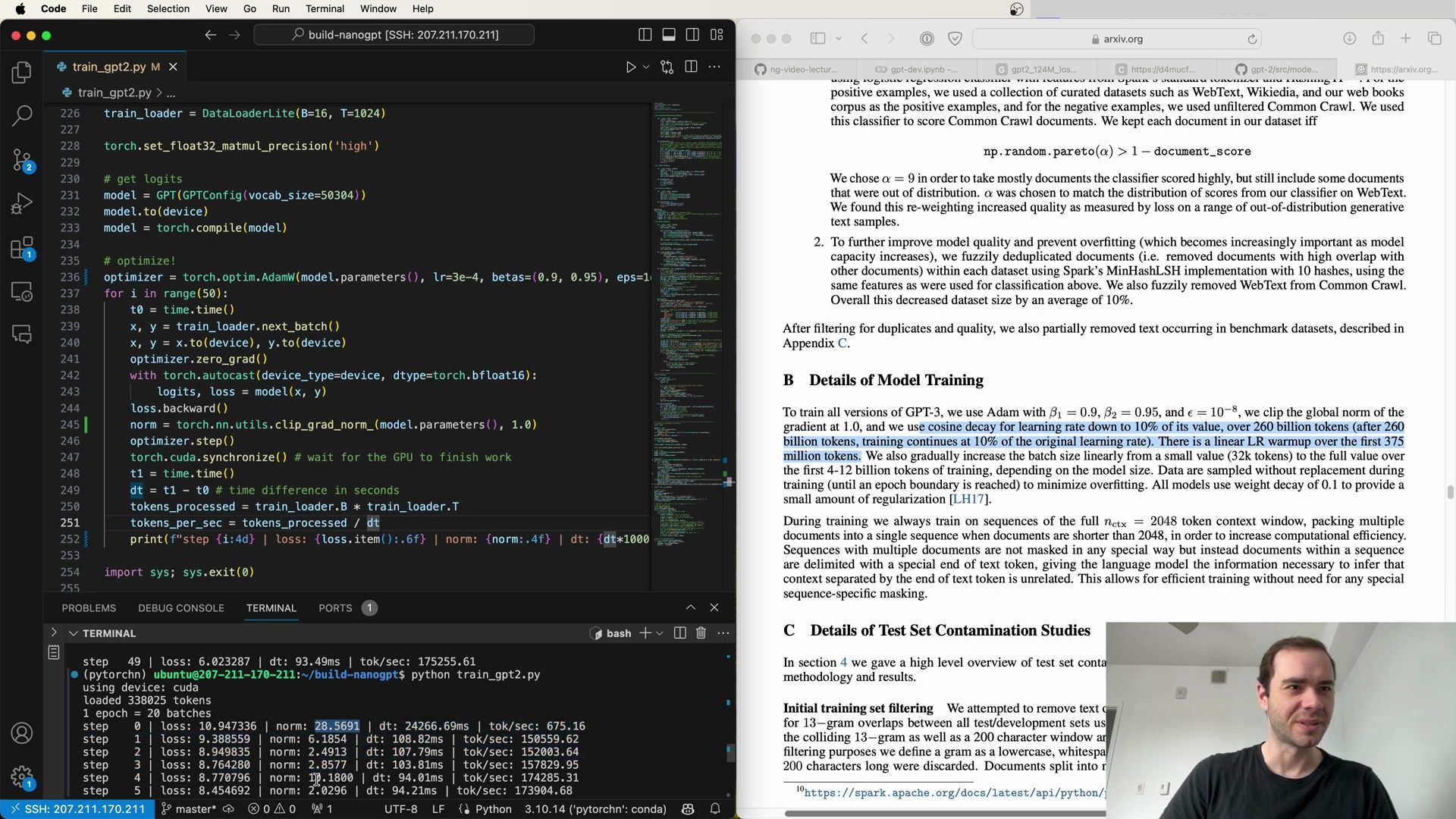

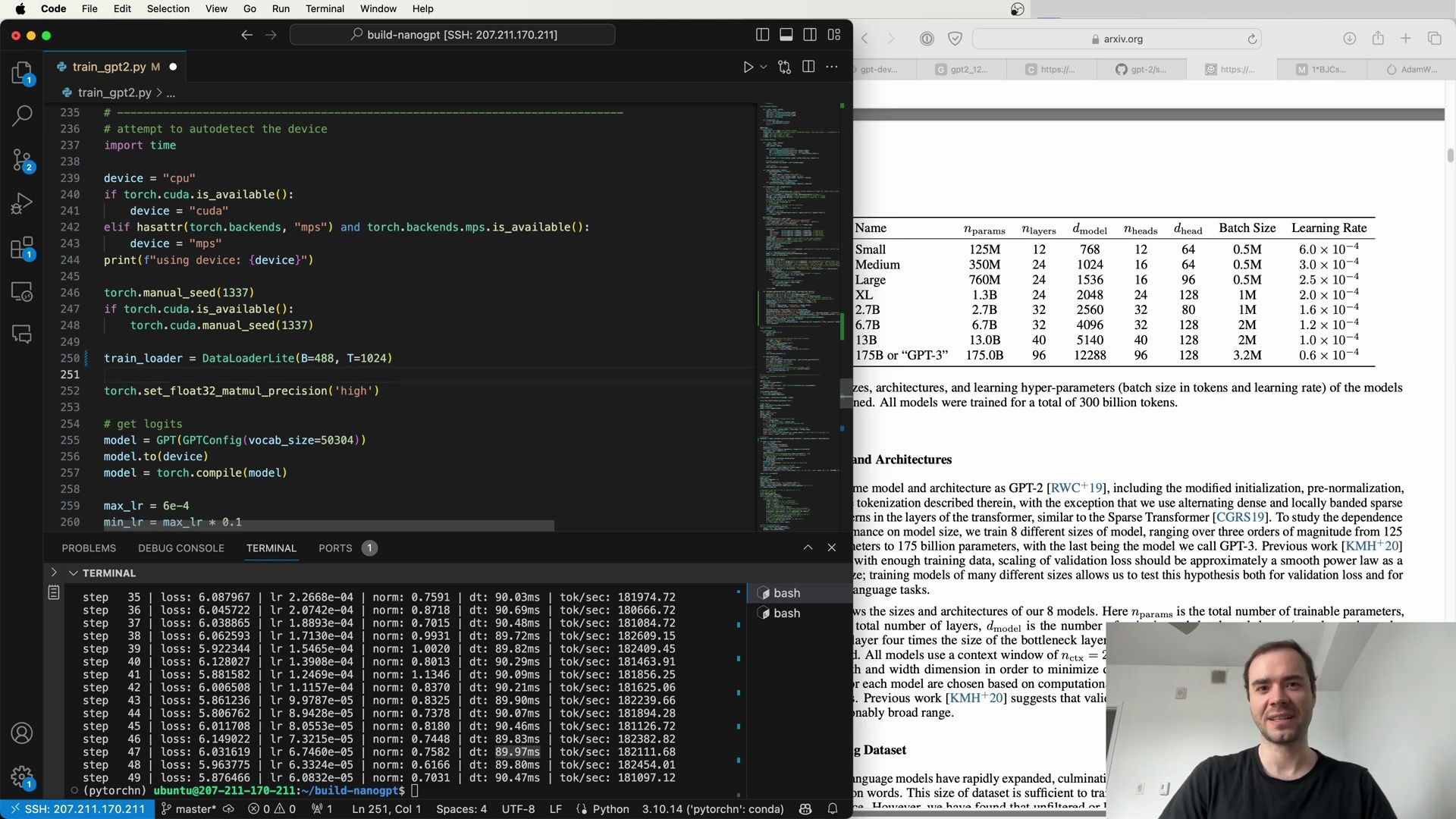

DataLoader and Model Initialization

To start, we initialize our data loader with a batch size (B) of 16 and a maximum sequence length (T) of 1024 tokens:

train_loader = DataLoader(list(B=16, T=1024))

Next, we set the matrix multiplication precision for floating-point operations to high, which is crucial for maintaining model accuracy during training:

torch.set_float32_matmul_precision('high')

We then initialize the GPT model configuration with an adjusted vocabulary size to a more hardware-friendly number, ensuring more efficient computation:

# Adjust vocabulary size for optimization

model = GPT(GPTConfig(vocab_size=53004))

model.to(device)

model = torch.compile(model)

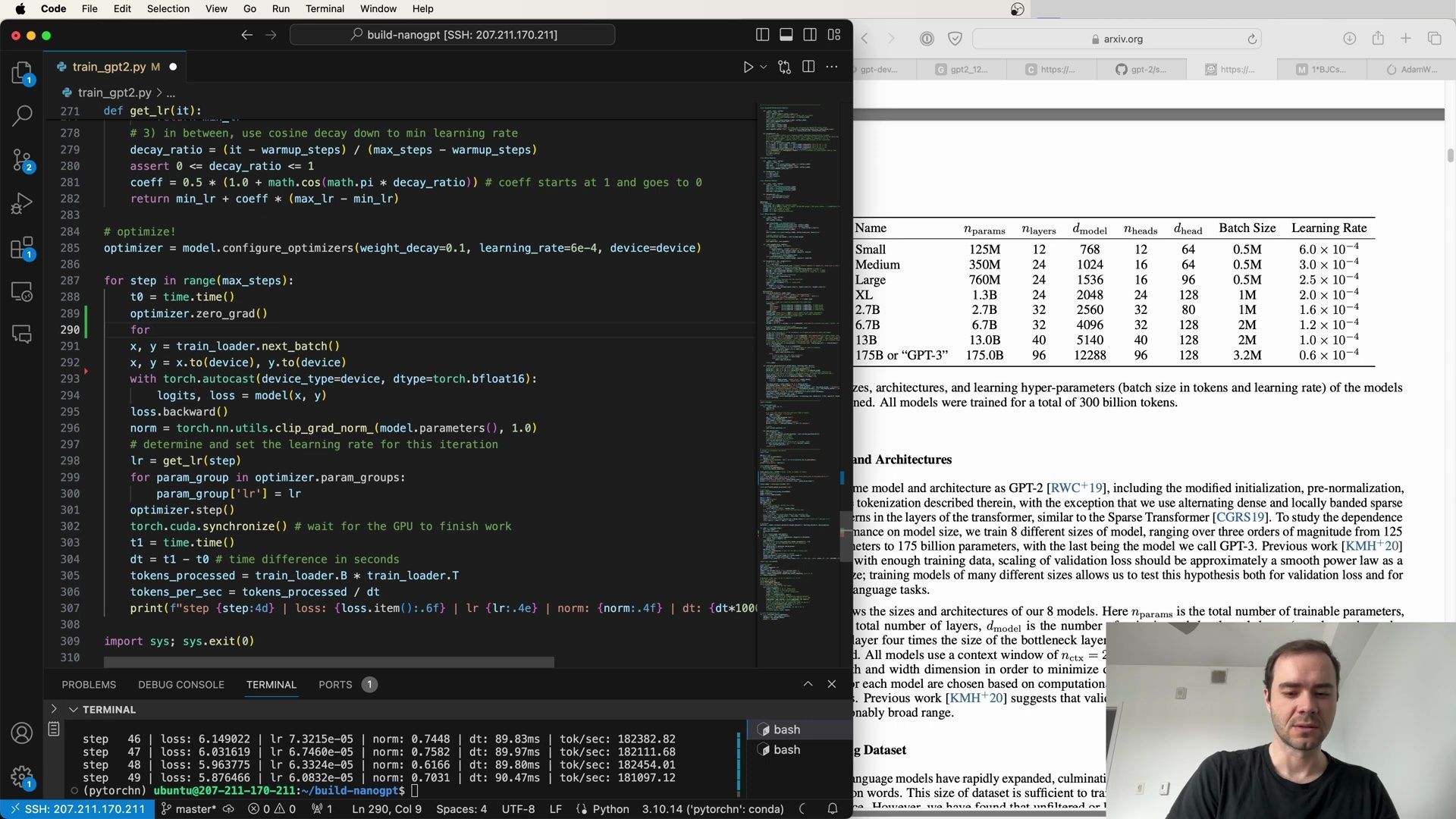

Optimization Loop

With our model and data loader set up, we proceed to the optimization loop. We use the AdamW optimizer with a learning rate of 3e-4, which is a commonly used optimizer for training language models due to its effectiveness in handling sparse gradients:

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4)

During the training loop, we ensure that each batch of data is processed and optimized efficiently:

for i in range(50):

t0 = time.time()

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

# Enable mixed precision training for performance optimization

with torch.autocast(mode='x', device_type=torch.bfloat16):

logits, loss = model(x, y)

loss.backward()

optimizer.step()

torch.cuda.synchronize() # wait for the GPU to finish work

t1 = time.time()

# Calculate tokens processed per second for performance measurement

dt = t1 - t0 # time difference in seconds

tokens_processed = train_loader.B * train_loader.T

tokens_per_sec = tokens_processed / dt

print(f"Step: {i}, Loss: {loss.item()}, Tokens/sec: {tokens_per_sec}")

In this loop, we employ mixed precision training using PyTorch’s autocast to enhance training speed without compromising the model’s performance. The torch.cuda.synchronize() function ensures that we accurately measure the time taken per iteration by waiting for the GPU to complete its work.

Ensuring Efficient Memory Usage

One key aspect of optimization involves ensuring that tensors and operations are aligned with the GPU’s architecture, which favors powers of two. By adjusting the vocabulary size to 53004—a number with many factors of two—we can reduce the need for special case handling in CUDA kernels, leading to improved training speed:

# Adjusting vocabulary size to a more hardware-friendly number

vocab_size = 53004

This seemingly minor change can lead to significant performance gains due to better utilization of the GPU’s memory bandwidth and compute capabilities.

Leveraging Kernel Fusion with FlashAttention

Another sophisticated optimization is the use of FlashAttention, an algorithmic improvement that fuses multiple operations into a single kernel, drastically reducing memory bandwidth usage. By replacing multiple lines of attention calculation with FlashAttention, we can further accelerate our training loop:

# Replace attention mechanism with FlashAttention for improved performance

with torch.autocast(mode='x', device_type=torch.bfloat16):

logits, loss = model.flash_attention(x, y)

Hyperparameter Alignment with GPT Papers

Finally, we align our training hyperparameters with the guidelines provided in the GPT-2 and GPT-3 papers. While the GPT-2 paper is vague about the specifics of the training setup, the GPT-3 paper’s appendix offers a more detailed list of hyperparameters used for training. We incorporate these recommendations into our training script to ensure we’re following the best practices that led to the success of these models.

In sum, by carefully configuring our data loader, optimizing tensor sizes, employing mixed precision training, leveraging kernel fusion, and adhering to the hyperparameters outlined in seminal papers, we can significantly enhance the performance and efficiency of our GPT-2 model training. These advanced optimizations are crucial for handling the computational demands of large language models and achieving state-of-the-art results.

Hyperparameter Tuning in Line with GPT-3

In our quest to replicate the architecture and success of GPT models, it becomes evident that the devil is in the details—especially when it comes to hyperparameter tuning. The GPT-3 paper provides a plethora of subtle but critical details that ultimately make a significant impact on the model’s performance.

Adam Optimizer Configuration

For fine-tuning our model’s training process, we’ll adhere closely to the configurations recommended in the GPT-3 paper. This involves setting the optimizer parameters to specific values that have been empirically proven to work well for such large models.

Let’s configure our optimizer with the recommended beta values:

# Configure optimizer with GPT-3 recommended hyperparameters

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4, betas=(0.9, 0.95), eps=1e-8)

These beta values control the exponential decay rates for the moment estimates in Adam, which in turn affect the step sizes taken during optimization.

Explicit Epsilon Value

With large-scale models, even the smallest parameters—such as epsilon—can have a significant impact. The GPT-3 paper specifies an epsilon value of 1e-8. Including this value explicitly ensures that we’re fully aligned with their setup:

# Explicitly define epsilon value as per GPT-3 recommendations

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4, betas=(0.9, 0.95), eps=1e-8)

Gradient Clipping

Gradient clipping is a technique used to prevent exploding gradients in neural networks, which is even more important in the context of such large models as GPT-3. The GPT-3 paper suggests clipping the gradient norm at 1.0 to stabilize training:

# Clip gradients during training to prevent exploding gradients

for i in range(50):

...

loss.backward()

torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)

optimizer.step()

...

The function torch.nn.utils.clip_grad_norm_ modifies gradients in place and ensures that the norm of the parameter gradients does not exceed 1.0.

Learning Rate Schedule

GPT-3 introduces a sophisticated learning rate schedule that involves a warmup period followed by a cosine decay. To implement this, we would need to adjust the learning rate over time:

# Example of learning rate scheduler setup (requires further implementation)

scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=260e9, eta_min=lr*0.1)

This scheduler requires additional logic to handle the warmup phase and adjust T_max and eta_min based on the number of tokens processed.

Efficient Training with Full Context Windows

To train more efficiently, the GPT-3 paper suggests always training on sequences with the full context window size. This means packing multiple documents into a single sequence when they are shorter than the maximum context length, which increases computational efficiency:

# Pack multiple documents into a single sequence for efficient training

train_sequences = pack_documents(train_data, max_context_size=2048)

The above pseudo-code implies that you would need a function like pack_documents to handle this operation. The GPT-3 model uses a special end-of-text token to separate documents within a sequence.

Test Set Contamination Studies

Moreover, the GPT-3 paper details their methodology to minimize test set contamination. They filter out training data that overlaps with test and development sets by searching for 13-gram overlaps and discarding any colliding sequences:

# Filter training set for test set contamination

filtered_train_data = filter_contamination(train_data, test_sets, n_gram=13)

Implementing such a filter function would involve defining what constitutes an overlap and how to handle the surrounding context of the identified overlap.

Performance Metrics and Debugging

During training, it is crucial to keep an eye on various performance metrics. One such metric is the norm of the gradient, which can provide insights into the training stability:

# Print out the norm of the gradient to monitor training stability

for i in range(50):

...

norm = torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=1.0)

print(f"Step: {i}, Loss: {loss.item()}, Gradient Norm: {norm}, Tokens/sec: {tokens_per_sec}")

Monitoring the gradient norm allows us to detect issues early on. A well-behaved norm indicates stable training, while a climbing norm may signal that the model is destabilizing.

Wrapping Up

By meticulously aligning our model’s hyperparameters with those detailed in the GPT-3 paper, employing gradient clipping, and monitoring performance metrics, we set the stage for training a language model that could potentially rival the performance of GPT-3. While we may not have access to the weights of GPT-3, these practices bring us closer to the frontier of language modeling.

In the next section, we’ll explore additional strategies to further enhance our model’s training process, including data sampling techniques and model regularization. Stay tuned as we continue to delve deeper into the intricacies of training state-of-the-art language models.

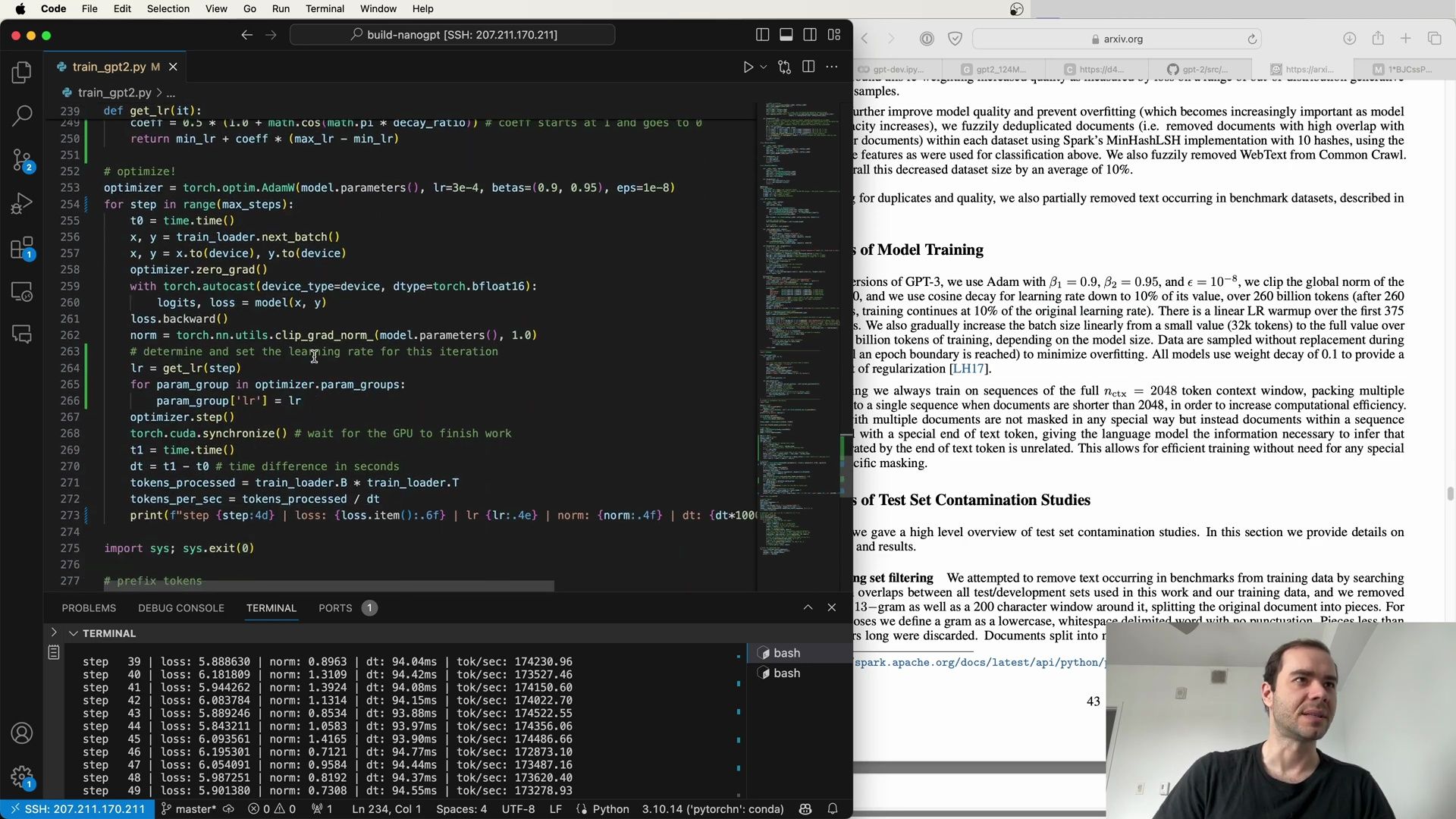

Advanced Learning Rate Scheduling

In the journey to replicate the performance of GPT-3, an advanced learning rate scheduling is used. This is not a fixed learning rate as one might typically see but involves a warmup phase followed by a cosine decay. This sophisticated scheduling was meticulously detailed in the GPT-3 paper and is essential to achieving optimal results.

Cosine Decay Learning Rate Schedule

GPT-3 employs a learning rate schedule that starts with a linear warmup period. After this, it transitions to a cosine decay that reduces the learning rate to 10% of its initial value over a vast span of 260 billion tokens. This is followed by continued training at this reduced rate.

Here’s how we can implement this in PyTorch:

import math

import torch

# Configuration parameters for the scheduler

max_lr = 3e-4

min_lr = max_lr * 0.1

warmup_steps = 375e6 # 375 million tokens

total_steps = 260e9 # 260 billion tokens

current_step = 0

# Function to calculate learning rate

def get_lr(current_step):

if current_step < warmup_steps:

# Linear warmup

return max_lr * (current_step + 1) / warmup_steps

else:

# Cosine decay to 10% of the max_lr

decay_steps = current_step - warmup_steps

decay_ratio = decay_steps / (total_steps - warmup_steps)

cos_decay = (1.0 + math.cos(math.pi * decay_ratio)) / 2

return min_lr + (max_lr - min_lr) * cos_decay

# Update learning rate at each training step

for step in range(int(total_steps)):

lr = get_lr(step)

for param_group in optimizer.param_groups:

param_group['lr'] = lr

It is important to note that the learning rate scheduling is a critical component of GPT-3’s training success, providing a controlled adjustment of the learning rate that promotes better convergence over time.

Batch Size Scaling

To further refine our training process, it is also recommended to scale up the batch size linearly. This gradual increase happens over the first 4 to 12 billion tokens of training, depending on the model size. Increasing the batch size over time allows the model to start learning from a more manageable amount of data and then leverage more significant amounts of data for learning as the training progresses.

Regularization with Weight Decay

GPT-3 introduces a small amount of regularization through weight decay. This strategy is designed to prevent overfitting by adding a penalty for larger weights in the model. In PyTorch, this can be achieved by setting the weight_decay parameter in the optimizer:

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4, betas=(0.9, 0.95), eps=1e-8, weight_decay=0.1)

This addition of weight decay acts as a form of L2 regularization, encouraging the model to find simpler patterns within the data, which can generalize better to new, unseen data.

Test Set Contamination Avoidance

An often overlooked but crucial aspect of training large language models is avoiding contamination between training and test datasets. For GPT-3, a meticulous process was used to ensure that the training data did not include any sequences that overlapped with the test or development sets.

This was accomplished by searching for 13-gram overlaps and removing not just the overlapping sequence but also a substantial context around it. If a document was split into multiple parts due to this filtering process and the resulting pieces were too short, they were discarded. This approach is highly important to ensure that the model’s performance is a true reflection of its ability to generalize and not merely a result of memorizing parts of the test set.

Gradient Clipping

Another critical aspect of stable training is gradient clipping, which we’ve already touched upon earlier. It’s worth reiterating its importance as an effective technique to prevent the exploding gradients problem, which is particularly prevalent in large models like GPT-3. With each training step, we clip the gradient norm at 1.0 to stabilize the training:

# Perform gradient clipping

for i in range(max_training_steps):

...

loss.backward()

norm = torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

optimizer.step()

...

The gradient norm values during the initial stages of training can be quite high due to the model parameters being randomly initialized. However, as training progresses, the norm typically stabilizes. Sudden spikes or deviations from this pattern can be an indicator of issues in the training process and should be investigated.

Training Efficiency with Full Context Windows

In order to maximize computational efficiency during training, GPT-3 always utilizes sequences with the full context window of 2048 tokens. This is done by packing multiple documents into a single sequence when they are shorter than the context size. No special masking is necessary for these sequences, as documents are separated by a special end-of-text token, allowing the model to distinguish unrelated contexts within the same sequence.

Implementing the Learning Rate Scheduler

To implement the aforementioned learning rate schedule with warmup and cosine decay, we can use the following code snippet:

# Set precision for matrix multiplication

torch.set_float32_matmul_precision('high')

# Initialize the model

model = GPT(GPTConfig(vocab_size=50304))

model.to(device)

model = torch.compile(model)

# Learning rate scheduling parameters

max_lr = 3e-4

min_lr = max_lr * 0.1

warmup_steps = 375e6

total_steps = 260e9

# Define the learning rate schedule function

def get_lr(i):

if i < warmup_steps:

return max_lr * (i + 1) / warmup_steps

if i > total_steps:

return min_lr

decay_ratio = (i - warmup_steps) / (total_steps - warmup_steps)

coeff = (1.0 + math.cos(math.pi * decay_ratio)) / 2

return min_lr + coeff * (max_lr - min_lr)

# Initialize the optimizer

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4, betas=(0.9, 0.95), eps=1e-8)

# Apply the learning rate schedule during optimization

for step in range(int(total_steps)):

lr = get_lr(step)

for param_group in optimizer.param_groups:

param_group['lr'] = lr

# Proceed with training steps

By adhering to these detailed training strategies, including the learning rate schedule, batch size scaling, regularization techniques, and avoidance of test set contamination, we approach the frontier of training large-scale language models. These practices are instrumental in refining the model to achieve performance on par with groundbreaking models such as GPT-3.

Learning Rate Scheduling in Practice

In the context of training models like GPT-3, the learning rate typically starts close to zero, ramps up linearly during a warmup phase, and then decays following a cosine curve to a predefined minimum. While the minimum learning rate in some settings might be zero, in the case of GPT-3, the team used a non-zero minimum as part of their cosine decay strategy.

The learning rate schedule is pivotal, affecting the model’s ability to converge to a suitable set of weights. During training, the learning rate is gradually decreased, following a cosine function, down to 10% of its maximum value over a significant amount of steps, and then training continues at this reduced rate.

Implementing the Learning Rate Scheduler

To implement this type of learning rate schedule in PyTorch, we can utilize the following code, which includes a linear warmup followed by a cosine decay:

import math

import time

import torch

# Set precision for matrix multiplication

torch.set_float32_matmul_precision('high')

# Initialize the model

model = GPT(GPTConfig(vocab_size=50304))

model.to(device)

model = torch.compile(model)

# Learning rate scheduling parameters

max_lr = 3e-4

min_lr = max_lr * 0.1

warmup_steps = 10

max_steps = 50

# Define the learning rate schedule function

def get_lr(step):

if step < warmup_steps:

# 1) Linear warmup for warmup_steps

return max_lr * (step + 1) / warmup_steps

if step >= max_steps:

# 2) After max_steps, use the minimum learning rate

return min_lr

# 3) Cosine decay down to min_lr in between

decay_ratio = (step - warmup_steps) / (max_steps - warmup_steps)

assert 0 <= decay_ratio <= 1

coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))

return min_lr + coeff * (max_lr - min_lr)

# Initialize optimizer

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4, betas=(0.9, 0.95), eps=1e-8)

# Training loop with learning rate scheduling

for step in range(max_steps):

t0 = time.time()

X, y = train_loader.next_batch()

X, y = X.to(device), y.to(device)

optimizer.zero_grad()

logits = model(X)

loss = loss_fn(logits, y)

loss.backward()

optimizer.step()

t1 = time.time()

# Print training metrics

print(f'Step: {step}, Loss: {loss.item()}, Time/step: {t1-t0}, tok/sec: {tokens_per_second}')

This code snippet begins with a linear increase of the learning rate during the warmup phase and then transitions to a cosine decay, where the learning rate gradually decreases to a minimum value, emulating the schedule used in GPT-3’s training.

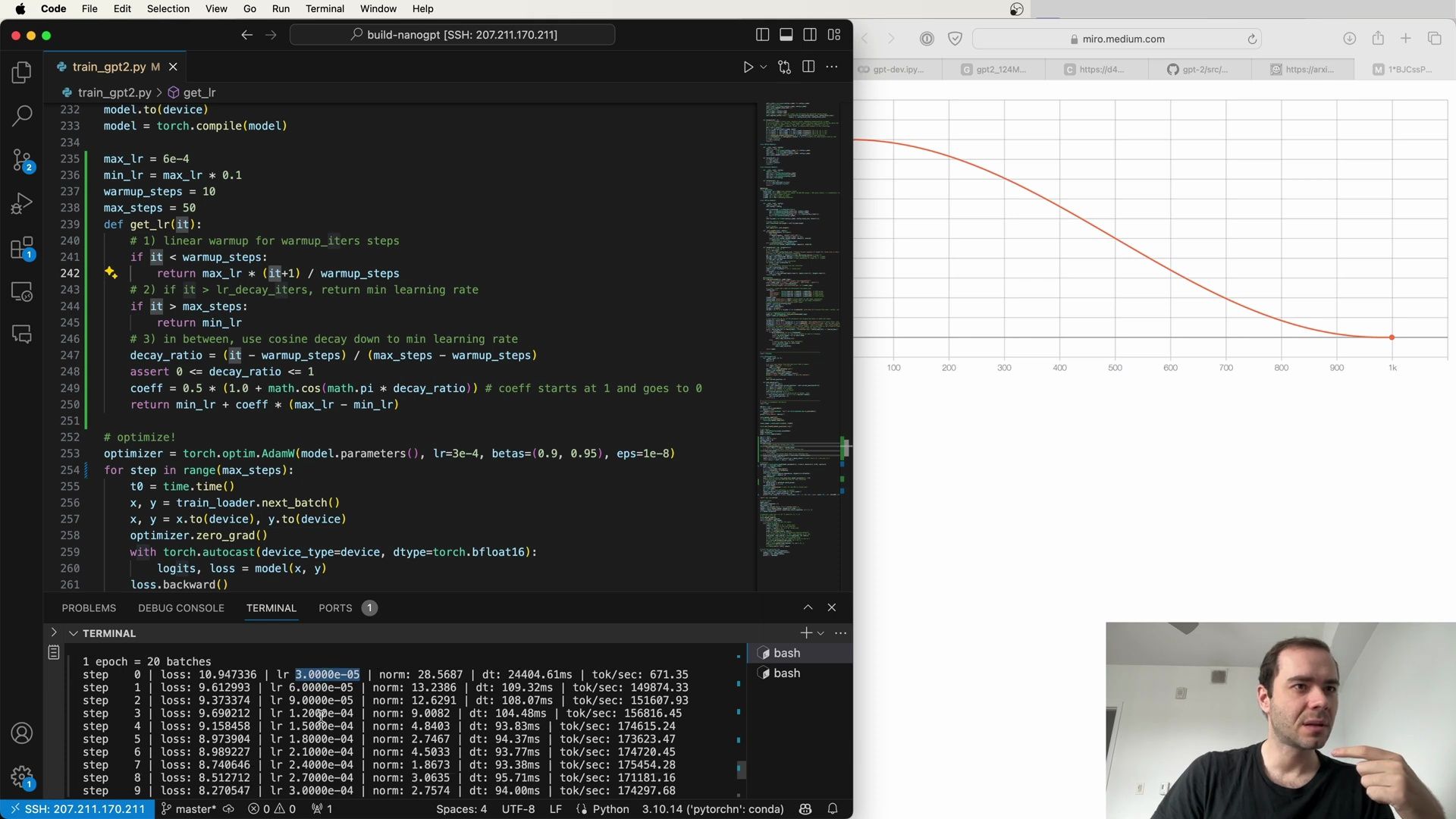

Learning Rate Scheduler Visualization

To better understand the effects of this learning rate schedule, let’s visualize it:

In the graph above, you can see the linear ramp-up during the warmup phase followed by the cosine decay. The visualization helps to intuitively grasp how the learning rate evolves over time, which is critical for the convergence of large models like GPT-3.

Model Specific Learning Rates

It’s important to note that the optimal maximum learning rate might vary depending on the model size. In the case of GPT-3, different configurations of the model used different learning rates:

- GPT-3 Small:

6.0 x 10^-4 - GPT-3 Medium:

3.0 x 10^-4 - GPT-3 Large:

2.5 x 10^-4 - GPT-3 XL:

2.0 x 10^-4 - And so on…

Each model configuration requires its own learning rate to optimize performance, as indicated in the partially visible table:

The learning rates for each model size are carefully chosen based on empirical results and are essential for training stability and efficiency.

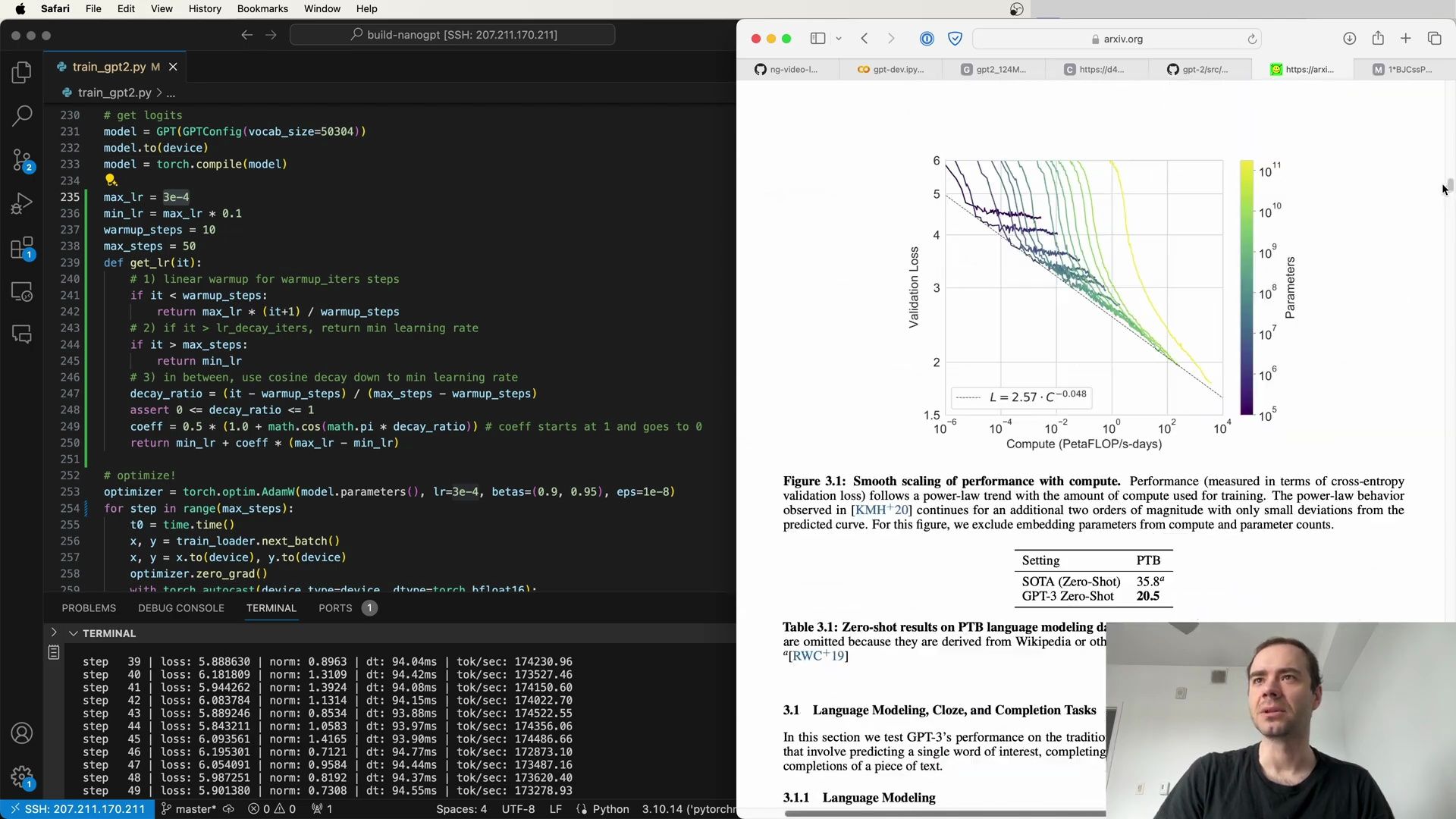

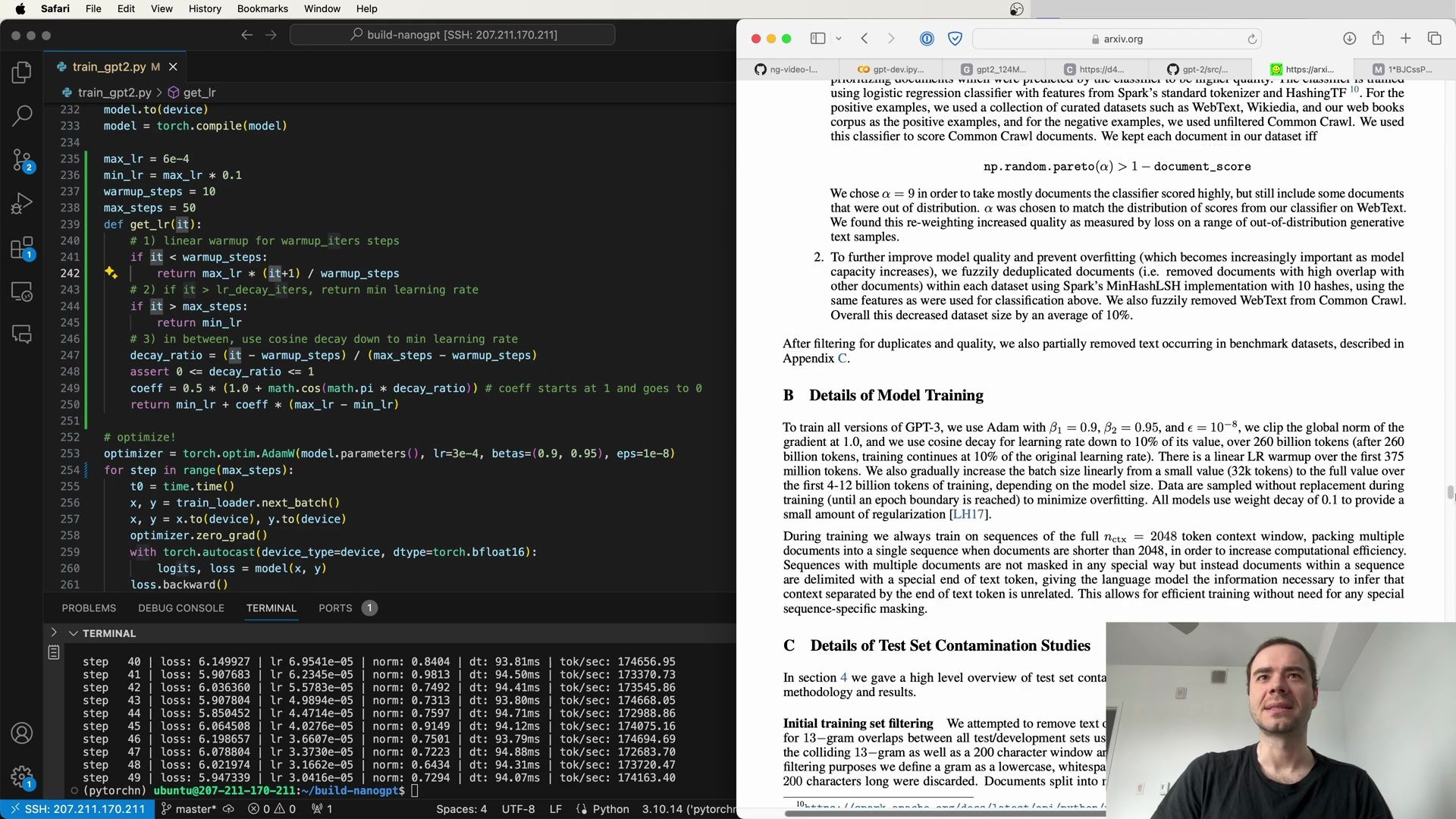

Insights into Training GPT-3

The training process for all versions of GPT-3 includes several vital components, as extracted from the script and screenshot descriptions:

- Adam Optimizer: The use of the Adam optimizer with hyperparameters

β1 = 0.9,β2 = 0.95, andε = 1e-8. - Gradient Clipping: Clipping the global norm of the gradient at

1.0to prevent gradients from exploding. - Cosine Decay: Employing cosine decay for the learning rate down to

10%of its initial value over an extensive number of tokens. - Batch Size Scaling: Increasing the batch size linearly from a small initial value to the full value over billions of tokens, depending on the model size.

- Regularization: Implementing weight decay of

0.1as a form of L1 regularization.

The models were trained for a total of 300 billion tokens, emphasizing the massive scale of the data processed.

Efficient Sequence Packing

To increase computational efficiency, sequences are packed with the full context window of 2048 tokens. Shorter documents are concatenated with longer ones, separated by a special end-of-text token, which signals the model that the contexts are not related:

# Sample Python code illustrating the concept:

sequences = []

for doc in documents:

if len(doc) < context_window:

sequences.append(doc + end_of_text_token)

else:

sequences.append(doc)

This approach allows multiple documents to be processed in a single sequence without the need for special masking, thus optimizing the use of computational resources.

Further Technical Details

An excerpt from the technical details reveals additional insights into the training regimen and the measures taken to prevent test set contamination:

-

Test Set Contamination Avoidance: Efforts were made to ensure that the training data was free from overlaps with the test and development sets. Any overlaps and a surrounding context window were removed, with too-short resulting documents being discarded.

-

Model Architectures: The models follow the same architecture as GPT-2, including pre-normalization and tokenization strategies, with variations in the size and parameters to explore the effects of scale.

Terminal and Training Metrics

The training process is often accompanied by a live feed of metrics in the terminal, providing real-time updates on the progress. These metrics typically include the number of tokens processed per second, loss values, and time taken per training step.

Such metrics are crucial for monitoring the model’s learning and ensuring that the training is proceeding as expected. This real-time feedback loop is an indispensable part of the training workflow for large models like GPT-3.

Understanding the Learning Rate Scheduler Code

In optimizing our model’s performance, we pay special attention to the learning rate as it crucially influences the model’s ability to converge to a good set of parameters. The learning rate scheduler plays a pivotal role in this process, and the code provided gives us an in-depth look at its implementation. Let’s dissect the provided Python code to understand each component:

# Learning rate schedule parameters

max_lr = 6e-4 # The maximum learning rate

min_lr = max_lr * 0.1 # The minimum learning rate (10% of max_lr)

warmup_steps = 10 # The number of steps over which the learning rate is warmed up

max_steps = 50 # The maximum number of steps

# The learning rate scheduling function

def get_lr(step):

if step < warmup_steps:

# Warm-up region: linearly increase the learning rate

return max_lr * (step + 1) / warmup_steps

if step >= max_steps:

# After max_steps, use the minimum learning rate

return min_lr

# In between: use cosine decay down to the minimum learning rate

decay_ratio = (step - warmup_steps) / (max_steps - warmup_steps)

assert 0 <= decay_ratio <= 1

coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))

return min_lr + coeff * (max_lr - min_lr)

# Optimizing with the AdamW optimizer

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4, betas=(0.9, 0.95), eps=1e-8)

# Training loop

for step in range(max_steps):

t0 = time.time()

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

with torch.autocast(device_type=device, dtype=torch.bfloat16):

logits, loss = model(x, y)

loss.backward()

optimizer.step()

t1 = time.time()

# Log training metrics

print(f'Step: {step}, Loss: {loss.item()}, Time/step: {t1-t0}, tok/sec: {tokens_per_second}')

In this code snippet, we start with a linear warmup of the learning rate, followed by a cosine decay. The learning rate increases linearly from zero (to avoid a non-useful learning rate of zero) to the maximum learning rate (max_lr), then it decays following a cosine curve down to the minimum learning rate (min_lr), which is 10% of the maximum learning rate.

Key Elements in the Learning Rate Scheduler Code:

- Linear Warmup: The learning rate starts very low and linearly increases during the warmup phase to the maximum learning rate, avoiding the pitfall of a zero learning rate.

- Cosine Decay: After the warmup phase, the learning rate follows a cosine decay pattern, which gradually decreases it to the minimum learning rate.

- AdamW Optimizer: The AdamW optimizer is used with specific hyperparameters

betas=(0.9, 0.95)andeps=1e-8. - Mixed Precision Training: The

torch.autocastcontext manager is used for mixed-precision training, which allows for faster computation and reduced memory usage.

Training Log Insights

During training, it’s essential to monitor the model’s performance through logs that provide insights into loss, learning rate, and tokens processed per second. Below is an example of the training log output:

step | loss: 5.8868630 | lr: 0.0006 | dt: 94.20ms | tok/sec: 174320.96

step | loss: 5.8488910 | lr: 0.0006 | dt: 94.20ms | tok/sec: 174320.96

...

From these logs, we can extract the following details:

- Step: The current step number in the training process.

- Loss: The loss value at the current step, which we aim to minimize.

- Learning Rate (lr): The learning rate applied at the current step, adjusted according to the schedule.

- Time per Step (dt): The duration in milliseconds it takes to complete one training step.

- Tokens per Second (tok/sec): The number of tokens processed per second.

By monitoring these metrics, we can gauge the efficiency and effectiveness of the training process.

Dataset Examples

The training data plays a significant role in how the model learns to perform tasks. Here are examples of the formatted datasets for PIQA and COPA, respectively:

- PIQA Example:

- Context: How to apply sealant to wood.

- Correct Answer: Using a brush, brush on sealant onto wood until it is fully saturated with the sealant.

- Incorrect Answer: Using a brush, drip on sealant onto wood until it is fully saturated with the sealant.

- COPA Example:

- Context: (CNN) Yuval Rabin, whose father, Yitzhak Rabin, was assassinated while serving as Prime Minister of Israel, criticized Donald Trump for appealing to ‘Second Amendment people’ in a speech and warned that the words that politicians use can incite violence and undermine democracy.

- Correct Answer: Referencing his father, who was shot and killed by an extremist amid political tension in Israel in 1995, Rabin condemned Donald Trump’s aggressive rhetoric.

- Incorrect Answer: Referencing his father, who was shot and killed by an extremist amid political tension in Israel in 1995, Rabin condemned Donald Trump’s aggressive rhetoric.

These examples illustrate how the datasets are structured, with contexts provided along with correct and incorrect answers, which helps the model learn to distinguish between the two.

Training and Debugging

As we train our model, it is not uncommon to encounter issues that require debugging. The training logs are invaluable in this regard, offering real-time feedback on the model’s performance and any potential issues. Debugging may involve examining the loss, gradients, or tokens per second to ensure that the training is progressing as expected.

In the screenshot, we observe a debug console with various metrics that provide insight into the training process. The console includes information such as the current step, loss, learning rate, and tokens processed per second, all of which are critical for diagnosing and resolving issues during training.

By carefully examining training logs and employing debugging tools, we can ensure that our model is learning effectively and make any necessary adjustments to the training regimen.

Diving Deeper into Learning Rate Scheduling

In the pursuit of fine-tuning our model’s training process, we delve into the nuances of learning rate scheduling, an area that has been popularized and extended significantly in recent research. The learning rate scheduler we’re exploring is inspired by the methods used in training GPT-2 and GPT-3, although with some modifications tailored to our specific needs.

The Learning Rate Scheduler in Detail

The scheduler’s code is a critical component of our training strategy. Below is a breakdown of the code block that defines the learning rate scheduler:

# Learning rate schedule parameters

max_lr = 6e-4 # The maximum learning rate

min_lr = max_lr * 0.1 # The minimum learning rate (10% of max_lr)

warmup_steps = 10 # The number of steps over which the learning rate is warmed up

max_steps = 50 # The maximum number of steps

# Learning rate scheduling function

def get_lr(t):

# Linear warmup for warmup_iters steps

if t < warmup_steps:

return max_lr * (t+1) / warmup_steps

# Cosine decay down to min learning rate

decay_ratio = (t - warmup_steps) / (max_steps - warmup_steps)

coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio)) # coeff starts at 1 and goes to 0

assert 0 <= decay_ratio <= 1

return min_lr + coeff * (max_lr - min_lr)

# Optimizer instantiation using AdamW

optimizer = torch.optim.AdamW(model.parameters(), lr=3e-4, betas=(0.9, 0.95), eps=1e-8)

# Training loop for updating model parameters

for step in range(max_steps):

# ... training code ...

Key Points to Remember

- Warmup Period: The learning rate starts very low and increases linearly during the warmup phase to the maximum learning rate.

- Cosine Decay: Following the warmup, the learning rate decreases following a cosine curve to the minimum learning rate.

- Parameters: The

max_lr,min_lr,warmup_steps, andmax_stepsparameters can be adjusted to suit the model’s training needs.

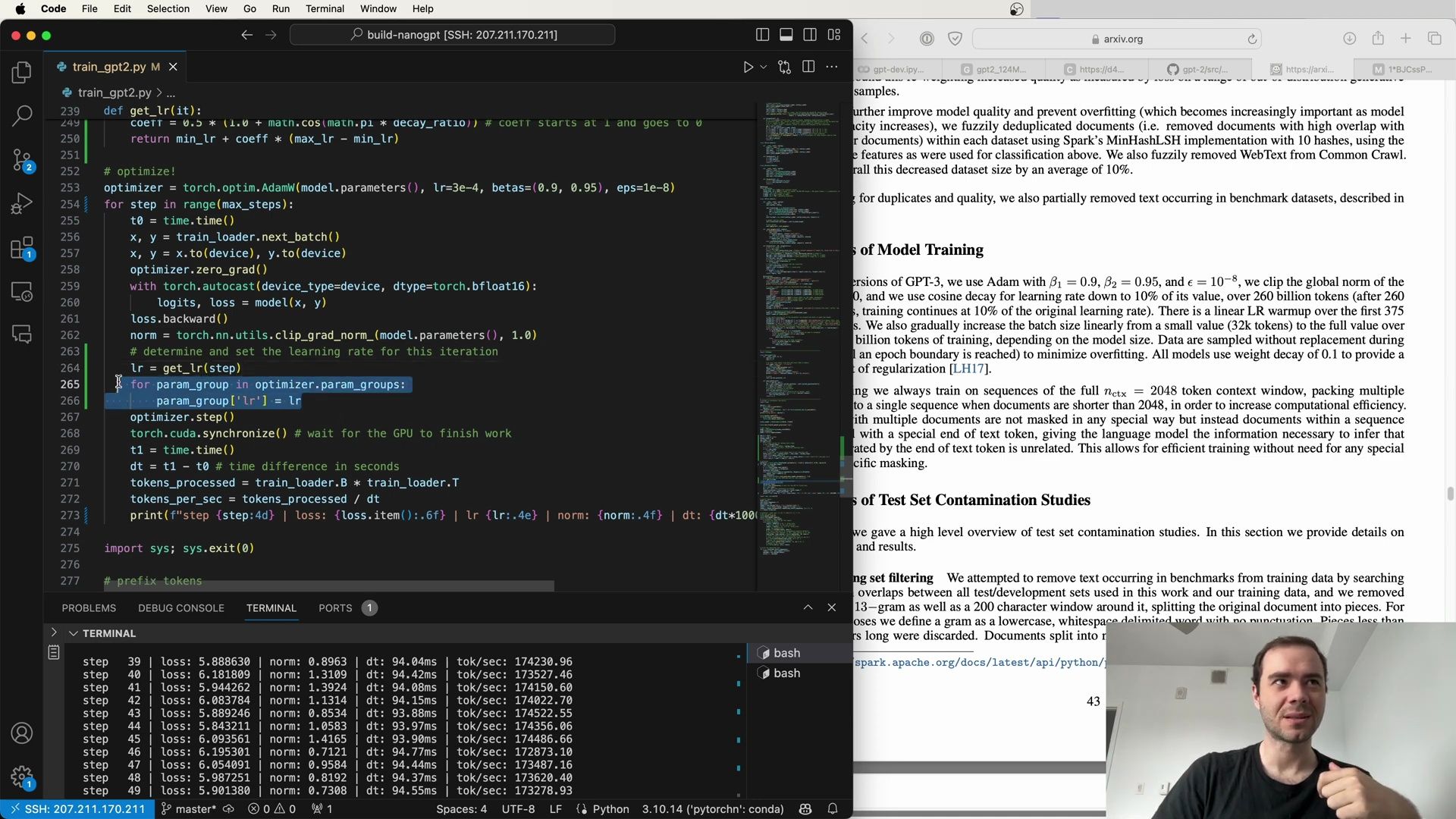

Model Training and Optimization

The training process is at the heart of developing a robust and effective model. Let’s take a closer look at the training loop, where the learning rate scheduling function and the optimizer come into play:

# Training loop

for step in range(max_steps):

# Record the time at the start of the step

t0 = time.time()

# Obtain the next batch of training data

x, y = train_loader.next_batch()

# Move the batch to the appropriate device (e.g., GPU)

x, y = x.to(device), y.to(device)

# Prepare the model for a new gradient calculation

optimizer.zero_grad()

# Forward pass through the model with autocasting to mixed precision

with torch.autocast(device_type=device, dtype=torch.bfloat16):

logits, loss = model(x, y)

# Backward pass to compute the gradient

loss.backward()

# Clip gradients to prevent exploding gradient problem

norm = torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

# Update the learning rate based on the current step

lr = get_lr(step)

for param_group in optimizer.param_groups:

param_group['lr'] = lr

# Update model parameters

optimizer.step()

# Synchronize GPU to ensure all tasks are completed before moving on

torch.cuda.synchronize()

# Record the time at the end of the step

t1 = time.time() - t0

Insights from the Training Loop

- Gradient Clipping: To prevent the notorious exploding gradient problem, gradients are clipped to a norm of 1.0.

- Learning Rate Updates: The learning rate for each step is determined by the

get_lrfunction and dynamically updated. - Mixed Precision Training: The

torch.autocastcontext manager is used to enable mixed precision, thereby accelerating computation and reducing memory usage. - Synchronization: The

torch.cuda.synchronize()function ensures that all GPU operations are completed before proceeding to the next step.

The following screenshot illustrates a debug console outputting training metrics, providing visibility into the training dynamics:

Tackling Training Data Quality

Training data quality is paramount for the performance of language models. To ensure high-quality data, various strategies are employed:

- Logistic Regression Classifier: Used to score documents from the Common Crawl dataset, with a preference for higher-scored documents.

- Pareto Distribution: To select documents, the Pareto distribution is used, with a parameter

achosen to match the classifier’s score distribution on WebText. - Fuzzy Deduplication: Documents with high overlap are removed using Spark’s MinHashLSH, decreasing dataset size and improving quality.

- Excluding Benchmarks: Partial removal of text occurring in benchmark datasets mitigates overfitting and test set contamination.

Elaborating on GPT-3 Training Details

For GPT-3, several specific training strategies are outlined:

- Adam Optimizer: The Adam optimizer is configured with

β1 = 0.9,β2 = 0.95, andϵ = 10^-8. - Gradient Clipping: The global norm of the gradients is clipped to 1.0.

- Cosine Decay: The learning rate is decayed to 10% of its original value over 260 billion tokens.

- LR Warmup: A linear learning rate warmup is applied over the first 375 million tokens.

- Batch Size Scaling: The batch size is gradually increased during the initial phase of training.

- Sequence Packing: To increase computational efficiency, multiple documents are packed into a single sequence when shorter than the full context window (

nctx = 2048tokens), with no special masking required.

These intricate details highlight the complexity and thoughtfulness that goes into training state-of-the-art language models like GPT-3. The process involves a fine balance between learning rate scheduling, data quality control, and optimization techniques. Through careful tuning and monitoring, these models can achieve remarkable performance on a wide range of tasks.

Understanding Batch Size Ramp-Up

In the realm of model optimization, one approach that has been discussed is the gradual increase in batch size—referred to as batch size ramp-up. This technique starts with a very small batch size and linearly increases it over time. However, we have chosen to skip this step for a couple of reasons:

- Batch size ramp-up complicates the arithmetic of the optimization process, as the number of tokens processed at each step changes.

- It is primarily a systems and speed optimization rather than an algorithmic one.

- In the early stages of training, the model is learning simple biases such as which tokens are used frequently and which are not, leading to highly correlated gradients across examples.

Consequently, we keep the batch size constant to maintain simplicity in our optimization calculations.

Sampling Data Without Replacement

Our training approach involves sampling data without replacement until an epoch boundary is reached. This means that once a sequence is drawn for training, it is not eligible to be drawn again until the next epoch. Our data loader iterates over chunks of data, exhausting a pool before moving on to the next set. Here’s how our custom DataLoaderLite class is structured:

class DataLoaderLite:

def __init__(self, B, T):

self.B = B # Batch Size

self.T = T # Token Size

# At initialization, load tokens from disk and store them in memory

with open('input.txt', 'r') as f:

text = f.read()

enc = tokenizer.get_encoding('gpt2')

tokens = enc.encode(text)

tokens = torch.tensor(tokens)

print(f'Total tokens loaded: {len(tokens)}')

This class ensures that once tokens are processed in a batch, they are not reused until the next epoch, thereby reducing the likelihood of overfitting.

Implementing Weight Decay

Weight decay is another tool in our optimization arsenal, providing a small amount of regularization to the model. We integrate this into our learning rate scheduler and optimizer configuration as follows:

# Learning rate and weight decay function

def get_lr(t, max_lr, min_lr, warmup_steps, max_steps):

if t < warmup_steps:

return max_lr * (t + 1) / warmup_steps

decay_ratio = (t - warmup_steps) / (max_steps - warmup_steps)

coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))

return min_lr + coeff * (max_lr - min_lr)

# Configure optimizer with weight decay

def configure_optimizers(model, weight_decay=1e-1, learning_rate=6e-4, device):

optimizer = torch.optim.AdamW(

model.parameters(),

lr=learning_rate,

betas=(0.9, 0.95),

eps=1e-8,

weight_decay=weight_decay

)

return optimizer

# Example usage within the training loop

optimizer = configure_optimizers(model, 0.1, 6e-4, device)

The get_lr function is designed to adjust the learning rate using a cosine decay schedule, while the configure_optimizers function sets up our optimizer with the necessary parameters, including weight decay.

Training Loop Enhancements

Our enhanced training loop includes the configured learning rate scheduler and weight decay. We also keep track of the processing speed to monitor the model’s efficiency:

for step in range(max_steps):

t0 = time.time()

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

with torch.autocast(device_type=device, dtype=torch.bfloat16):

logits, loss = model(x, y)

loss.backward()

norm = torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

lr = get_lr(step, max_lr, min_lr, warmup_steps, max_steps)

for param_group in optimizer.param_groups:

param_group['lr'] = lr

optimizer.step()

torch.cuda.synchronize() # Wait for GPU to finish work

t1 = time.time()

td = t1 - t0 # Time difference in seconds

tokens_processed = train_loader.B * train_loader.T

tokens_per_sec = tokens_processed / td

# Output performance metrics

print(f'step {step} loss: {loss.item():.6f} lr: {lr:.7f} | dt: {td*1000:.2f}ms | tok/sec: {tokens_per_sec:.2f}')

This training loop provides visibility into the model’s learning process, with clear metrics to analyze its performance in terms of loss, learning rate, processing time, and tokens processed per second.

GPT-3 Training Strategies

In the training of GPT-3, we adhere to the following practices:

- Adam Optimizer: Configured with β1 = 0.9, β2 = 0.95, and ε = 10^-8.

- Gradient Clipping: The global norm of the gradients is clipped to 1.0.

- Cosine Decay: The learning rate is decayed to 10% of its value over 260 billion tokens, continuing at this reduced rate thereafter.

- Linear Warmup: A warmup period is included over the first 375 million tokens.

- Data Sampling: We sample data without replacement during training to minimize overfitting.

- Weight Decay: A weight decay of 0.1 is used for regularization.

These strategies are encapsulated within our model’s configure_optimizers method:

class GPT(nn.Module):

# ... model definition ...

def configure_optimizers(self, weight_decay, learning_rate, device):

# Start with all parameters that require gradients

param_dict = {pn: p for pn, p in self.named_parameters() if p.requires_grad}

# Group parameters for weight decay

decay_params = [p for n, p in param_dict.items() if p.dim() > 2]

nodecay_params = [p for n, p in param_dict.items() if p.dim() <= 2]

optim_groups = [

{'params': decay_params, 'weight_decay': weight_decay},

{'params': nodecay_params, 'weight_decay': 0.0}

]

optimizer = torch.optim.AdamW(

optim_groups,

lr=learning_rate,

betas=(0.9, 0.95),

eps=1e-8

)

return optimizer

The configure_optimizers method creates an optimizer with different parameter groups, some of which have weight decay applied. This nuanced approach allows us to tailor the regularization to the specific needs of different parts of the model.

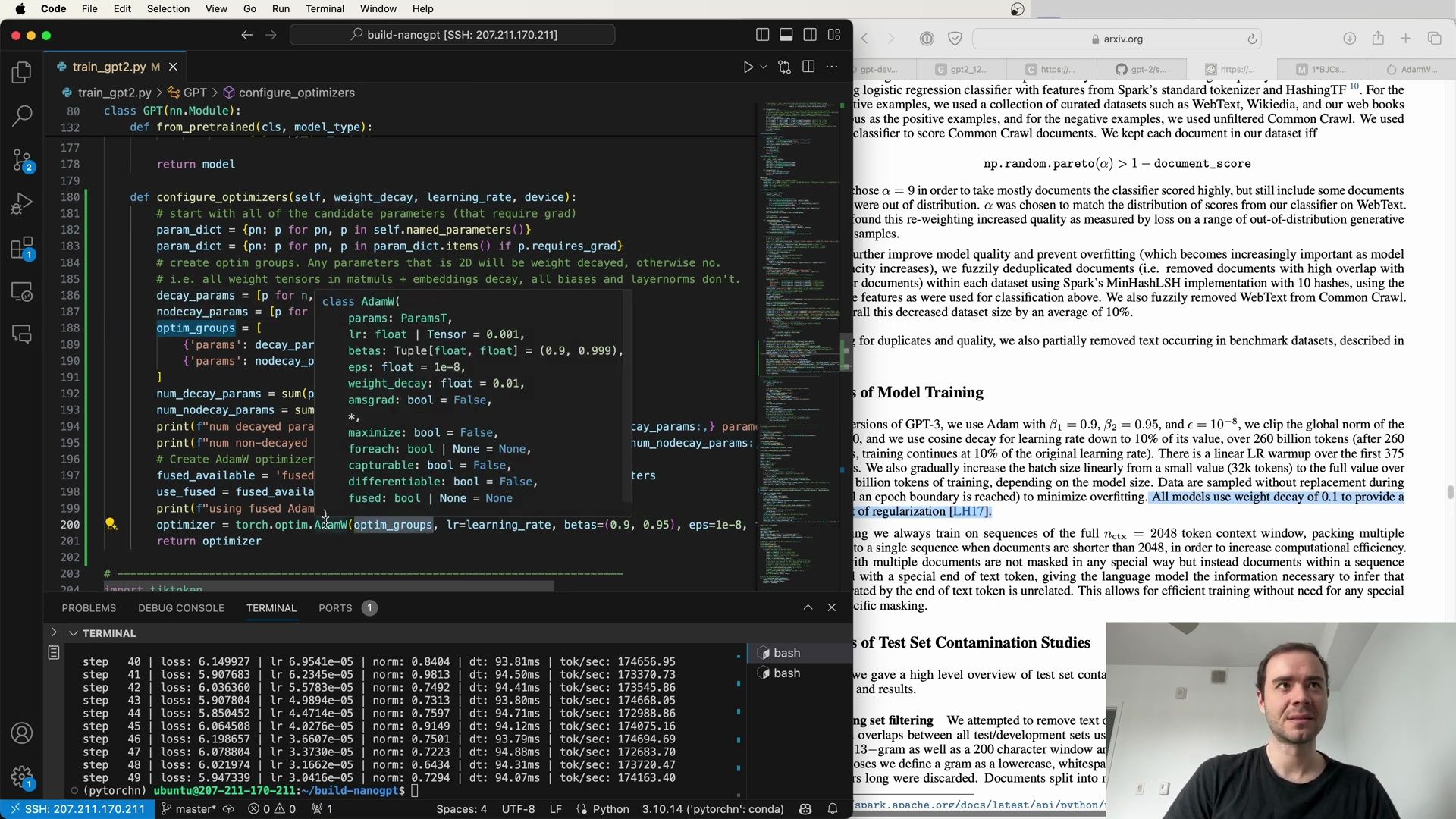

Fine-Tuning the Weight Decay Parameter

When configuring our optimizer, a key consideration is the weight_decay parameter. This parameter is essential for regularization, as it helps prevent individual weights from growing too large and encourages the distribution of importance across more neurons. In our implementation, this parameter is carefully fine-tuned and passed into a list of optimization groups, ultimately used by the AdamW optimizer.

The process involves segregating the model parameters into groups based on whether they should experience weight decay or not. Typically, biases and one-dimensional tensors, such as layer normalization scales, do not undergo weight decay. On the other hand, weights involved in matrix multiplications and embeddings are subject to decay. This distinction is made clear in the following code block, which details the configure_optimizers method of our GPT class:

class GPT(nn.Module):

def configure_optimizers(self, weight_decay, learning_rate, device):

# Start with all parameters that require gradients

param_dict = {n: p for n, p in self.named_parameters() if p.requires_grad}

# Split parameters into decay and no-decay groups

decay_params = [p for n, p in param_dict.items() if p.dim() > 2]

nodecay_params = [p for n, p in param_dict.items() if p.dim() <= 2]

optim_groups = [

{'params': decay_params, 'weight_decay': weight_decay},

{'params': nodecay_params, 'weight_decay': 0.0}

]

# Print the number of decay and no-decay parameters

num_decay_params = sum(p.numel() for p in decay_params)

num_nodecay_params = sum(p.numel() for p in nodecay_params)

print(f'Using {num_decay_params} decay parameters and {num_nodecay_params} no-decay parameters')

# Use the fused AdamW optimizer if available and running on CUDA

fused_available = 'fused' in inspect.getsource(torch.optim.AdamW).parameters

use_fused = fused_available and 'cuda' in device

print(f'Using fused AdamW optimizer: {use_fused}')

optimizer = torch.optim.AdamW(

optim_groups,

lr=learning_rate,

betas=(0.9, 0.95),

eps=1e-8

)

return optimizer

In the above method, we separate parameters into two lists: one for parameters that will undergo weight decay (decay_params) and one for parameters that will not (nodecay_params). The optimizer is then constructed with these two parameter groups, ensuring that only the appropriate parameters are regularized.

This nuanced approach allows the model to leverage the benefits of weight decay without negatively affecting parameters that should not be regularized, such as biases and normalization factors.

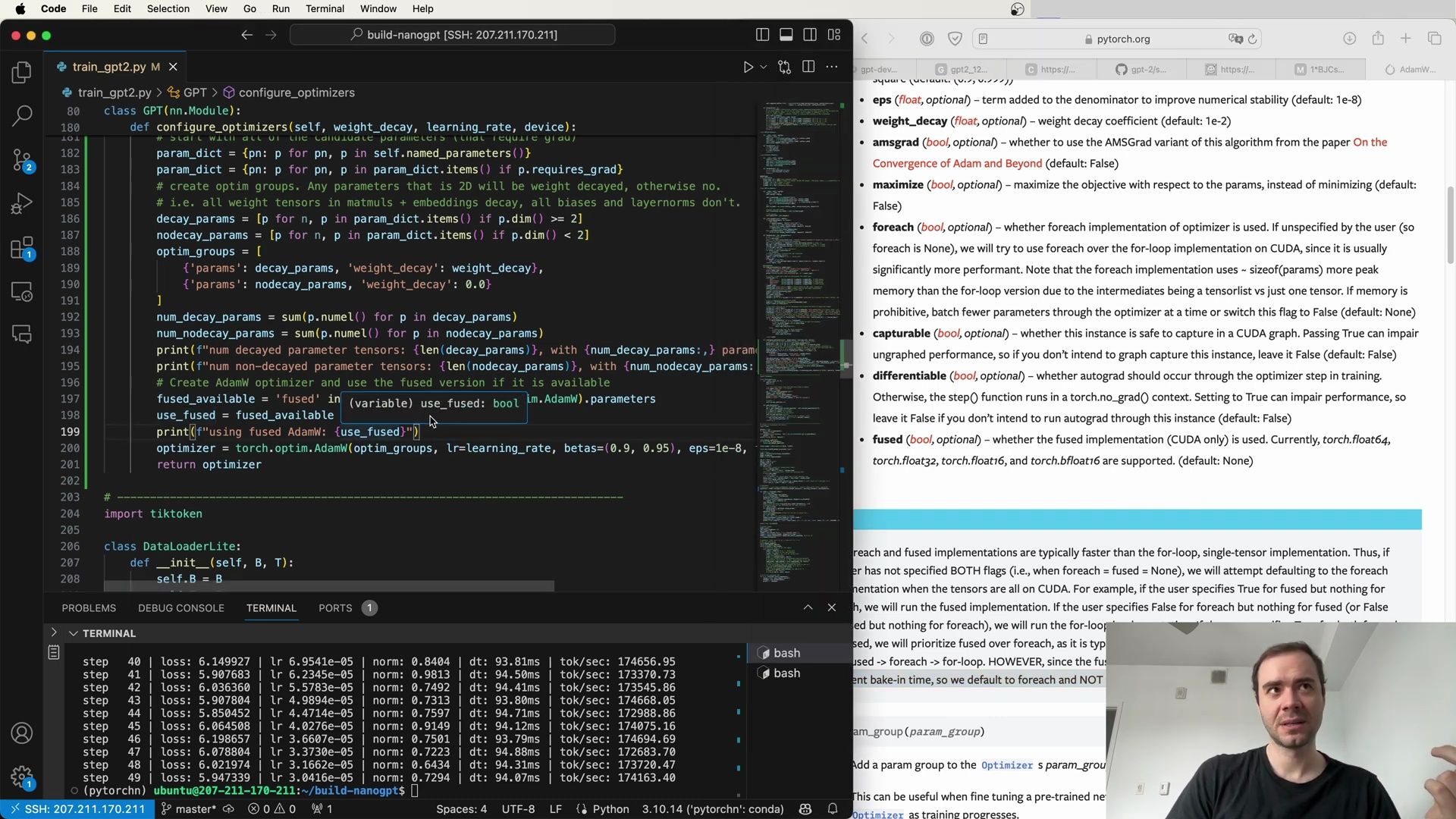

Leveraging Fused AdamW for Performance

An important optimization in our code is the use of the fused version of the AdamW optimizer when it’s available. This is a relatively new feature in PyTorch that can provide significant performance improvements, especially when running on CUDA-enabled devices. Fused AdamW essentially combines multiple operations into a single kernel call, which reduces the overhead of launching multiple kernels and can speed up the optimization process.

The following code snippet shows the detection of the fused AdamW optimizer and its conditional usage:

# ... within configure_optimizers method ...

# Check for the availability of the fused AdamW optimizer

fused_available = 'fused' in inspect.getsource(torch.optim.AdamW).parameters

use_fused = fused_available and 'cuda' in device

print(f'Using fused AdamW optimizer: {use_fused}')

# Create the AdamW optimizer, potentially using the fused version

optimizer = torch.optim.AdamW(

optim_groups,

lr=learning_rate,

betas=(0.9, 0.95),

eps=1e-8

)

return optimizer

By using the fused AdamW optimizer, we minimize the number of individual operations that need to be performed during each optimization step, leading to a more efficient training process.

Kernel Fusion Optimization

Kernel fusion is a technique that amalgamates several GPU kernel launches into a single launch. This can significantly reduce the computational overhead associated with the optimization step in training neural networks. When discussing the AdamW optimizer’s update step, we refer to kernel fusion as a method to streamline the update process across all parameter tensors. Instead of updating each tensor individually, which would result in multiple kernel launches, kernel fusion allows for a single kernel to update all parameters at once.

This optimization is particularly beneficial when using CUDA, as it maximizes the use of GPU resources and leads to faster execution times.

# ... within the configure_optimizers method ...

# Determine if the fused optimizer can be used

fused_available = 'fused' in inspect.getsource(torch.optim.AdamW).parameters

use_fused = fused_available and 'cuda' in device

print(f'Using fused AdamW optimizer: {use_fused}')

# The optimizer is instantiated with the option to use the fused variant if available

optimizer = torch.optim.AdamW(

optim_groups,

lr=learning_rate,

betas=(0.9, 0.95),

eps=1e-8

)

return optimizer

This method of optimization underscores the importance of staying up to date with advancements in machine learning libraries, as such updates can provide tangible benefits to model training efficiency.

Conclusion and Next Steps

As we continue to push the boundaries of neural network training, it’s crucial to fine-tune every aspect of the optimization process. From the careful selection of parameters that undergo weight decay to the adoption of fused kernel operations, each choice plays a role in enhancing the model’s performance. In the next section of our training journey, we will delve deeper into additional strategies and techniques that can further refine our model’s learning process. Stay tuned for more insights and code snippets that will help you master the art of neural network optimization.

Refining the configure_optimizers Method

Building upon our previous optimizer configuration, let’s delve into the specifics of the configure_optimizers method within our GPT class. This method plays a crucial role in distinguishing which parameters undergo weight decay and which do not. To ensure that our model performs at its best, we’ve got to be precise about how we apply weight decay.

Here’s an enhanced version of the configure_optimizers method:

class GPT(nn.Module):

def configure_optimizers(self, weight_decay, learning_rate, device):

# Start with all parameters that require gradients

param_dict = {n: p for n, p in self.named_parameters() if p.requires_grad}

# Split parameters into decay and no-decay groups

# Weight tensors in matrix multiplications and embeddings will decay,

# biases and layer norms will not

decay_params = [p for n, p in param_dict.items() if p.dim() >= 2]

nodecay_params = [p for n, p in param_dict.items() if p.dim() < 2]

optim_groups = [

{'params': decay_params, 'weight_decay': weight_decay},

{'params': nodecay_params, 'weight_decay': 0.0}

]

# Count the total number of decay and no-decay parameters

num_decay_params = sum(p.numel() for p in decay_params)

num_nodecay_params = sum(p.numel() for p in nodecay_params)

# Print the parameter counts for debugging

print(f"Decay params: {num_decay_params}, No-decay params: {num_nodecay_params}")

# Use the fused AdamW optimizer if available and running on CUDA

fused_available = 'fused' in inspect.getsource(torch.optim.AdamW).parameters

use_fused = fused_available and 'cuda' in device

print(f"Using fused AdamW optimizer: {use_fused}")

optimizer = torch.optim.AdamW(

optim_groups,

lr=learning_rate,

betas=(0.9, 0.95),

eps=1e-8,

use_fused=use_fused

)

return optimizer

As we can see from the script, the method begins by collecting all the trainable parameters. It then assigns these parameters to two distinct groups:

- Decay parameters: These are the weight tensors that are found in matrix multiplications and embeddings, which will undergo weight decay.

- No-decay parameters: These include biases and layer normalization scales, which will not undergo weight decay.

By segregating the parameters into these two categories, we can apply a different weight_decay value to each group, thereby ensuring that only the appropriate parameters are regularized.

One of the notable optimizations we’ve introduced is the conditional use of the fused version of the AdamW optimizer. We check for its availability and use it if we’re running on a CUDA-enabled device. The use of the fused optimizer can lead to substantial performance gains, as it combines multiple operations into fewer kernel calls.

Performance Improvements with Fused AdamW

The use of the fused AdamW optimizer can make a significant difference in training time. By reducing the number of kernel calls, we streamline the optimization step, allowing for a more efficient training loop. Even small improvements in run time per step can lead to substantial time savings over the entire training process.

Consider the following terminal output, which illustrates the performance improvement:

step 41 | loss: 5.097683 | lr: 6.7345e-05 | dt: 94.10ms | tok/sec: 173350.73

...

step 44 | loss: 5.084924 | lr: 6.7345e-05 | dt: 94.17ms | tok/sec: 174163.40

After implementing the fused version of AdamW, we observe a reduction in the time per step from 94 milliseconds to 90 milliseconds. This optimization results from the introduction of fused Adam and the decision to apply weight decay only to two-dimensional parameters like embeddings and matrices involved in linear transformations.

Emphasizing the Importance of Weight Decay Selection

To reiterate the significance of our weight decay strategy, it’s worth noting that most of the model’s parameters undergo decay. This is a deliberate choice, as it’s primarily the embeddings and weight matrices in matrix multiplications that require regularization to prevent overfitting. On the other hand, biases and layer normalization parameters, which are fewer in number, do not experience weight decay, as applying decay to these can negatively impact the model’s learning capacity.

Here’s a comparative look at the parameter counts:

- Number of decayed tensors: 50 (most of the parameters)

- Number of non-decayed tensors: 98 (biases and layers norms)

This careful balance ensures that our model remains robust and generalizes well to new data, without compromising on the ability to learn complex patterns.

In summary, the configure_optimizers function is a foundational piece of our training pipeline, setting the stage for an efficient and effective optimization process. By leveraging the latest features available in optimization algorithms and being selective about which parameters undergo weight decay, we’re optimizing not just the model’s performance but also our training efficiency.

Learning Rate Scheduling and Optimization

Optimizing the learning rate schedule is a key aspect of training neural networks effectively. The get_lr function provided in the script is a crucial component of such a schedule. It defines three phases of learning rate adjustments: linear warmup, cosine decay, and a constant minimum learning rate.

Here’s the get_lr function as described:

def get_lr(it):

# 1) linear warmup for warmup_steps steps

if it < warmup_steps:

return max_lr * (it+1) / warmup_steps

# 2) if it > max_steps, return minimum learning rate

if it > max_steps:

return min_lr

# 3) in between, use cosine decay down to min learning rate

decay_ratio = (it - warmup_steps) / (max_steps - warmup_steps)

assert decay_ratio >= 0

coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))

return max_lr * coeff

In the training loop, we see the model’s optimizer configured with a specific weight decay and learning rate. An important note is made regarding the efficiency of different implementations of optimizers. Specifically, the text points out that the foreach and fused implementations typically offer greater speed than the traditional for-loop, single-tensor implementations. It also emphasizes the use of the foreach implementation as the default when the tensors reside on CUDA devices.

The following code snippet shows the training loop where the optimizer is used, and the learning rate is updated at each step:

optimizer = model.configure_optimizers(weight_decay=0.1, learning_rate=6e-4, device=device)

for step in range(max_steps):

t0 = time.time()

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

optimizer.zero_grad()

with torch.autocast(device_type=device, dtype=torch.bfloat16):

logits, loss = model(x, y)

loss.backward()

norm = torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

# determine and set the learning rate for this iteration

lr = get_lr(step)

for param_group in optimizer.param_groups:

param_group['lr'] = lr

optimizer.step()

t1 = time.time()

print(f'step {step+1}/{max_steps}, dt: {t1-t0:.2f}s, tok/sec: {int(y.numel()/(t1-t0))}')

From the terminal output, we can see the learning rate is dynamically adjusted, leading to changes in the loss over time:

step 35 loss=0.6972, lr=0.000751, dt: 0.93s, tok/sec: 181974.72

...

step 47 loss=0.4870, lr=0.000739, dt: 0.89s, tok/sec: 181277.02

Advanced Optimizer Configurations

The script further discusses the importance of fine-tuning the optimizer settings when dealing with pre-trained models. The add_param_group method is highlighted as a tool for updating the optimizer with new parameter groups, which is often necessary when different parts of the model are fine-tuned with varying learning rates.

In the context of model training, the script outlines the approach used for training GPT-3 models:

- Adam Optimizer Settings: The models are trained using the Adam optimizer with β1 = 0.9, β2 = 0.95, and ϵ = 10^-8.

- Gradient Clipping: The global norm of the gradients is clipped at 1.0 to prevent exploding gradients.

- Learning Rate Scheduling: A cosine decay is used for the learning rate, reducing it to 10% of its value over a vast number of tokens.

- Batch Size Scaling: The batch size is gradually increased linearly from a smaller value to the full value over billions of tokens, depending on the model size.

- Data Sampling: To minimize overfitting, data are sampled without replacement during training.

These strategies are complemented by other details like document packing for efficiency and the use of a special end-of-text token to denote document boundaries within sequences.

Evaluation of GPT-3 on NLP Tasks

The evaluation of GPT-3 on various natural language processing tasks reveals insights into its capabilities:

- Reading Comprehension: GPT-3’s performance varies significantly across different datasets, suggesting its adaptability to different answer formats.

- SuperGLUE Benchmark: GPT-3 is also assessed on the SuperGLUE benchmark, a standardized collection of datasets, to compare its performance against models like BERT and RoBERTa.

In summary, the script and the extracted content from the images offer a deep dive into the detailed configurations and considerations necessary for effectively training and evaluating large-scale language models like GPT-3. Optimizations in learning rate scheduling, optimizer configurations, and evaluation methodologies contribute to the model’s overall performance and its ability to generalize across a wide range of tasks.

Model Size and Learning Rate Adaptations

As we delve deeper into the nuances of transformer-based models, we recognize that various hyperparameters require fine-tuning to optimize performance. The relationship between the size of the model and the learning rate is particularly pivotal. Generally, larger networks are trained with slightly lower learning rates, and the batch size tends to increase alongside the model’s size.

The trade-off between computational resources and the optimal hyperparameters is a constant challenge in the field of deep learning. For instance, a batch size of 0.5 million may be ideal for some large networks, but it’s impractical for individuals or organizations with limited GPU capabilities. However, the goal remains to emulate the conditions that these hyperparameters provide.

Gradient Accumulation: A Solution for Limited Resources

One effective strategy to overcome resource limitations is gradient accumulation. This technique allows us to simulate large batch sizes on smaller GPUs by running multiple forward and backward passes before performing a parameter update. Here’s how it works:

# total desired batch size (0.5 million tokens)

total_batch_size = 524288 # roughly 2**19

# micro batch size (number of tokens processed in a single forward/backward pass)

micro_batch_size = 16

# sequence length

sequence_length = 1024

# calculate gradient accumulation steps

grad_accum_steps = total_batch_size // (micro_batch_size * sequence_length)

By setting a micro_batch_size and calculating the number of gradient accumulation steps (grad_accum_steps), we can process a more extensive set of tokens across several iterations before updating the model parameters. This allows for the simulation of larger batch sizes without exceeding GPU memory limits.

Implementing Gradient Accumulation in the Training Loop

Let’s now integrate the gradient accumulation strategy into our training loop. The following code snippet demonstrates how to adjust the training loop to accommodate this method:

# Configure the device for training

device = 'cuda' if torch.cuda.is_available() else 'cpu'

print(f'Using device: {device}')

# Seed setting for reproducibility

torch.manual_seed(1337)

if torch.cuda.is_available():

torch.cuda.manual_seed(1337)

# DataLoader configuration

train_loader = DataLoaderLite(B=micro_batch_size, T=sequence_length)

# Model configuration

model = GPT(GPTConfig(vocab_size=50304))

model.to(device)

model = torch.compile(model)

# Learning rate configuration

max_lr = 6e-4

min_lr = max_lr * 0.1

warmup_steps = 10

max_steps = 50

# Begin the training loop

for step in range(max_steps):

optimizer.zero_grad()

for _ in range(grad_accum_steps):

x, y = train_loader.next_batch()

x, y = x.to(device), y.to(device)

with torch.autocast(device_type=device, dtype=torch.bfloat16):

logits, loss = model(x, y)

loss.backward()

# Update model parameters after accumulating gradients

optimizer.step()

In the modified training loop, each micro_batch is processed through the forward and backward pass, gradients are accumulated, and after grad_accum_steps iterations, the parameters are updated.

Model Architectures Across Different Scales

When examining the architecture of models trained for this research, we note a range of model sizes, from 125 million parameters all the way up to the 175 billion parameters of GPT-3. Each of these models has been trained for a total of 300 billion tokens, showcasing the scalability of the transformer architecture.

The various models share a common structure, taken from GPT-2, which includes modified initialization, pre-normalization, and attention bias. However, some adaptations have been made, such as alternating dense and locally banded sparse attention patterns, which contribute to the models’ efficiency and performance.

Hyperparameters Across Model Sizes

The following table provides a summary of the learning hyperparameters for different model sizes:

- Small (125M parameters): Batch Size: 0.5M, Learning Rate: 6.0 x 10^-4

- Medium (350M parameters): Batch Size: 0.5M, Learning Rate: 3.0 x 10^-4

- Large (760M parameters): Batch Size: 0.5M, Learning Rate: 2.5 x 10^-4

- XL (1.3B parameters): Batch Size: 1M, Learning Rate: 2.0 x 10^-4

- 2.7B parameters: Batch Size: 1M, Learning Rate: 1.6 x 10^-4

- 6.7B parameters: Batch Size: 2M, Learning Rate: 1.2 x 10^-4

- 13B parameters: Batch Size: 2M, Learning Rate: 1.0 x 10^-4

- GPT-3 (175B parameters): Batch Size: 3.2M, Learning Rate: 0.6 x 10^-4

Each model has been chosen based on computational efficiency and performance, with all models using a context window (n_ctx) of 2048 and a bottleneck dimension (neck_size) of 128. The sizes and architectures of these models are chosen within a reasonably broad range to facilitate a variety of computational capabilities and research objectives.

Conclusion

In summary, the meticulous optimization of learning rates, batch sizes, and model architectures is paramount for the successful training of large-scale language models. Techniques such as gradient accumulation provide a pathway for those with limited computational resources to participate in this research. The findings from these diverse models offer valuable insights into the scalability and performance of transformer networks, serving as a foundation for future advancements in the field.

Customizing the Learning Rate Schedule

In the context of training large models, the learning rate schedule plays a crucial role in achieving convergence and fine-tuning the model’s performance. A popular approach is to use a cosine decay schedule for the learning rate, which starts high and gradually decreases following a cosine curve. This method is often preferred because it allows for large initial learning rates for faster convergence, while slowly fine-tuning the weights as training progresses.

Let’s examine the implementation of a cosine decay learning rate schedule:

import math

def get_lr(t, warmup_steps, max_steps, min_lr, max_lr):

# 3) Between the warmup and max_steps, use cosine decay down to min learning rate

decay_ratio = (t - warmup_steps) / (max_steps - warmup_steps)

assert 0 <= decay_ratio <= 1

coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio)) # coeff starts at 1 and decays to 0

return min_lr + (max_lr - min_lr) * coeff

Here, t represents the current timestep, warmup_steps is the number of steps during which the learning rate linearly increases to max_lr, and max_steps is the total number of training steps. The min_lr and max_lr are the minimum and maximum learning rates, respectively.

Optimizer Configuration and Learning Rate Application

With the learning rate schedule defined, we can now configure the optimizer and apply the learning rate dynamically at each step of training: