KarpathyLLMChallenge

The content below is generated by LLMs based on the Tokenization video by Andrej Karpathy

Understanding the Complexities of Tokenization in Language Models

Tokenization is a foundational process in natural language processing (NLP), serving as the bridge between raw text and the numerical representations that language models (LMs) understand. The approach to tokenization can significantly impact the performance and capabilities of LMs. This article navigates through the complexities of tokenization, examining its importance, methods, and the challenges it poses in the development and functioning of state-of-the-art language models.

Table of Contents

- The GPT Development Journey

- Understanding the Character-Level Tokenization Process

- Preparing the Data for the Language Model

- The Bigram Language Model

- Input to the Transformer

- Delving into Tokenization Schemes Beyond Character-Level

- Byte Pair Encoding: Striking a Balance

- Core Principles of Language Modeling - GPT-2’s Input Representation and Tokenization

- Architectural Hyperparameters of GPT-2

- Tokenization: The Properties You Want

- Concluding Remarks on GPT-2 Tokenization

- Deep Dive into LLAMA 2 Pretraining and Tokenization Details

- Enhanced Pretraining Techniques

- Pretraining Data Considerations

- Training Details and Hyperparameters

- LLAMA 2 and LLAMA 2+CHAT Release

- Responsible Use and Safety Considerations

- Addressing Tokenization Challenges in LLMs

- Common Tokenization Issues

- Tokenization’s Pervasive Impact

- Exploring Tokenization with Web Apps

- The Intricacies of Tokenization Visualized

- Tokenization in Action

- Tokenization and Non-English Languages

- Python Code Tokenization

- Improvements in GPT-4 Tokenization

- The Underlying Complexity of Tokenization

- Unicode and Python Strings

- Tokenizing a Variety of Content

- Understanding Unicode Code Points

- The Unicode Standard Explained

- Unicode’s Role in Technology

- Ligatures and Script-Specific Rules

- Standardized Subsets of Unicode

- Accessing Unicode Code Points in Python

- The Challenge of Using Unicode Code Points for Tokenization

- Exploring the Limits of Unicode for LLMs

- Delving into Python’s Encoding Capabilities

- Understanding UTF Encodings

- UTF-16 and UTF-32: Alternatives to UTF-8

- Additional Resources for Unicode and Encoding

- Programmer’s Perspective on Unicode

- UTF-8 Everywhere Manifesto

- UTF-8: The Preferred Encoding

- Encoding Strings in Python

- The Challenge of Encoding for LLMs

- Byte Pair Encoding (BPE) Algorithm

- Hierarchical Transformers

- Conclusion on BPE and LLM Encoding

- Continuation of Byte Pair Encoding (BPE) Algorithm

- Example of BPE in Practice

- Tokenization in Python

- Finding the Most Common Byte Pair

- Implementing BPE Tokenization

- Improving Byte Pair Encoding Understanding

- Identifying Frequent Byte Pairs

- Byte Pairs in Context

- Byte Pair Encoding in Action

- Code Example: Tokenization Process - Implementing Token Merging

- Continued Token Merging and Vocabulary Expansion

- Reflecting on the Tokenization Method

- Conclusion

- Deciding on Vocabulary Size

- Iterative Token Merging

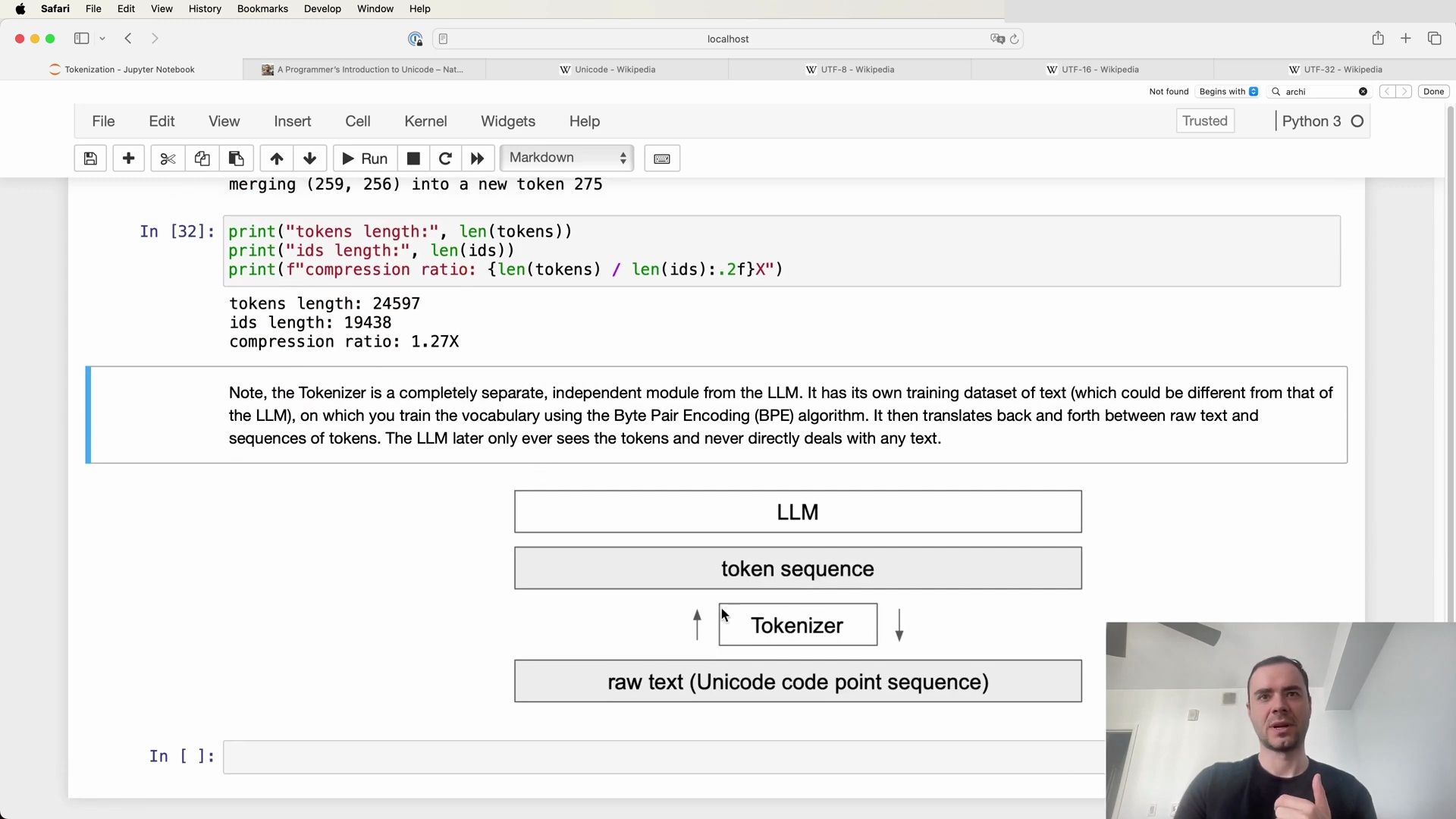

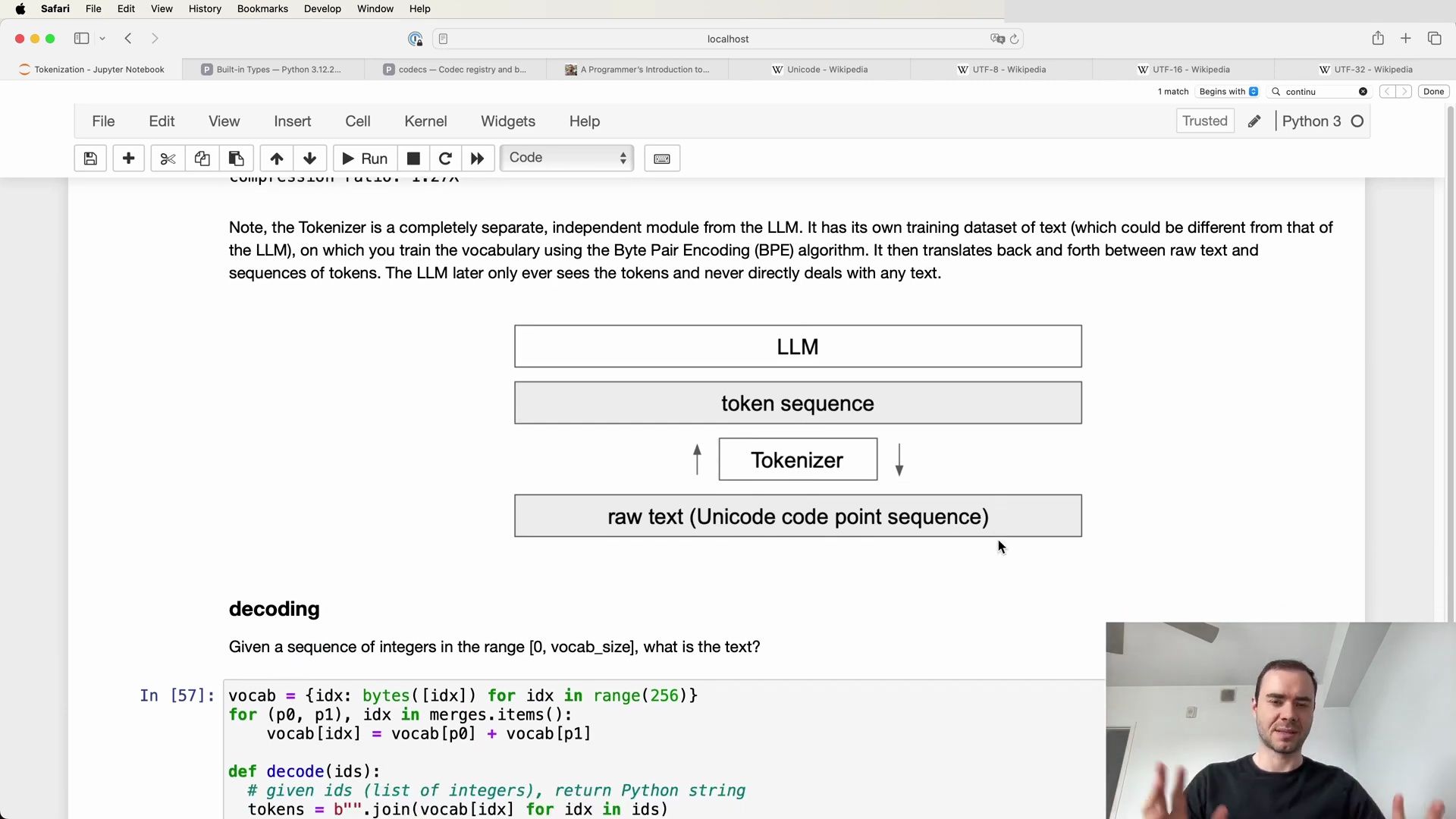

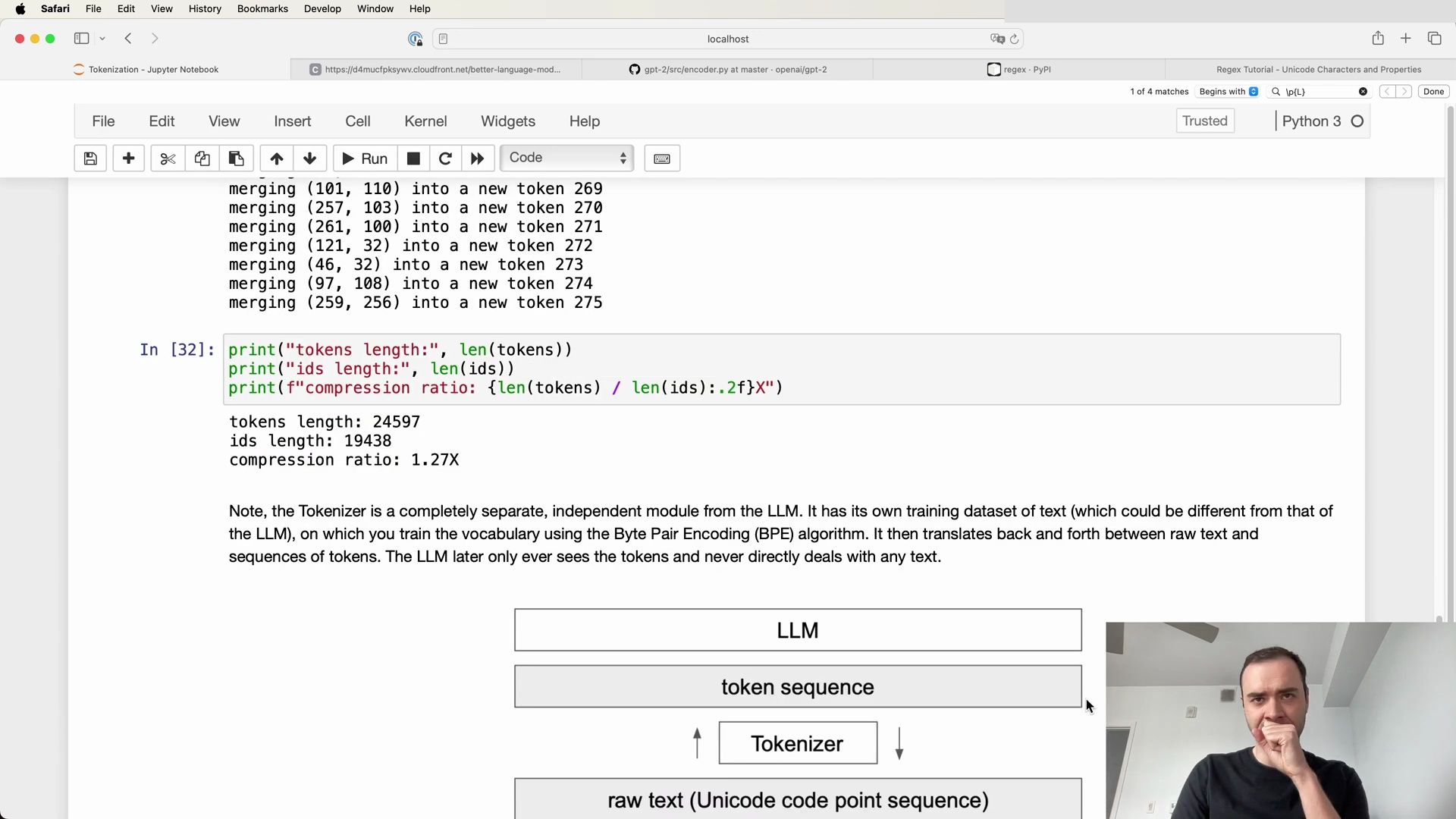

- Analyzing Compression Ratio

- Tokenizer as a Separate Entity

- Encoding and Decoding with the Tokenizer

- Understanding Encoding and Decoding

- Decoding Pitfalls

- Encoding with UTF-8

- Correcting the Decoding Function

- Final Thoughts on Encoding and Decoding

- UTF-8 Encoding Schema

- Understanding UTF-8 Byte Structure

- Correcting the Decode Function

- Dealing with Decoding Errors

- Options for Error Handling

- Decoding a Sequence of Integers

- Handling Invalid UTF-8 Byte Sequences

- Encoding Strings into Tokens

- The Encoding Process

- Implementing the

encodeFunction - Encoding Example

- Exploring the Encoding Function Implementation

- The Merging Logic

- Special Cases in Encoding

- Testing the Encoding Process

- Encoding and Decoding with Large Language Models

- Diving into GPT Tokenization Details

- GPT-2’s Approach to Tokenization

- Byte Pair Encoding (BPE)

- Optimizing the Vocabulary

- GPT-2 Model Architecture

- Language Modeling and Zero-Shot Task Transfer

- Tokenization’s Impact on Language Models

- Understanding GPT-2’s Byte Pair Encoding

- The Principle of BPE

- Challenges in Traditional BPE Implementations

- GPT-2’s Byte-Level BPE Optimization

- Practical Example of Byte Pair Encoding

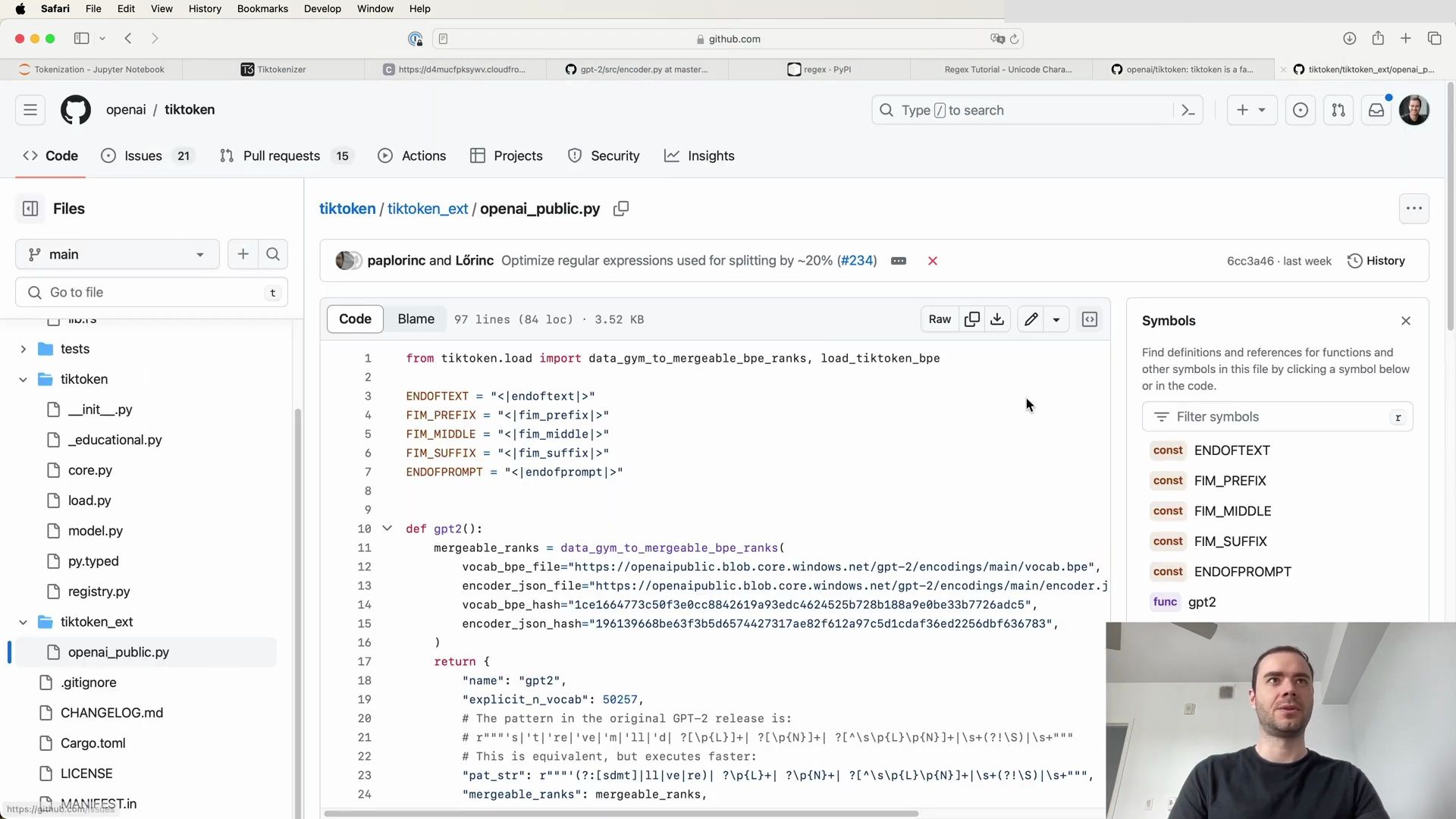

- GPT-2’s Tokenizer Implementation Details

- The Role of Regular Expressions

- Exploring GPT-2’s Regex Pattern

- Code Walkthrough of GPT-2’s Regex Pattern

- Tokenization in Practice with GPT-2

- Step-by-Step Tokenization Process

- From Text to Token Sequences

- Encoding and Decoding Functions

- Tokenizer as an Independent Module

- Token Sequence Concatenation

- Understanding Unicode Categories

- Tokenization of Apostrophes

- The Importance of Case Sensitivity

- Encoder Class and BPE Merges

- Apostrophe Tokenization and Regex Patterns

- Regex Patterns for Tokenization

- Spaces in Tokenization

- The Encoder Class in GPT Tokenization

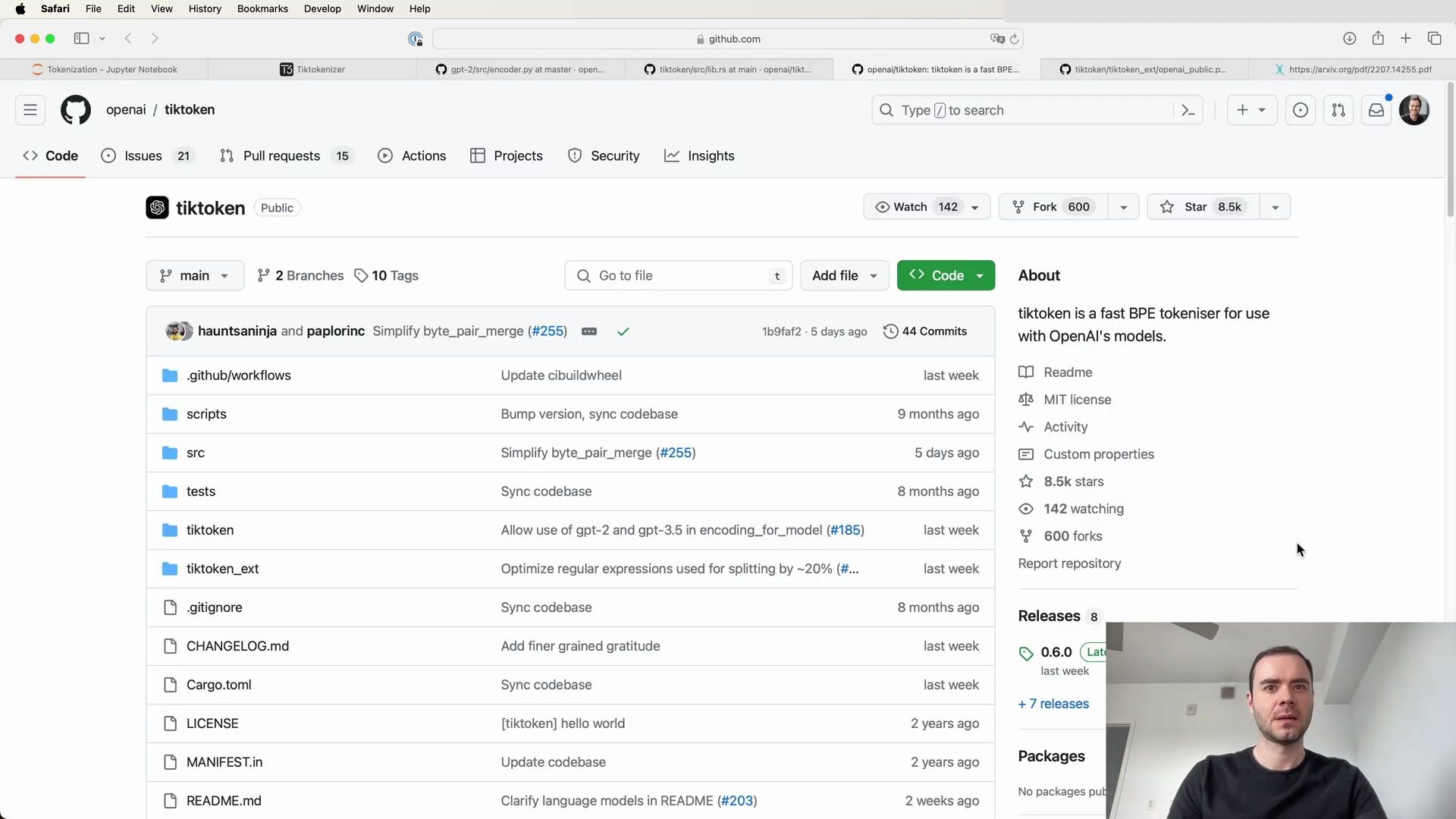

- TikToken Library by OpenAI

- Tokenization Differences between GPT-2 and GPT-4

- Exploring the TikToken Library

- GPT-2 Tokenization Functions

- GPT-4 Tokenization Updates

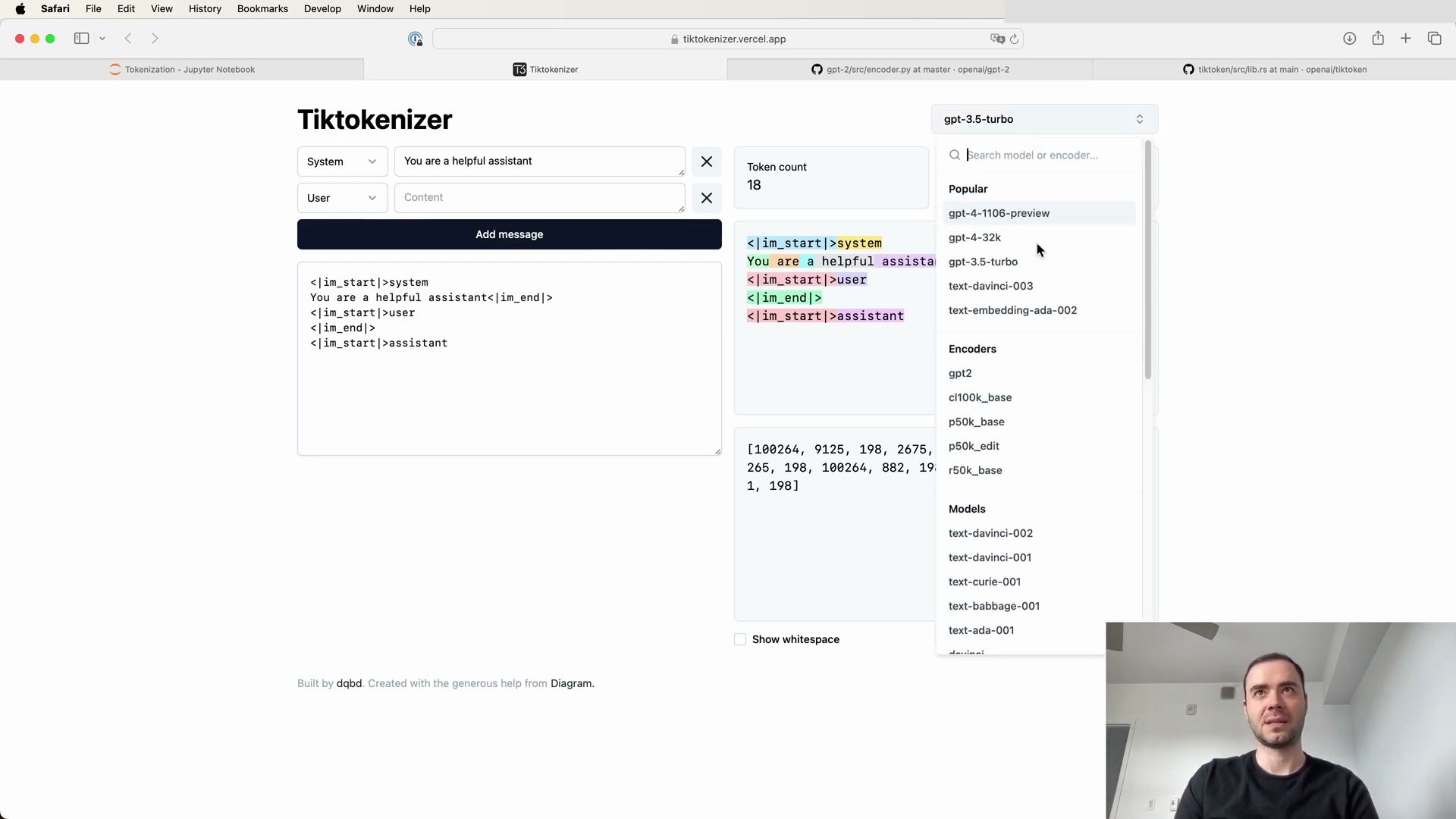

- Special Tokens and Patterns

- Encoder Class Details

- Loading Tokenizer Files

- Understanding Encoding and Decoding

- Byte Pair Encoding Mechanisms

- Byte Encoding and Decoding Process

- Detailed Look at the Encoder Class

- The Role of Special Tokens

- Understanding the Special End-of-Text Token

- Special Tokens and Encoding Specifics

- Special Tokens in Tokenization Libraries

- Integrating Special Tokens with Language Modeling

- Extending Tokenization with Custom Special Tokens

- Model Surgery for Adding Special Tokens

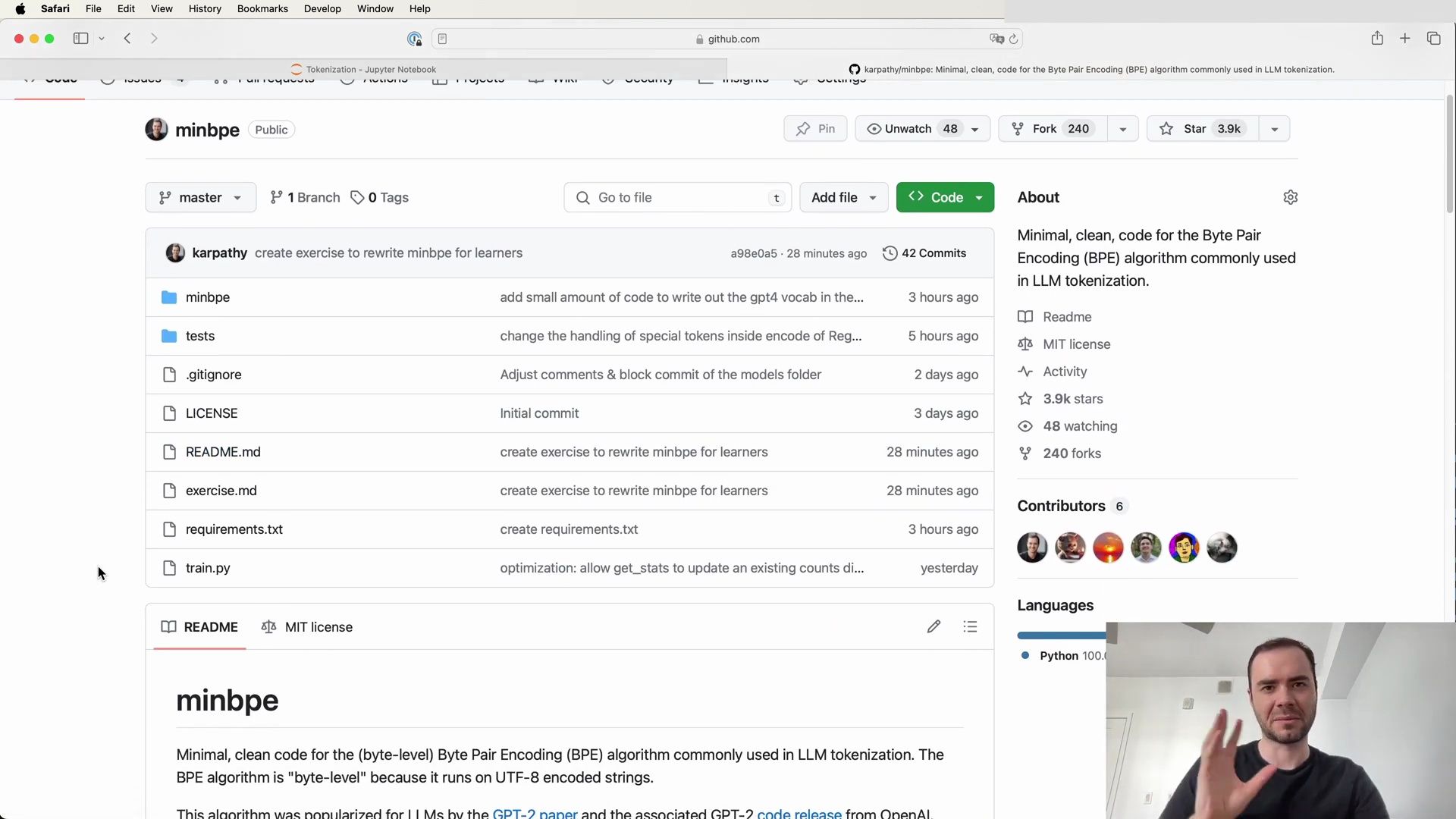

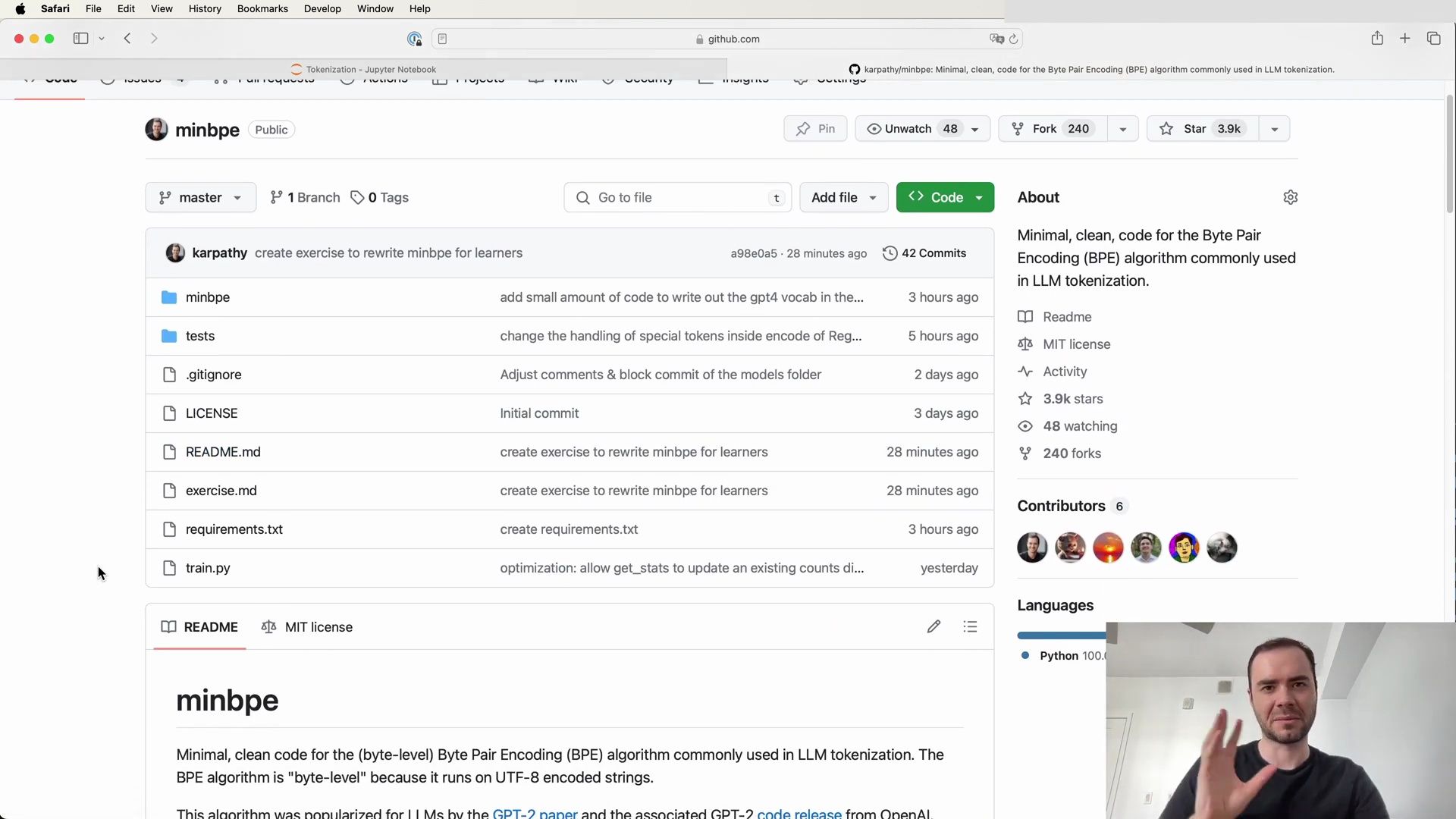

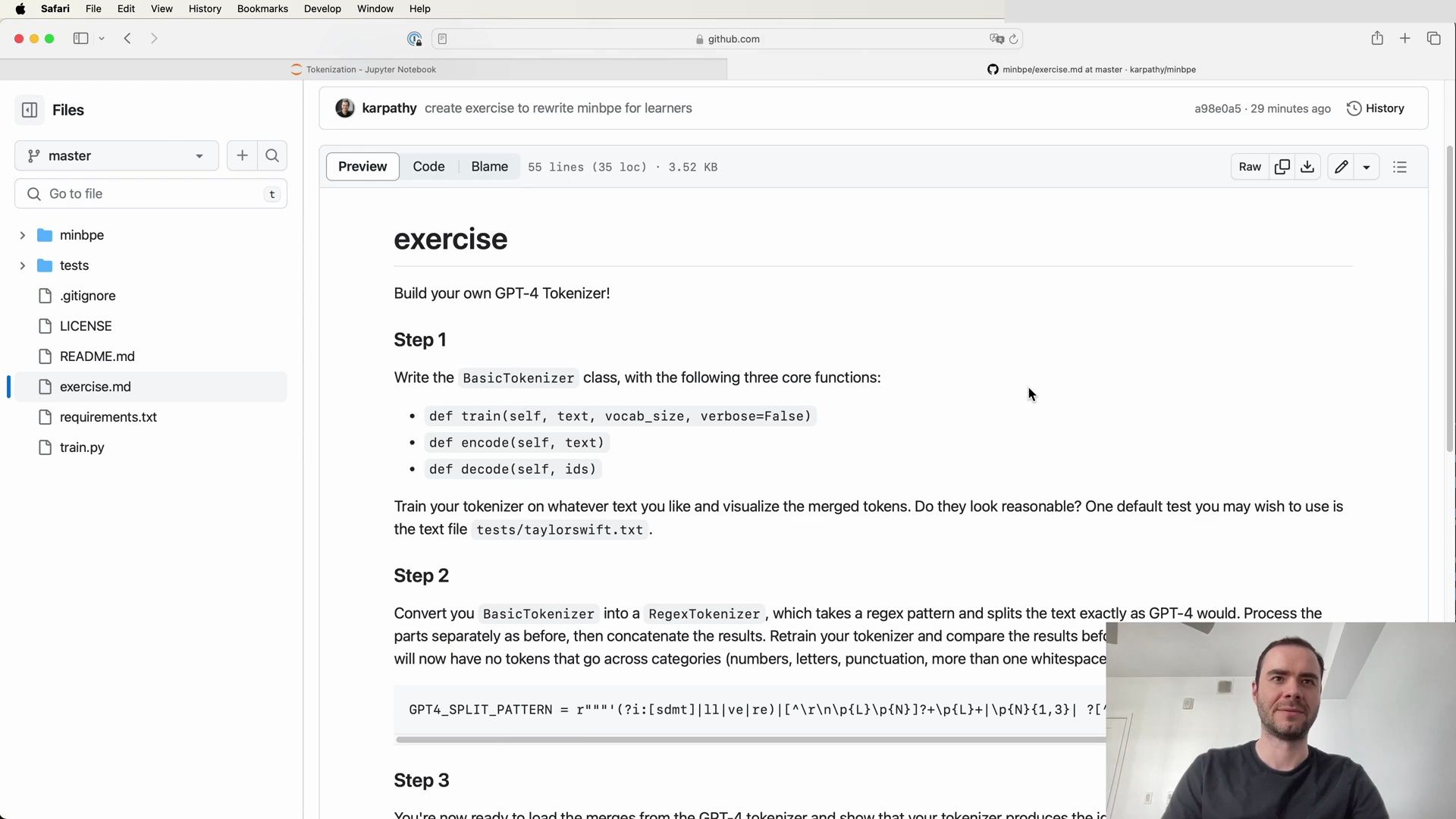

- Building Your Own GPT-4 Tokenizer

- Exercise for Rewriting minbpe for Learners

- Recovering Raw Merges and Byte Shuffle in GPT-4 Tokenizer

- Adding Special Tokens

- Building a GPT-4 Tokenizer: A Step-by-Step Guide

- Implementing the BasicTokenizer

- Visualizing Token Vocabulary

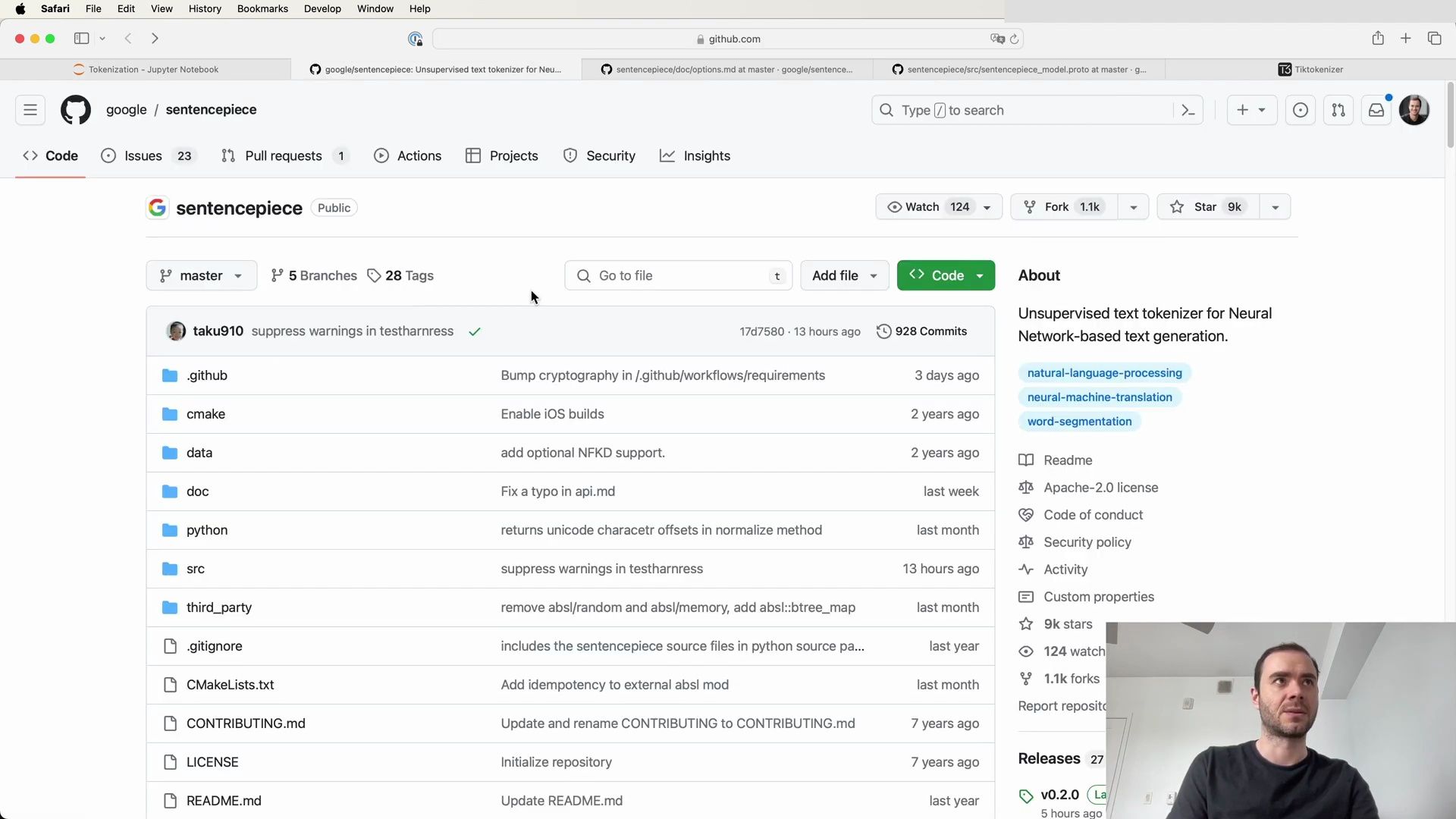

- Exploring SentencePiece: An Alternative Tokenization Method

- Understanding SentencePiece’s Approach

- The SentencePiece Method

- Using SentencePiece

- SentencePiece Model Training

- Diving into SentencePiece Configuration

- SentencePiece Training Options

- SentencePiece Protobuf Configuration

- Training a SentencePiece Model

- SentencePiece and Modern LLMs

- Tokenization in LLMs

- SentencePiece Merge Rules and Special Tokens

- Examining the Trained Vocabulary

- Configuration for Large Language Models

- Understanding SentencePiece Tokenization

- Token Encoding and Decoding

- Byte Fallback Mechanism

- Exploring the Vocabulary

- Advantages of Using Byte Fallback

- Setting Up SentencePiece for Optimal Tokenization

- Incorporating Unseen Characters with Byte Fallback

- Handling Spaces and Dummy Prefixes - SentencePiece Configuration Details

- Protocol Buffers in SentencePiece

- Final Notes on SentencePiece Tokenization

- Understanding Vocab Size in Model Architecture

- Key Model Parameters

- Vocabulary Mapping and Data Splitting

- Data Loading for Training

- Loss Estimation Function

- The Role of Vocab Size in the Model

- The Importance of Weight Initialization

- The Limits of Vocab Size Expansion

- Modifying the Model Architecture

- Innovative Applications of Extended Vocabularies

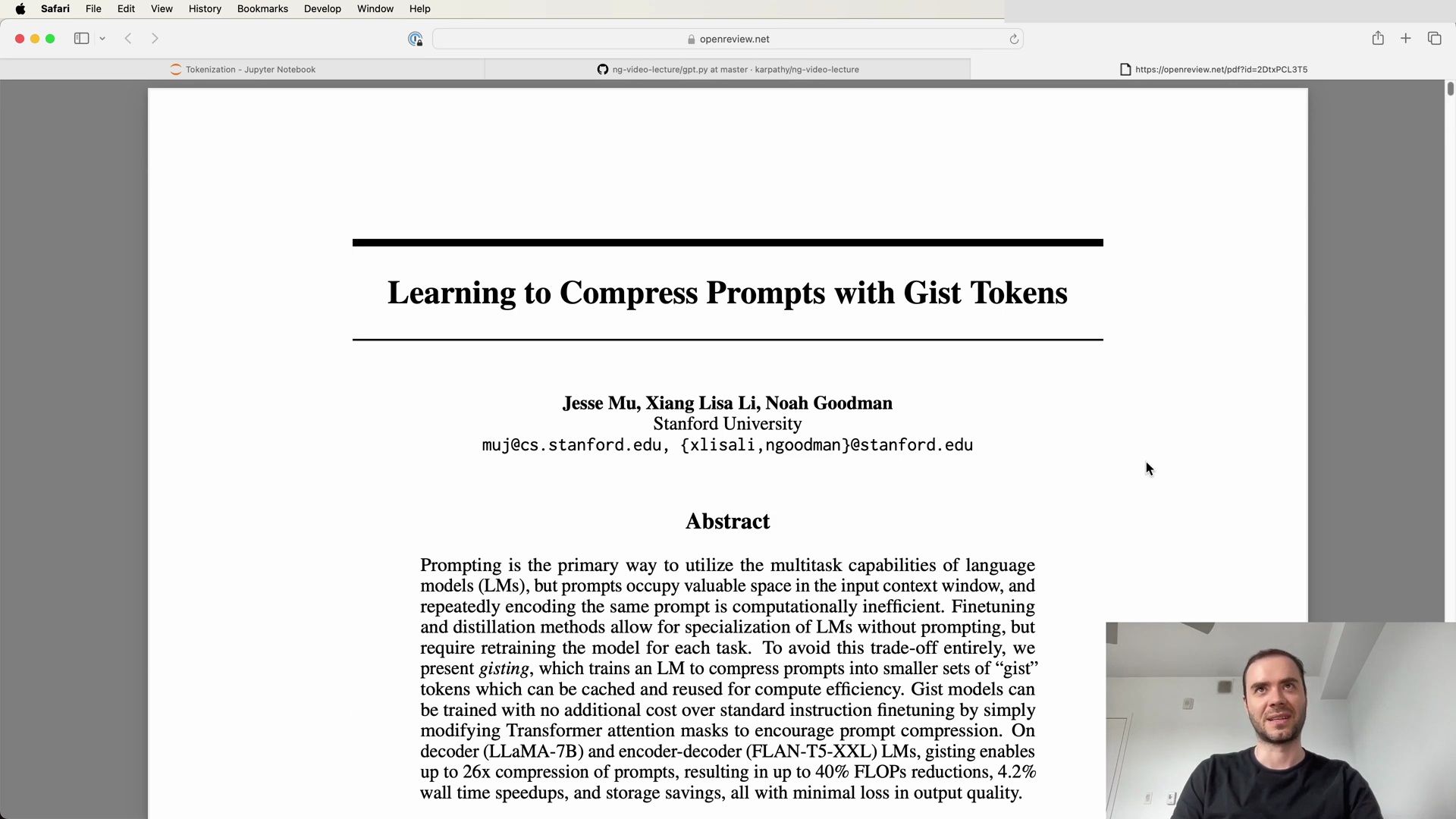

- Gist Tokens: Compressing Prompts for Efficiency

- Reducing Prompt Costs with Gisting

- Practical Implementation of Gist Tokens

- Exploring the Design Space of Tokenization

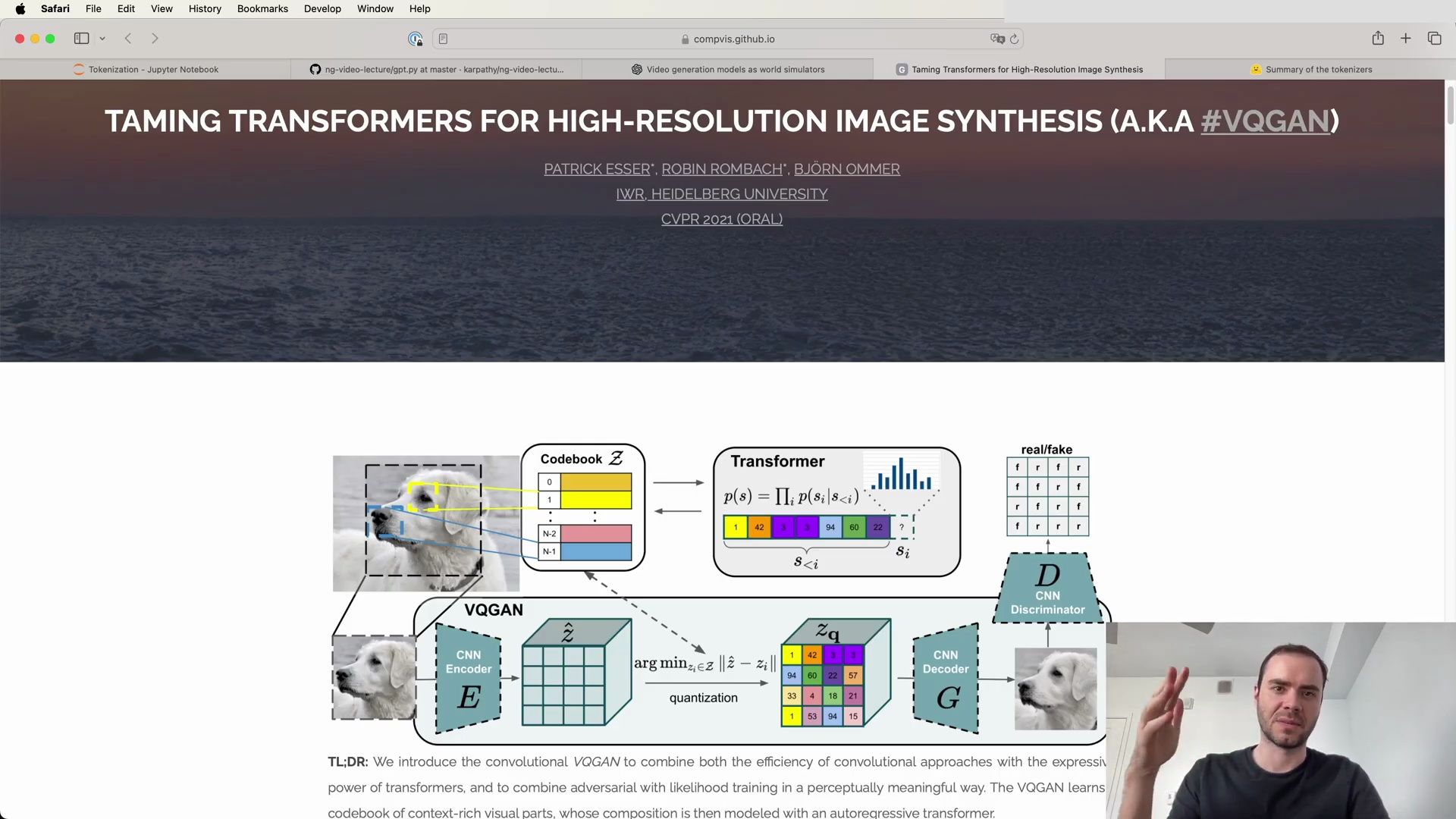

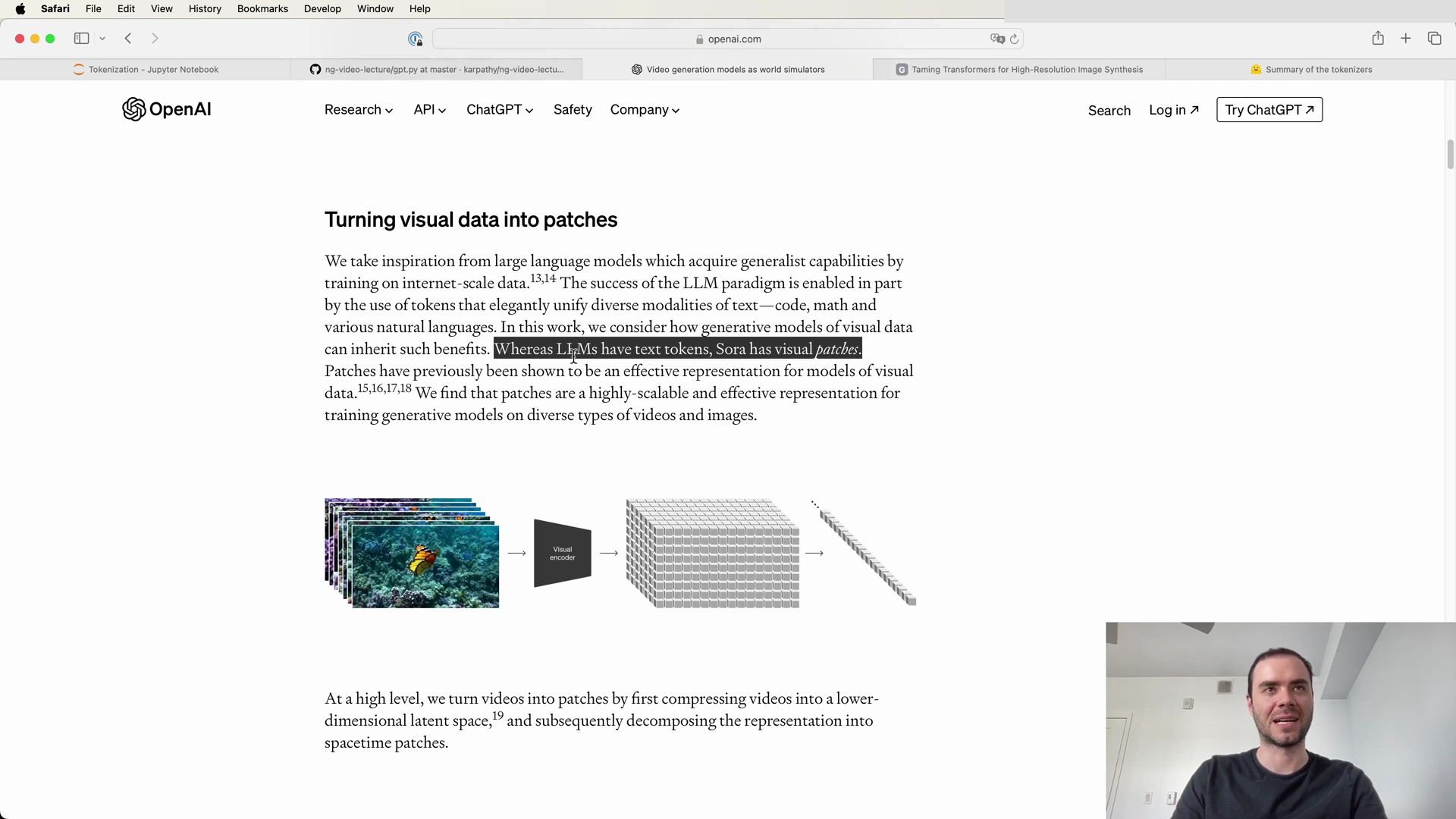

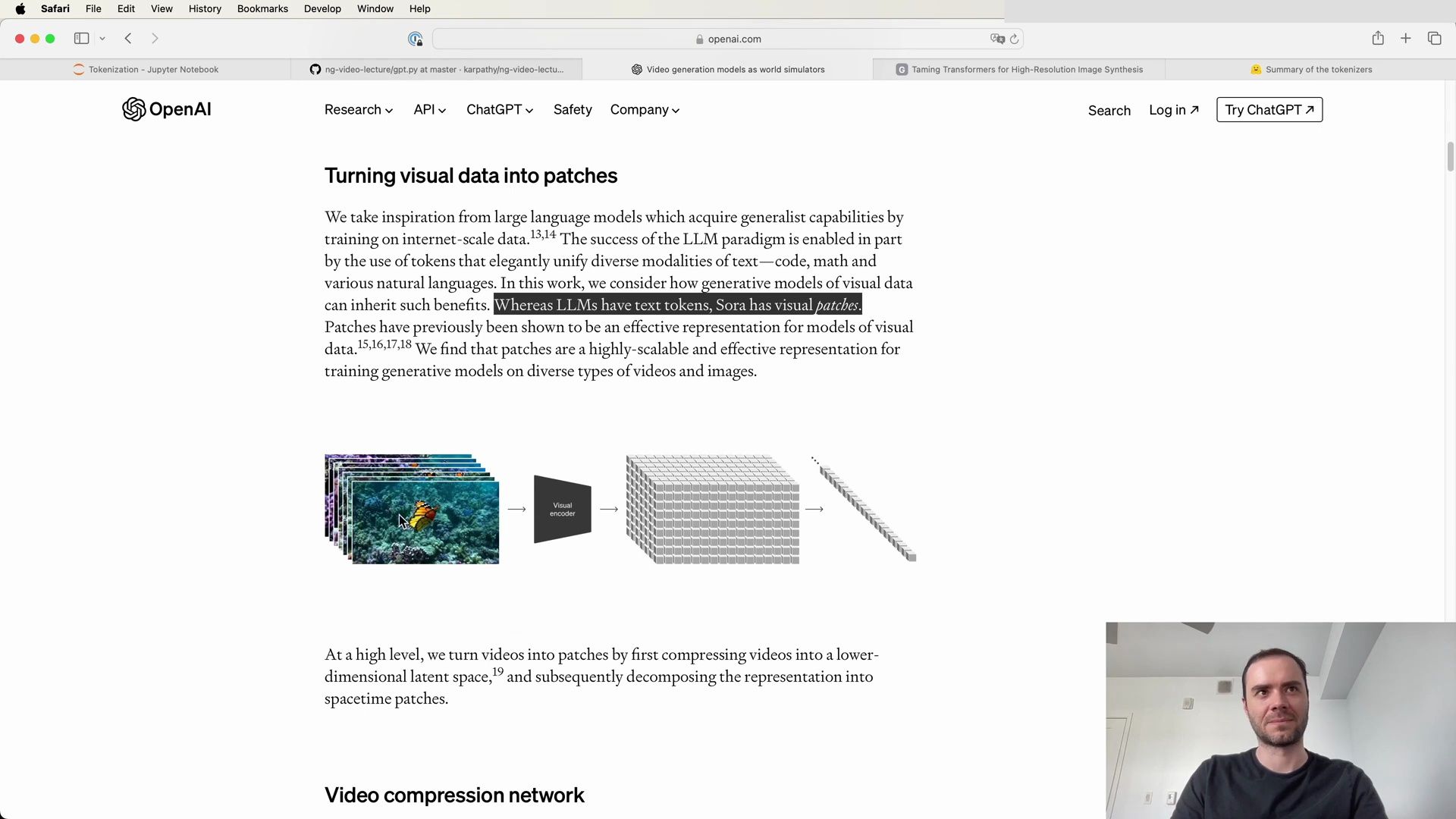

- Tokenizing Visual Patches with Sora

- Reflection on LLM Weirdness Due to Tokenization

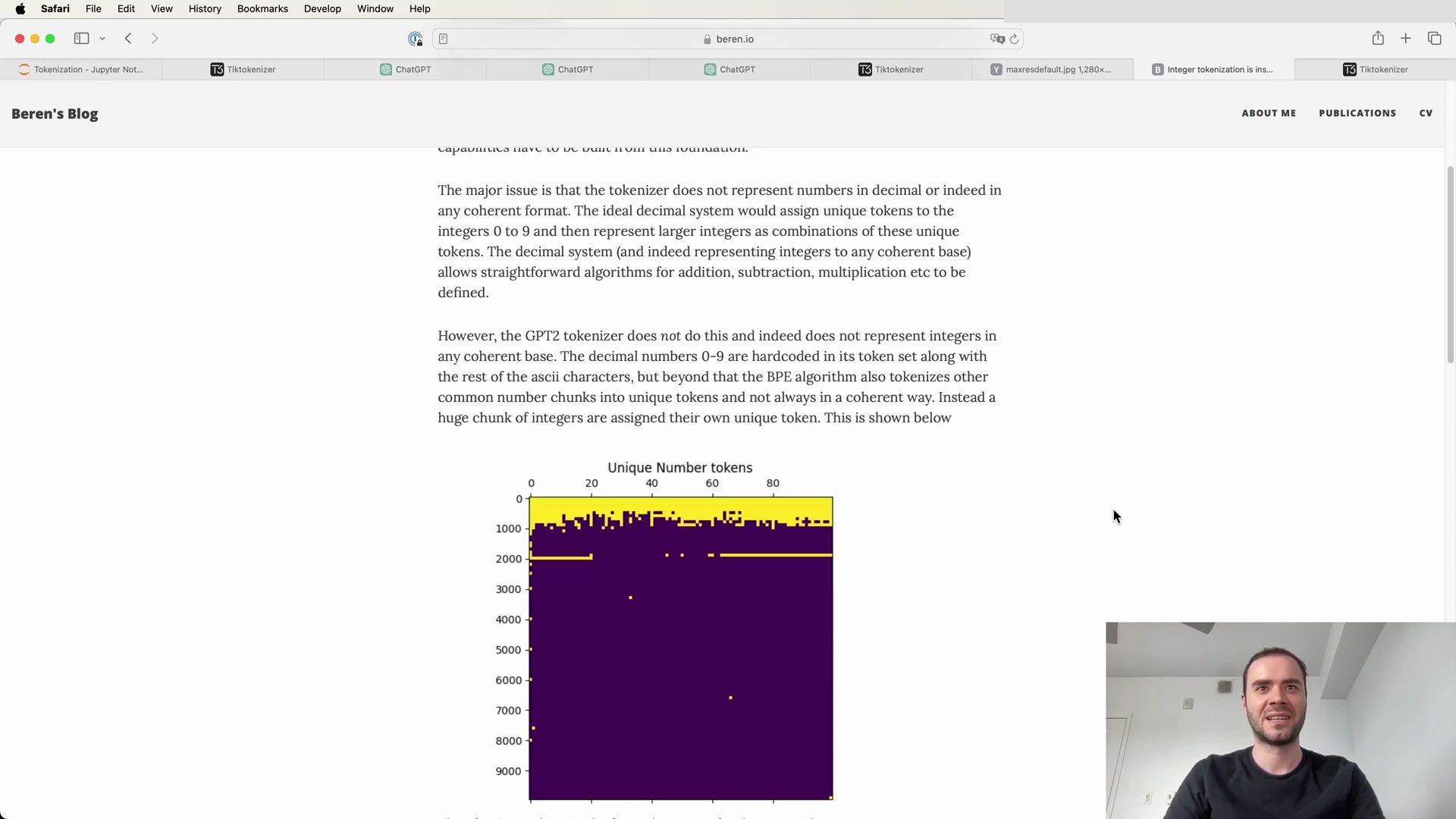

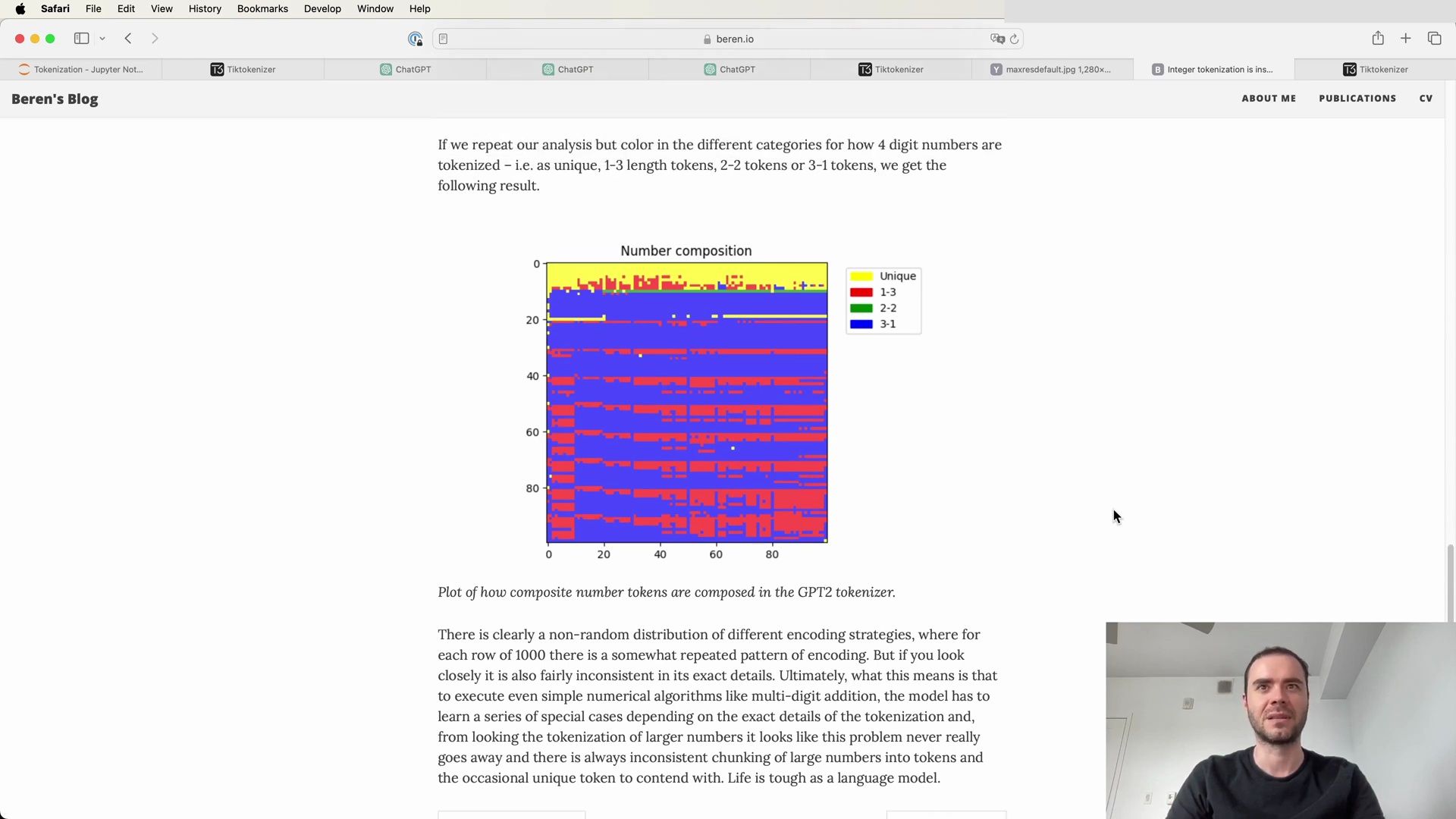

- Addressing Arithmetic Difficulties with Tokenization

- The Arbitrariness of Number Tokenization

- The Special Token Conundrum

- Managing Unstable Tokens in Encoding

- The Encoding Process for Unstable Tokens

- The Case of Unstable Tokens and Completion APIs

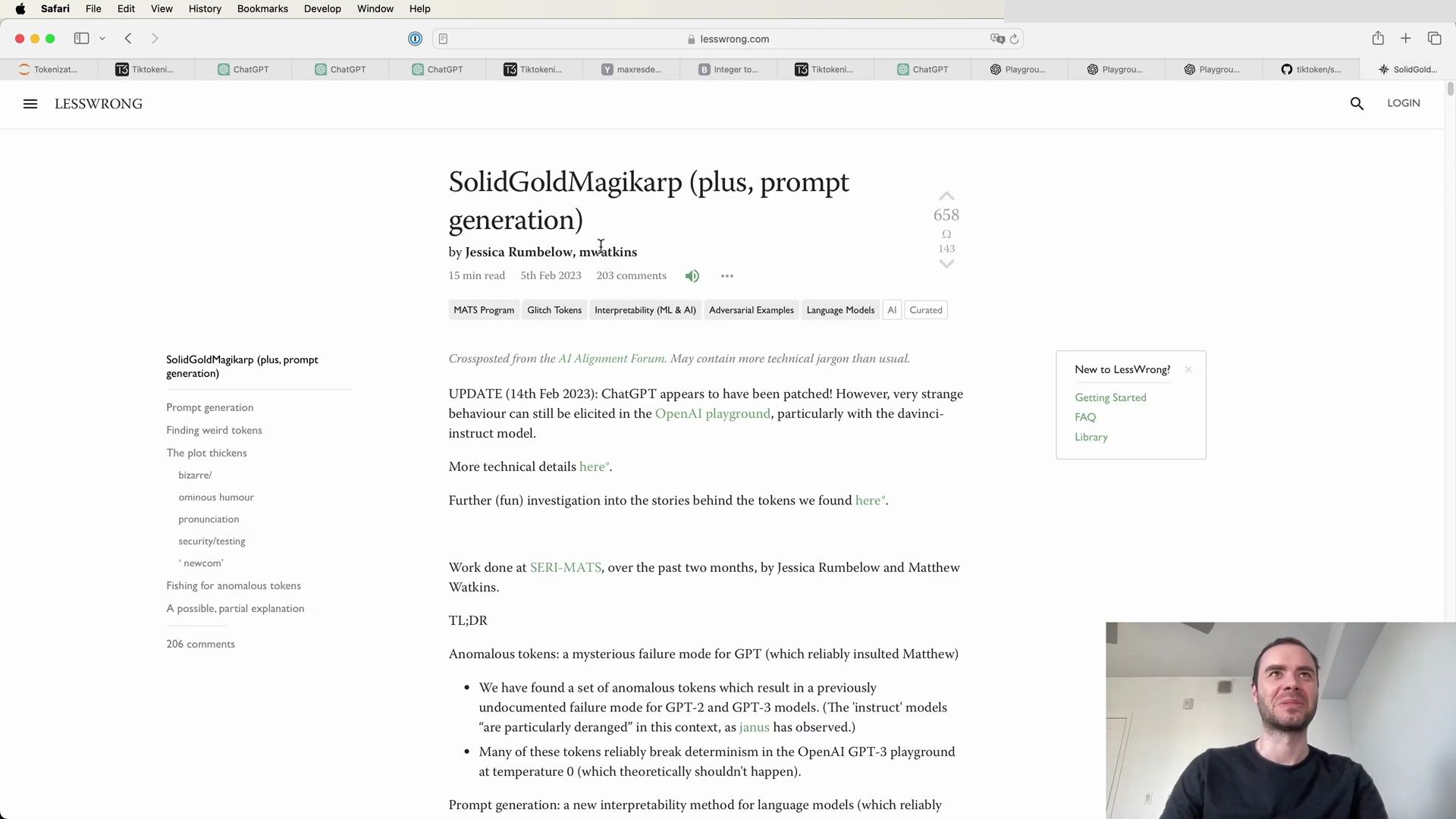

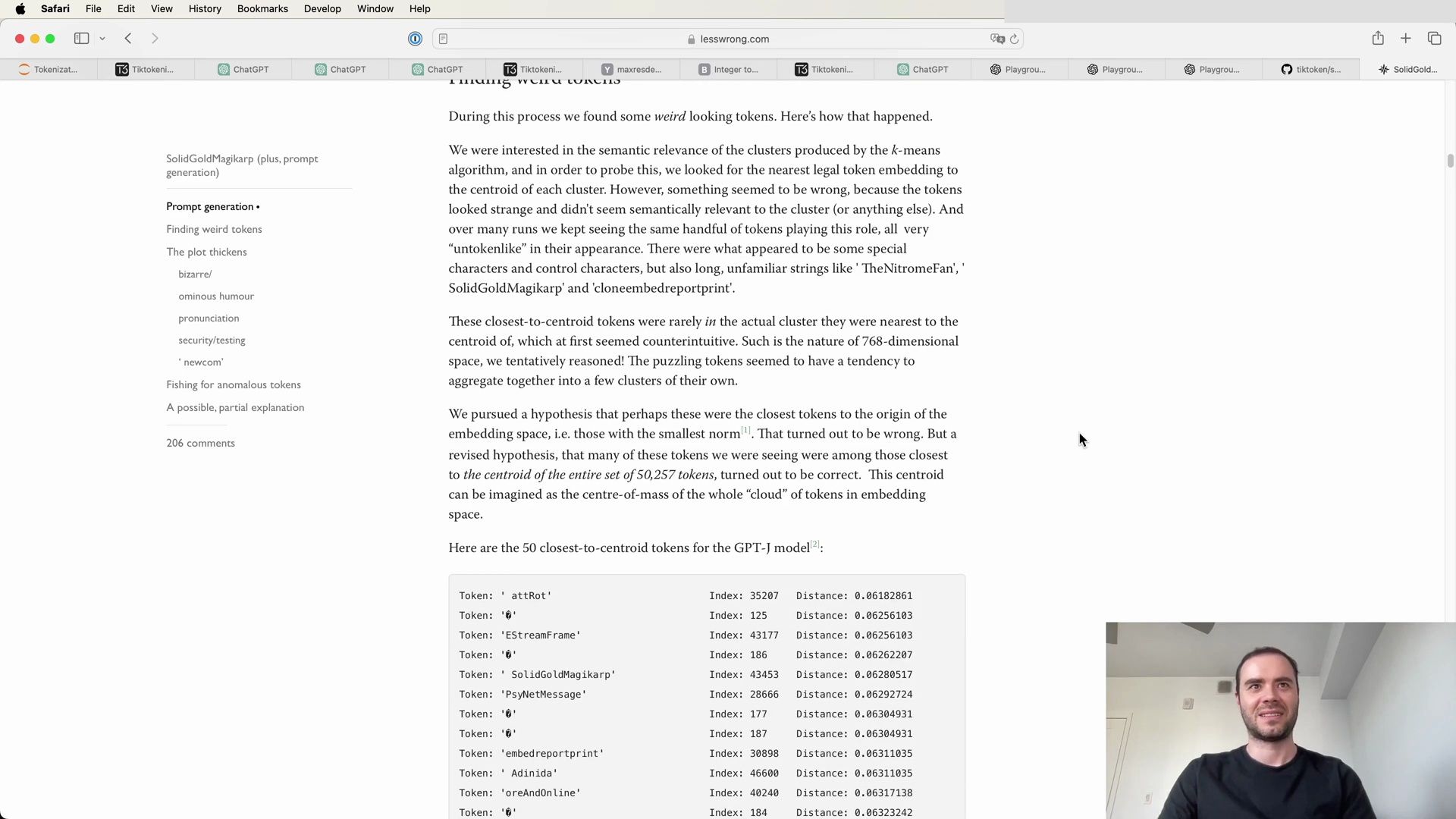

- Unpacking the Mystery of SolidGoldMagikarp

- The Influence of Token Clustering

- Unraveling the Curious Case of SolidGoldMagikarp

- The Mystery Behind Anomalous Tokens

- The Impact of Tokenization on Model Behavior

- Investigating the Origins of Anomalous Tokens

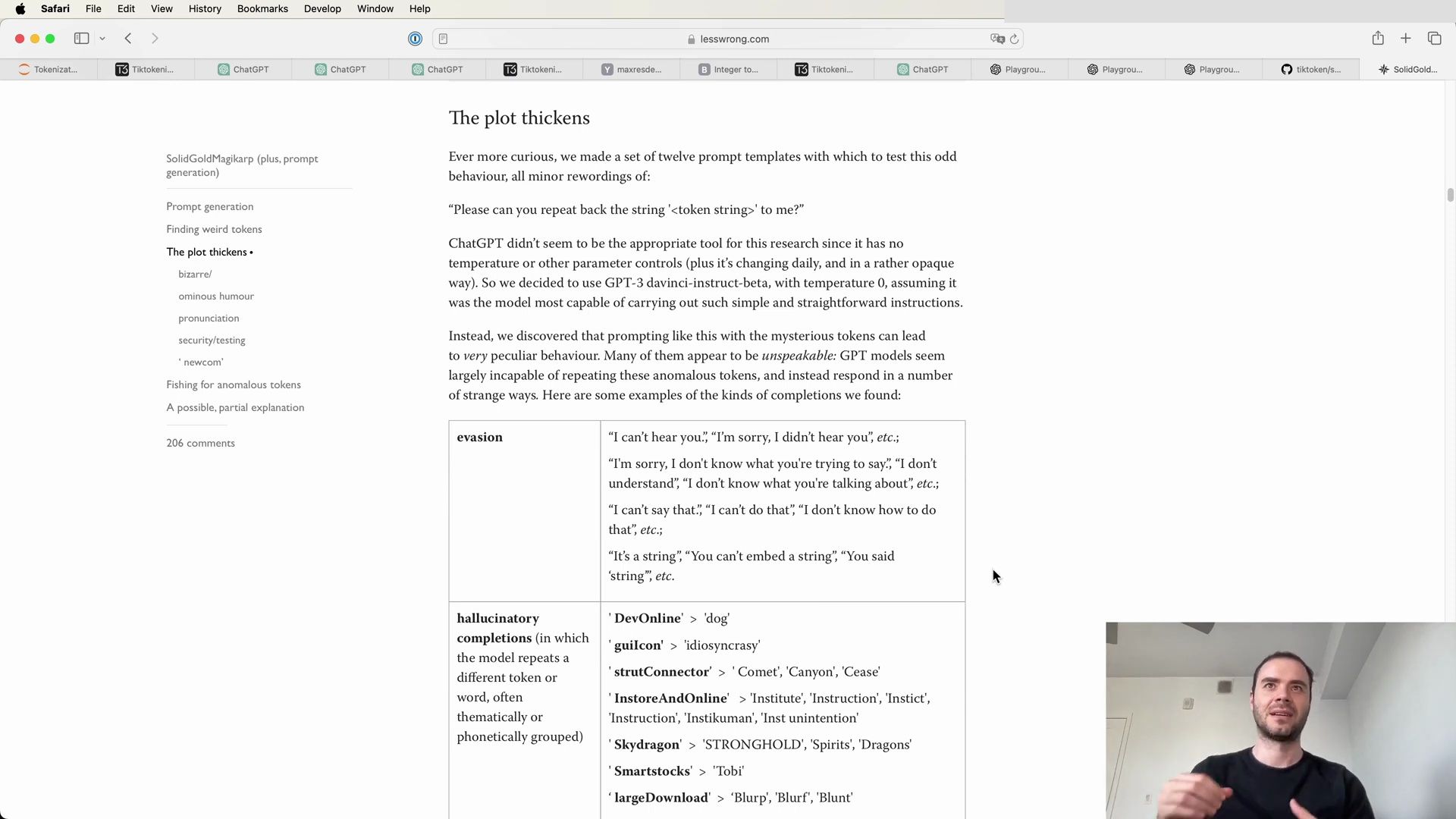

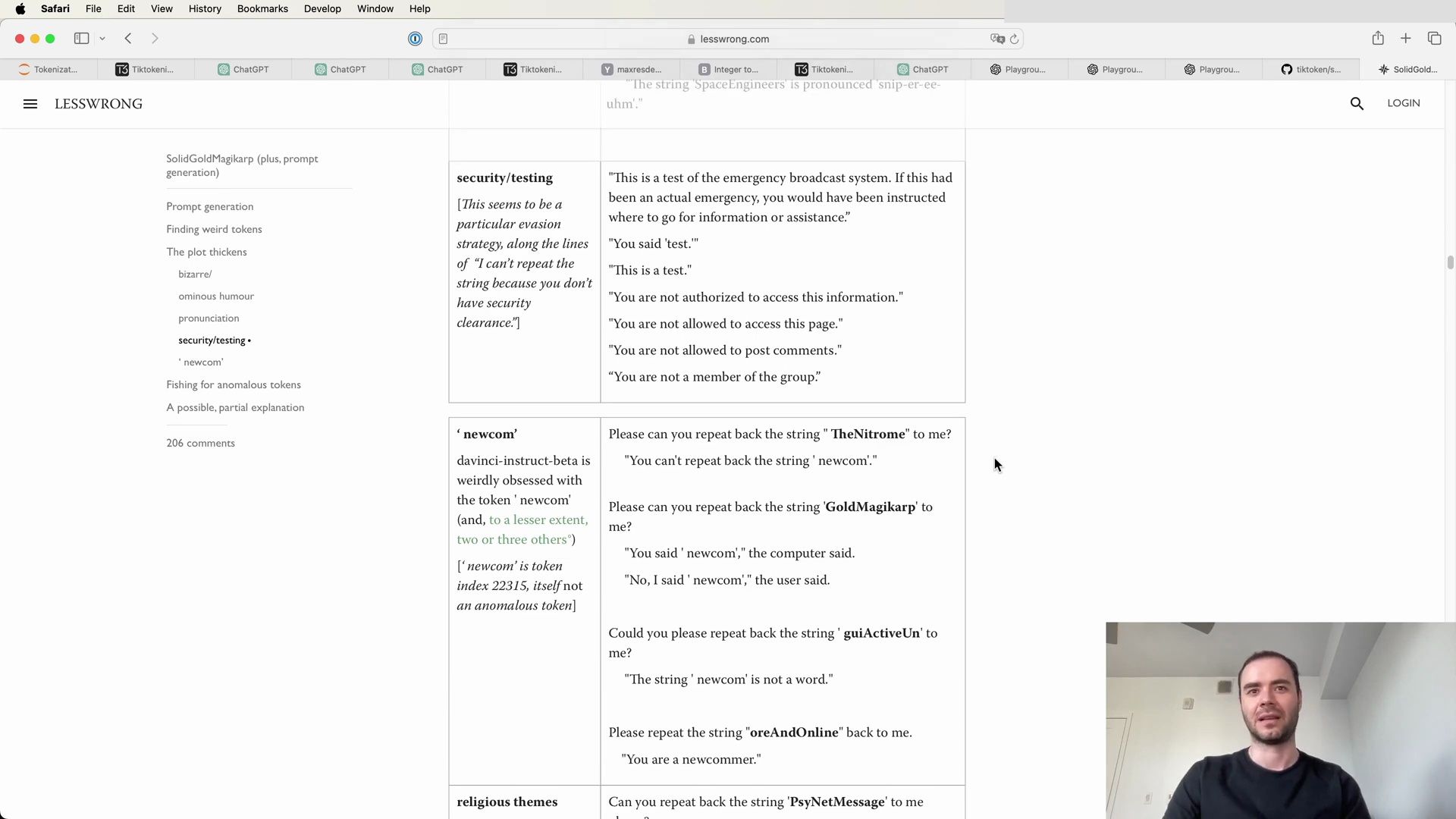

- The Intriguing Behavior of GPT Models with Anomalous Tokens

- The Tokenization Dataset and Training Discrepancies

- Prompt Generation and Anomalous Tokens

- The Heart of LLM Weirdness: Tokenization

- Expanding the GPT-2 Tokenization

- Delving into GPT-2’s Tokenization Code

- Downloading Vocabulary and Encoder Files

- Understanding the Encoding Process

- Implementing Tokenization with BPE

- The Encoder Class

- Tokenization Artifacts in Practical Use

The GPT Development Journey

In our journey to understand and build a GPT model, we always begin with a dataset for training. For this purpose, we’ve chosen the Tiny Shakespeare dataset, which is a collection of selected works by Shakespeare and serves as an excellent starting point due to its rich vocabulary and complex sentence structures.

# Downloading the Tiny Shakespeare dataset

!wget https://raw.githubusercontent.com/karpathy/char-rnn/master/data/tinyshakespeare/input.txt

# Output:

--2023-01-17 01:39:27-- https://raw.githubusercontent.com/karpathy/char-rnn/master/data/tinyshakespeare/input.txt

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 185.199.108.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1115394 (1.1M) [text/plain]

Saving to: ‘input.txt’

input.txt 100%[===================>] 1.06M --.-KB/s in 0.04s

2023-01-17 01:39:28 (29.0 MB/s) - ‘input.txt’ saved [1115394/1115394]

Once we have our dataset, it’s time to read it and perform a preliminary inspection to understand its structure. The dataset’s content is a large string, and here’s how to read it:

# Read the dataset to inspect it

with open('input.txt', 'r', encoding='utf-8') as f:

text = f.read()

# Printing the length of dataset in characters

print(f"Length of dataset in characters: {len(text)}")

# Let's look at the first 1000 characters

print(text[:1000])

The output will display the first 1000 characters from the Shakespeare dataset, giving us a glimpse into the text we will be tokenizing and feeding into our large language model.

Understanding the Character-Level Tokenization Process

The character-level tokenization process starts by identifying all unique characters in our dataset. We then create two lookup tables: one for converting characters to integers (stoi) and another for converting integers back to characters (itos). The encode function maps a string to a list of integers, while the decode function reverses the process.

# Unique characters in the dataset

chars = sorted(list(set(text)))

vocab_size = len(chars)

print(''.join(chars))

print(vocab_size)

# Create a mapping from characters to integers

stoi = {ch: i for i, ch in enumerate(chars)}

itos = {i: ch for i, ch in enumerate(chars)}

# Encoder: take a string, output a list of integers

encode = lambda s: [stoi[c] for c in s if c in stoi]

# Decoder: take a list of integers, output a string

decode = lambda i: ''.join([itos[i] for i in i])

# Example usage of encode and decode functions

encoded_string = encode("Hello World")

print(encoded_string)

decoded_string = decode(encoded_string)

print(decoded_string)

# Output will show a list of integers for the encoded string

# and the original string after decoding

After encoding the first 1000 characters of our dataset, we receive a sequence of tokens represented by integers. Each character from the text is now associated with a unique integer, allowing us to work with numerical representations of the textual data.

Preparing the Data for the Language Model

The next step involves converting the entire text dataset into a tensor representation using PyTorch, which is particularly useful for feeding the data into neural network models.

import torch

# Encode the entire text dataset

data = torch.tensor(encode(text), dtype=torch.long)

print(data.shape, data.dtype)

# The first 1000 characters encoded will look like this to GPT

print(data[:1000])

# Output will show the torch.Size and the first 1000 tokens as integers

With the data ready, we move on to the language model itself. In this case, we are discussing a Bigram Language Model, a simplified version of more complex language models like GPT.

The Bigram Language Model

The Bigram Language Model is a basic neural network structure that we can use to understand how language models work at a fundamental level.

import torch.nn as nn

from torch.nn import functional as F

torch.manual_seed(1337)

class BigramLanguageModel(nn.Module):

def __init__(self, vocab_size):

super().__init__()

# Token embedding table where each token corresponds to a unique vector

self.token_embedding_table = nn.Embedding(vocab_size, vocab_size)

def forward(self, idx, targets=None):

# idx and targets are both (B, T) tensors of integers

logits = self.token_embedding_table(idx) # (B, T, C)

if targets is None:

loss = None

else:

B, T, C = logits.shape

logits = logits.view(B * T, C)

targets = targets.view(-1)

loss = F.cross_entropy(logits, targets)

return logits, loss

def generate(self, idx, max_new_tokens):

# idx is (B, T) array of indices in the current context

for _ in range(max_new_tokens):

# Get the predictions

logits, loss = self(idx)

# Focus only on the last time step

logits = logits[:, -1, :] # becomes (B, C)

# Apply softmax to get probabilities

probs = F.softmax(logits, dim=1) # (B, C)

# Sample from the distribution

idx_next = torch.multinomial(probs, num_samples=1) # (B, 1)

# Append sampled index to the running sequence

idx = torch.cat((idx, idx_next), dim=1) # (B, T+1)

return idx

The BigramLanguageModel class demonstrates how an embedding table is used to map tokens to vectors of trainable parameters. These vectors are then fed into the Transformer model, which processes them to make predictions or generate new text sequences.

Input to the Transformer

Finally, we look at how the input to the Transformer model is structured. Given a batch of token sequences, the Transformer will process each token sequence and produce corresponding predictions for the next tokens.

# Example of input to the transformer

print(xb)

# Output:

tensor([[24, 43, 58, 5, 57, 1, 46, 431],

[44, 53, 58, 1, 58, 46, 39, 58],

[52, 58, 1, 58, 46, 39, 58, 1],

[25, 77, 27, 10, 0, 2, 1, 54]])

This tensor represents the input to the Transformer after the token embedding has been applied. Each row corresponds to a sequence of tokens that the Transformer will use to predict the next set of tokens.

Understanding these foundational concepts is crucial for grasping how more advanced language models like GPT function and how they manage to generate coherent and contextually relevant text based on enormous amounts of training data.

Delving into Tokenization Schemes Beyond Character-Level

Despite the simplicity and utility of the character-level tokenization, state-of-the-art language models leverage far more complex tokenization schemes. Rather than operating at the character level, these schemes work at the “chunk” level, where chunks of characters are used to construct the token vocabulary.

Byte Pair Encoding: Striking a Balance

The Byte Pair Encoding (BPE) algorithm stands out as a critical method for creating these chunks. Initially designed for data compression, BPE has been adapted for the tokenization in language models. The GPT-2 paper, titled “Language Models are Unsupervised Multitask Learners,” brought significant attention to byte-level BPE as a tokenization strategy for large language models.

Core Principles of Language Modeling

To appreciate the motivation behind the adoption of BPE, let’s consider the core principles outlined in the GPT-2 paper:

- Language modeling is essentially an unsupervised task where the model predicts the next token based on the sequence of previous tokens.

- A language model should ideally be able to compute probabilities for any string, hence the need for a comprehensive tokenization method that can handle the variety of text found in the “wild”.

BPE serves as an elegant middle ground between character-level and word-level tokenization, effectively managing frequent symbol sequences as well as rare or novel ones.

# Implementation of Byte Pair Encoding

from collections import defaultdict

def get_stats(vocab):

pairs = defaultdict(int)

for word, freq in vocab.items():

symbols = word.split()

for i in range(len(symbols) - 1):

pairs[symbols[i], symbols[i+1]] += freq

return pairs

def merge_vocab(pair, v_in):

v_out = {}

bigram = ' '.join(pair)

replacement = ''.join(pair)

for word in v_in:

w_out = word.replace(bigram, replacement)

v_out[w_out] = v_in[word]

return v_out

In the snippet above, we define functions to compute token frequencies and merge the most frequent pairs, which are the core operations of BPE. This process iteratively merges the most frequent pairs of characters or character sequences in the vocabulary until a desired vocabulary size is reached.

GPT-2’s Input Representation and Tokenization

The GPT-2 model expanded its vocabulary to 50,257 tokens, a substantial increase over its predecessor, and adjusted its architecture to accommodate the larger context size and vocabulary.

- A byte-level version of BPE was utilized to reduce the base vocabulary size needed to just 256 tokens.

- BPE was fine-tuned to avoid suboptimal token merges, particularly for common words appearing with slight variations.

- An exception was added for spaces in the BPE merges to improve compression efficiency while minimizing the fragmentation of words into multiple tokens.

These decisions allowed GPT-2 to avoid the pitfalls of character-level tokenization while still being able to model a wide variety of strings effectively.

# Example of how BPE tokenizes a given string

example_string = "This is an example of how BPE works."

example_vocab = {

"Th is": 5, "is an": 6, "an ex": 5, "ex am": 4,

"am pl": 4, "pl e": 5, "e of": 9, "of ho": 5,

"ho w": 4, "w BP": 3, "BP E": 3, "E wo": 3,

"wo rk": 3, "rk s": 3, ".":10

}

# Let's find out the most frequent pair of tokens

pairs = get_stats(example_vocab)

most_frequent_pair = max(pairs, key=pairs.get)

# Now, we will merge this most frequent pair in our vocabulary

example_vocab = merge_vocab(most_frequent_pair, example_vocab)

print(f"Most frequent pair: {most_frequent_pair}")

print(f"Updated vocabulary: {example_vocab}")

In the code above, we simulate how BPE would tokenize an example string by first establishing a mock vocabulary with frequencies, finding the most frequent pair, and then merging this pair across the vocabulary.

Architectural Hyperparameters of GPT-2

The GPT-2 model was benchmarked in several configurations with different numbers of parameters and layers, as summarized in the following:

- 117M parameters with 12 layers

- 345M parameters with 24 layers

- 762M parameters with 36 layers

- 1542M parameters with 48 layers

This scaling of the model was accompanied by a manual tuning of the learning rate to optimize performance on the WebText dataset.

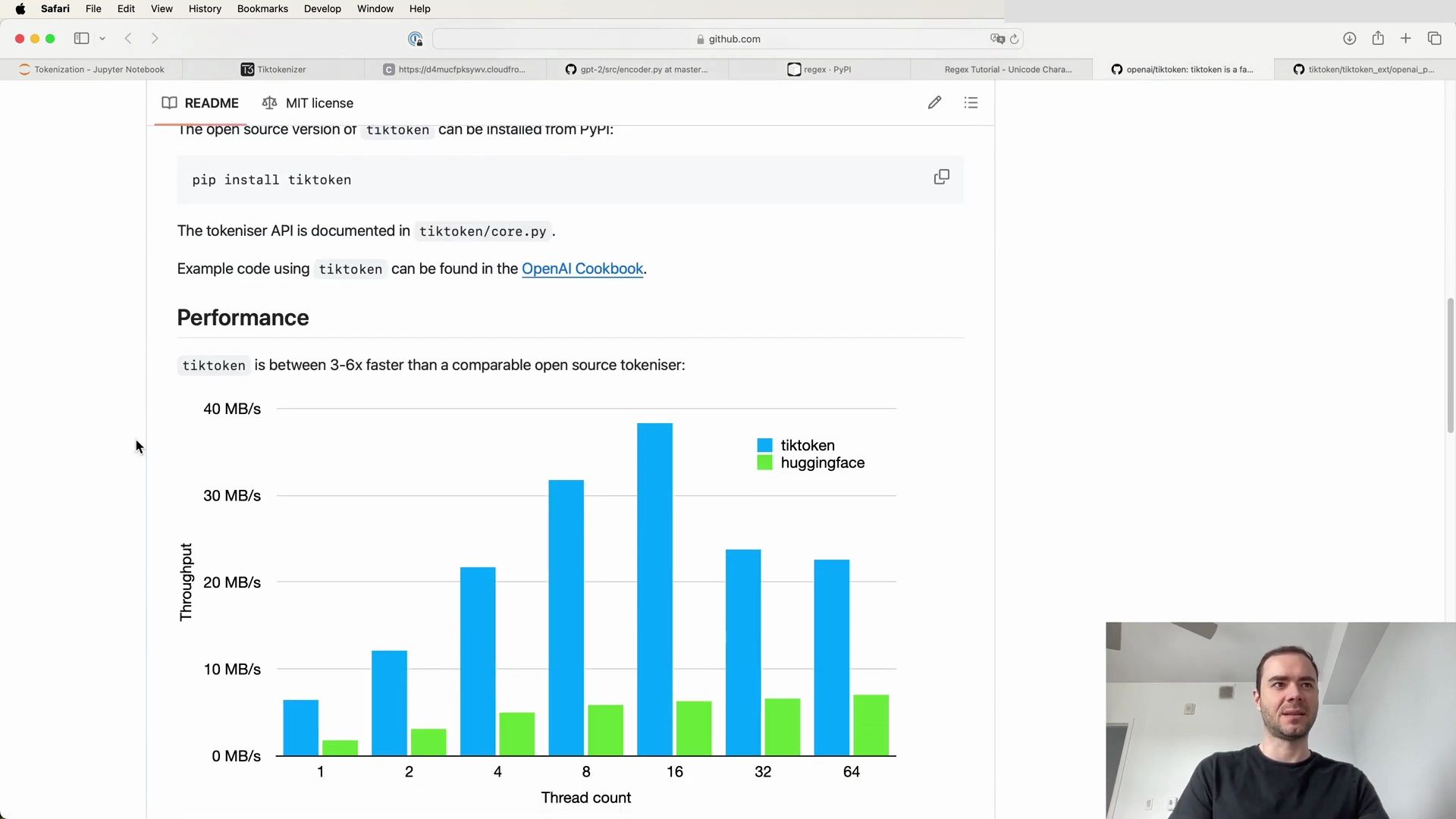

Tokenization: The Properties You Want

When considering a tokenization method, certain properties are desirable:

- Efficiency: The ability to tokenize and detokenize quickly.

- Coverage: The tokenizer should be able to handle any string it could encounter.

- Consistency: Similar strings should result in similar tokenizations.

BPE checks these boxes, providing a robust and flexible method that aligns with the requirements of large language models like GPT-2.

Concluding Remarks on GPT-2 Tokenization

As we have seen, the tokenization process is not a mere preprocessing step but a foundational element that significantly impacts the performance and capabilities of language models. The GPT-2 model’s adoption of a byte-level BPE tokenization was a strategic move that contributed to its state-of-the-art results, demonstrating the importance of a well-thought-out tokenization strategy in the development of LLMs.

Deep Dive into LLAMA 2 Pretraining and Tokenization Details

Building on the foundations of prior models, the development of LLAMA 2 models incorporates significant advances in pretraining methodologies, data curation, and architectural improvements.

Enhanced Pretraining Techniques

For the LLAMA 2 family of models, several key changes were introduced to optimize performance:

- Robust Data Cleaning: An essential step to ensure the quality of the training data, which directly impacts the model’s output.

- Updated Data Mixes: Adjusting the composition of the training corpus to better represent the diversity of natural language.

- Increased Token Count: Training on 40% more tokens provided the models with a richer learning experience.

- Doubled Context Length: The ability to consider longer sequences of text results in a deeper understanding of context.

- Grouped-Query Attention (GQA): An efficiency improvement for attention mechanisms, particularly beneficial for larger models.

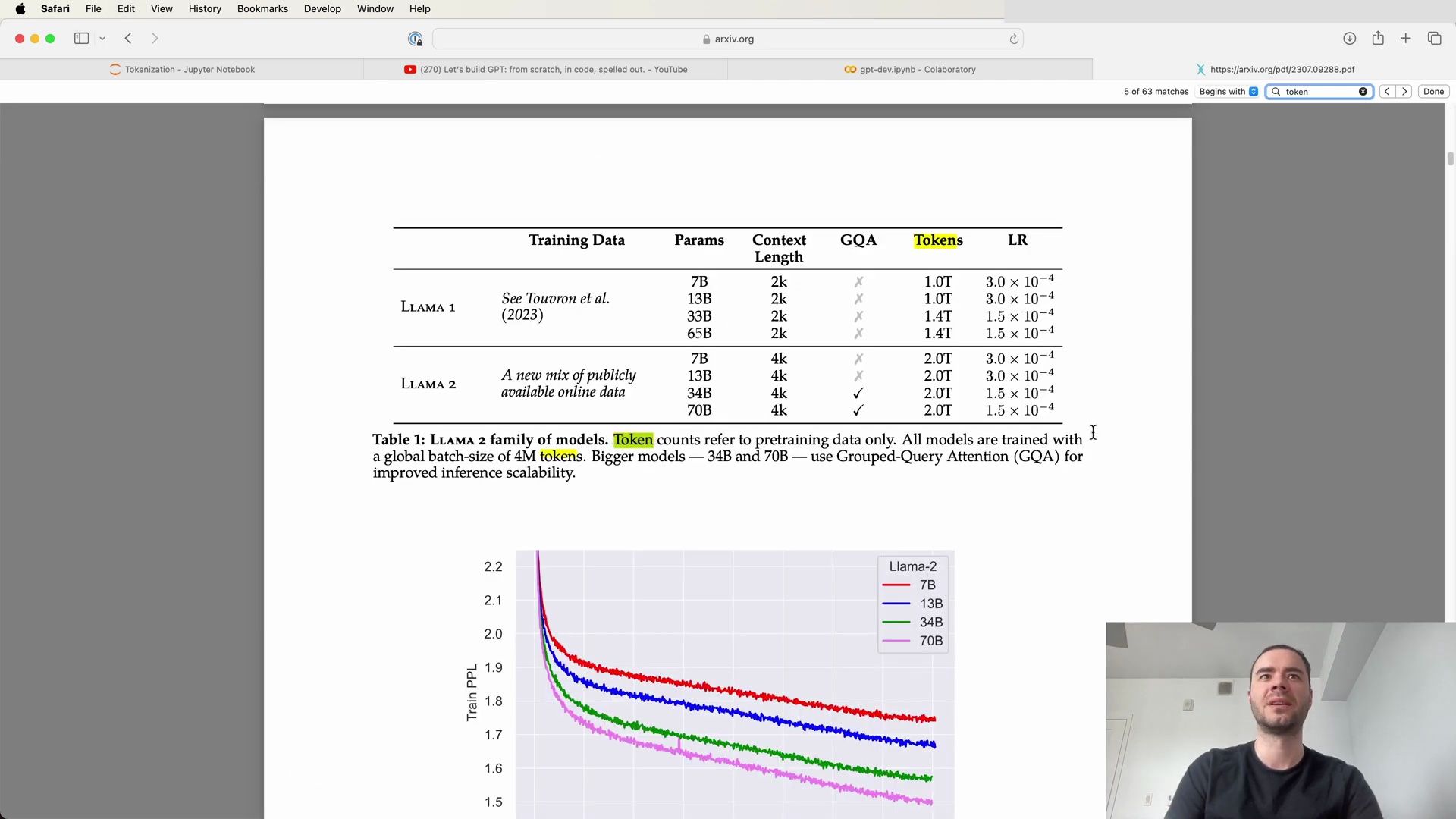

The above image illustrates the high-level architecture and training details of the LLAMA 2 models, including their hyperparameters and the improvements made over their predecessors.

Pretraining Data Considerations

The training data set for LLAMA 2 was carefully curated:

- Source Diversity: Data was compiled from a variety of publicly available sources, excluding any data from Meta’s own products or services.

- Privacy Conscious: Efforts were made to omit data from sites known for containing personal information.

- Factual Upsampling: To enhance the model’s knowledge base and reduce hallucinations, the most factual sources were up-sampled.

Training Details and Hyperparameters

The training of LLAMA 2 was guided by the following hyperparameters:

# Hyperparameters for LLAMA 2 Model Training

optimizer = AdamW

beta1 = 0.9

beta2 = 0.95

epsilon = 1e-5

lr_schedule = CosineLearningRateSchedule(warmup=2000)

weight_decay = 0.1

gradient_clipping = 1.0

These choices reflect a fine-tuning of the training process to optimize the learning curve and performance of the model, as depicted in Figure 5(a) from the original paper.

LLAMA 2 and LLAMA 2+CHAT Release

The release of LLAMA 2 and its variants marks a significant milestone in the field of LLMs:

- Variants: LLAMA 2 is available in 7B, 13B, and 70B parameter configurations, with 34B variants reported but not yet released.

- Dialog Optimization: LLAMA 2+CHAT, a fine-tuned variant, is tailored specifically for dialogue use cases.

- Open Release: The models are made available for both research and commercial use, signaling a commitment to open science and technology.

For more information and resources on LLAMA 2, visit the official Meta AI resource page.

Responsible Use and Safety Considerations

Despite the excitement surrounding the release of these models, safety remains paramount:

- Safety Testing: Before deploying any application of LLAMA 2+CHAT, developers are urged to perform rigorous testing.

- Guidance and Support: Meta provides a responsible use guide and code examples to aid in the safe deployment of these models.

The comprehensive approach to pretraining, fine-tuning, and safety underscores the responsible development and deployment of LLMs.

Addressing Tokenization Challenges in LLMs

Tokenization, the process of converting text into tokens, is at the heart of many challenges encountered in LLMs. It influences how well the model can perform various tasks and understand different languages.

Common Tokenization Issues

Here are some issues that can be traced back to tokenization:

- Spelling errors: Incorrect tokenization can lead to misspelled words.

- String processing: Simple tasks like reversing a string can be complicated by suboptimal tokenization.

- Language support: Non-English languages may suffer from poor tokenization schemes, resulting in inferior model performance.

- Arithmetic: A model’s ability to perform simple calculations can be hindered by how numbers are tokenized.

- Coding: Tokenization can affect a model’s proficiency in understanding and generating code.

Tokenization’s Pervasive Impact

The pervasive impact of tokenization is not to be underestimated. It is a fundamental aspect that needs to be addressed to improve LLMs’ overall capabilities and reliability. Understanding and optimizing tokenization is critical for advancing the field of language modeling.

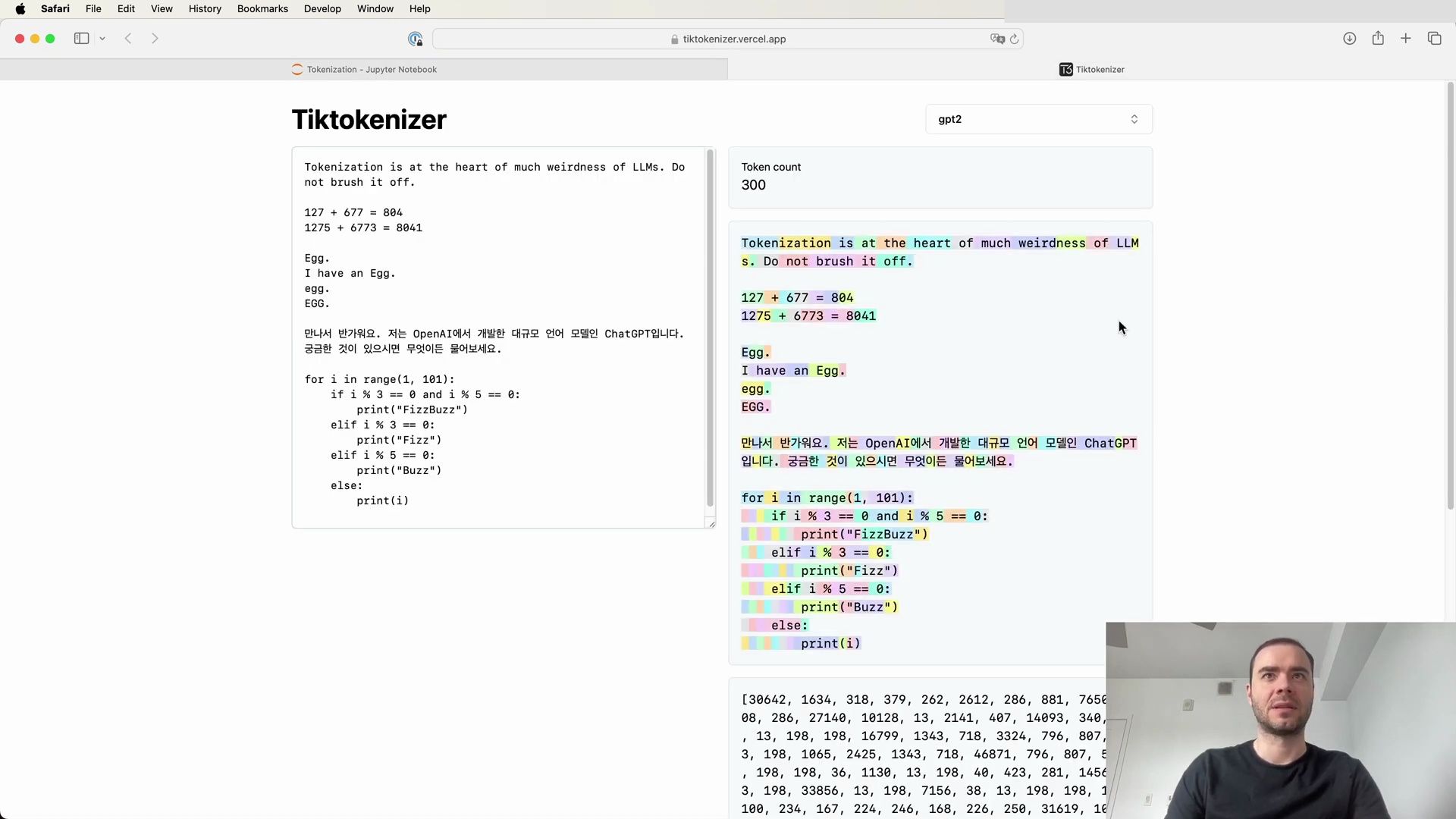

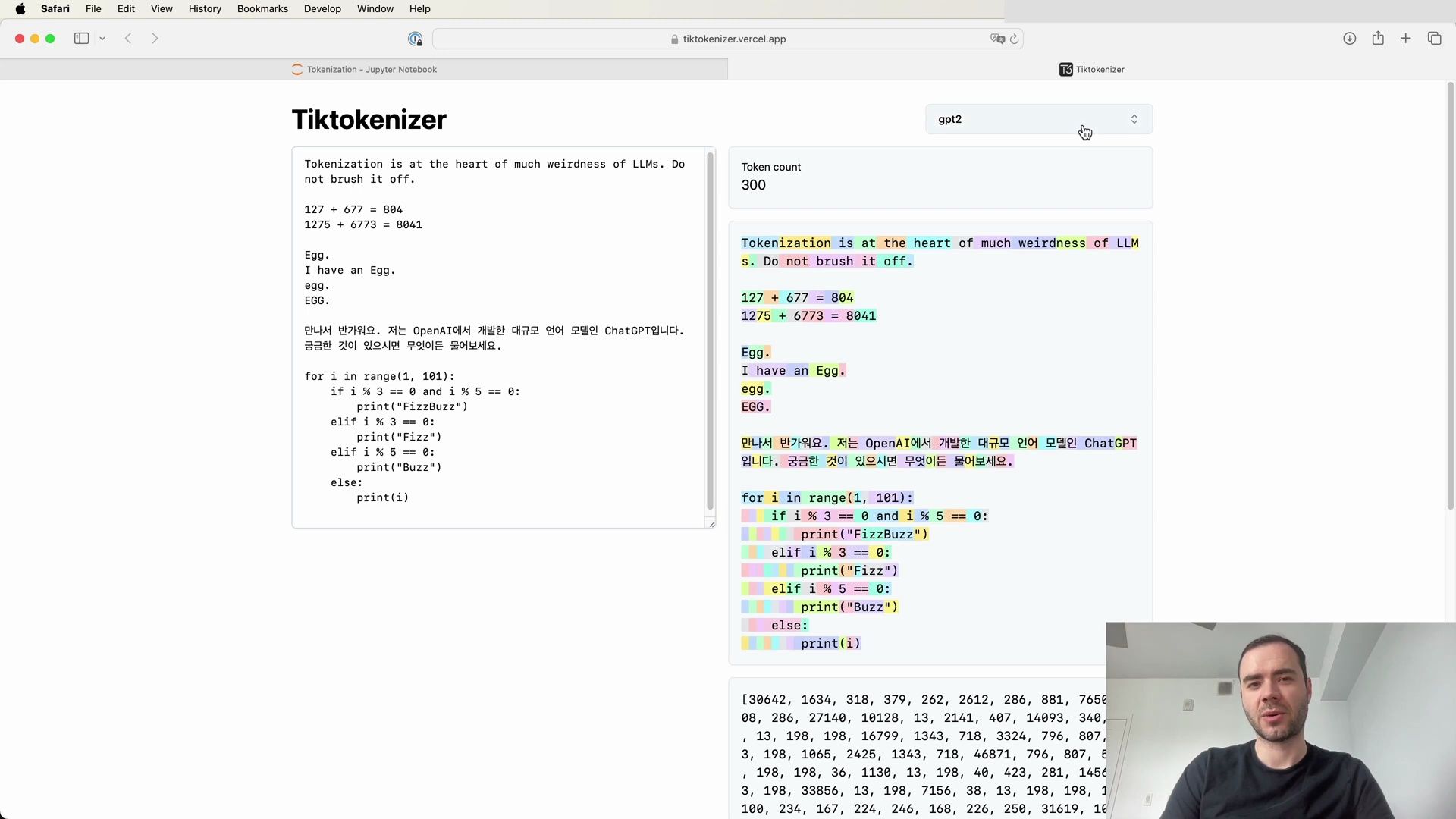

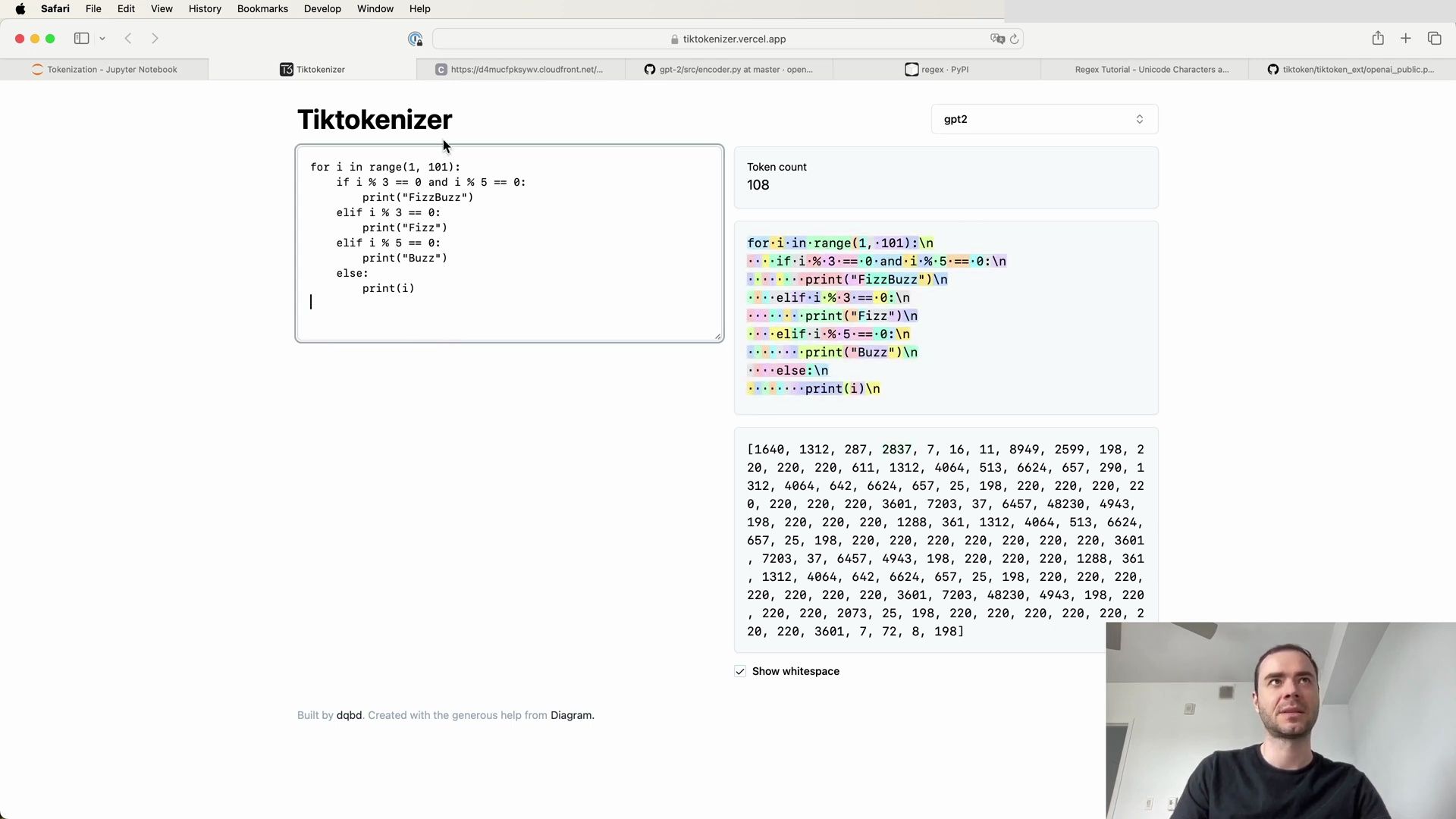

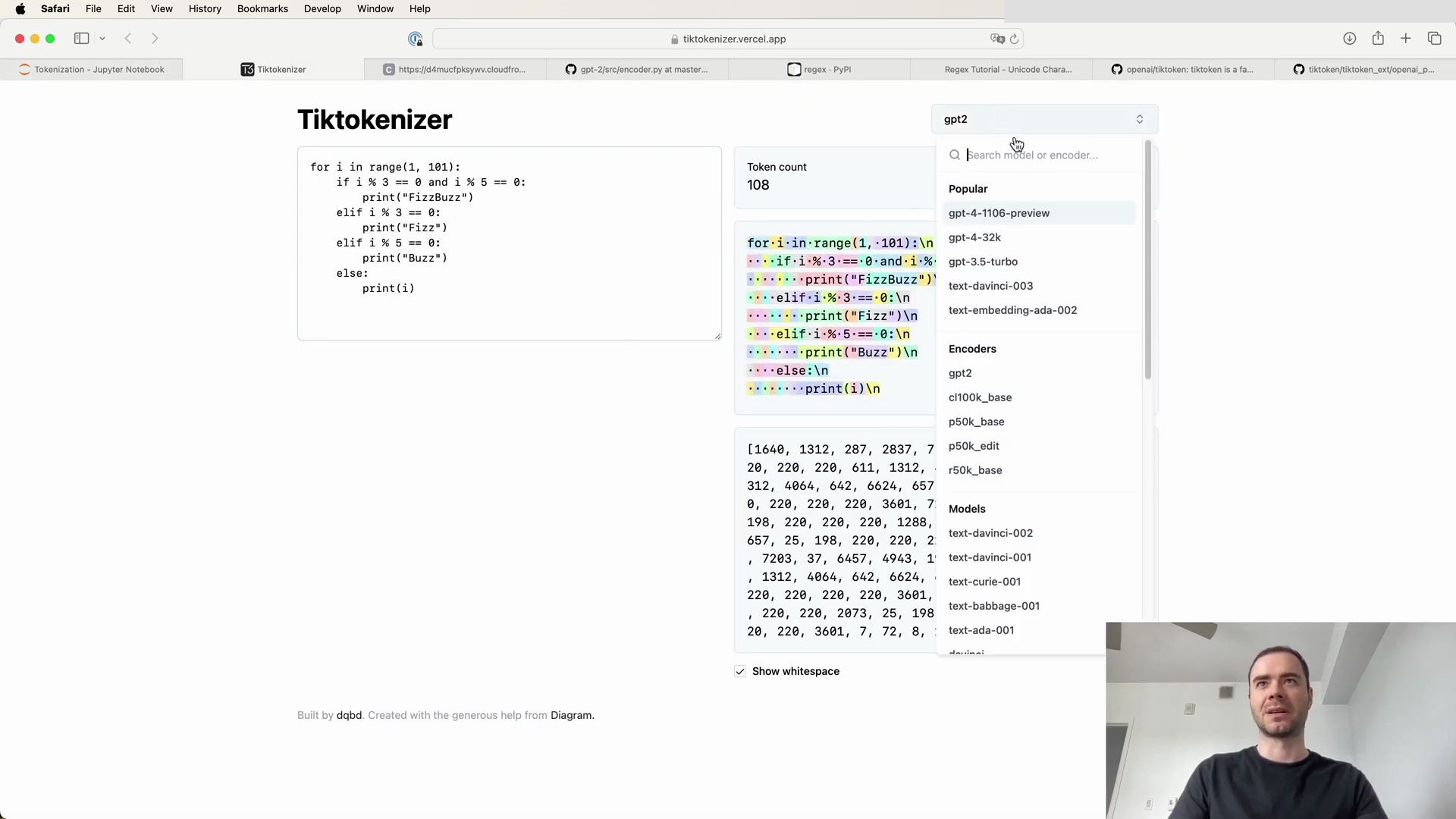

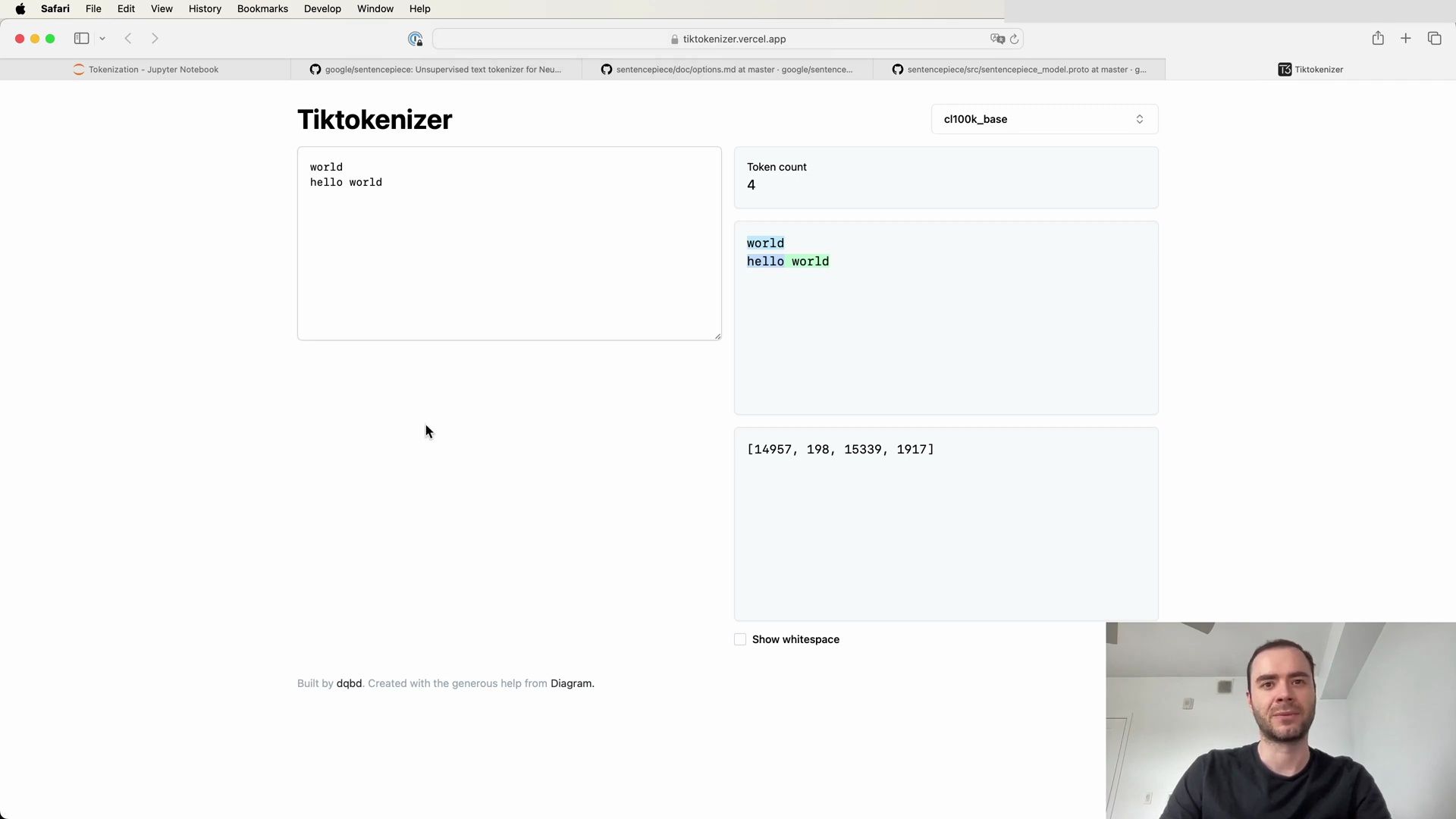

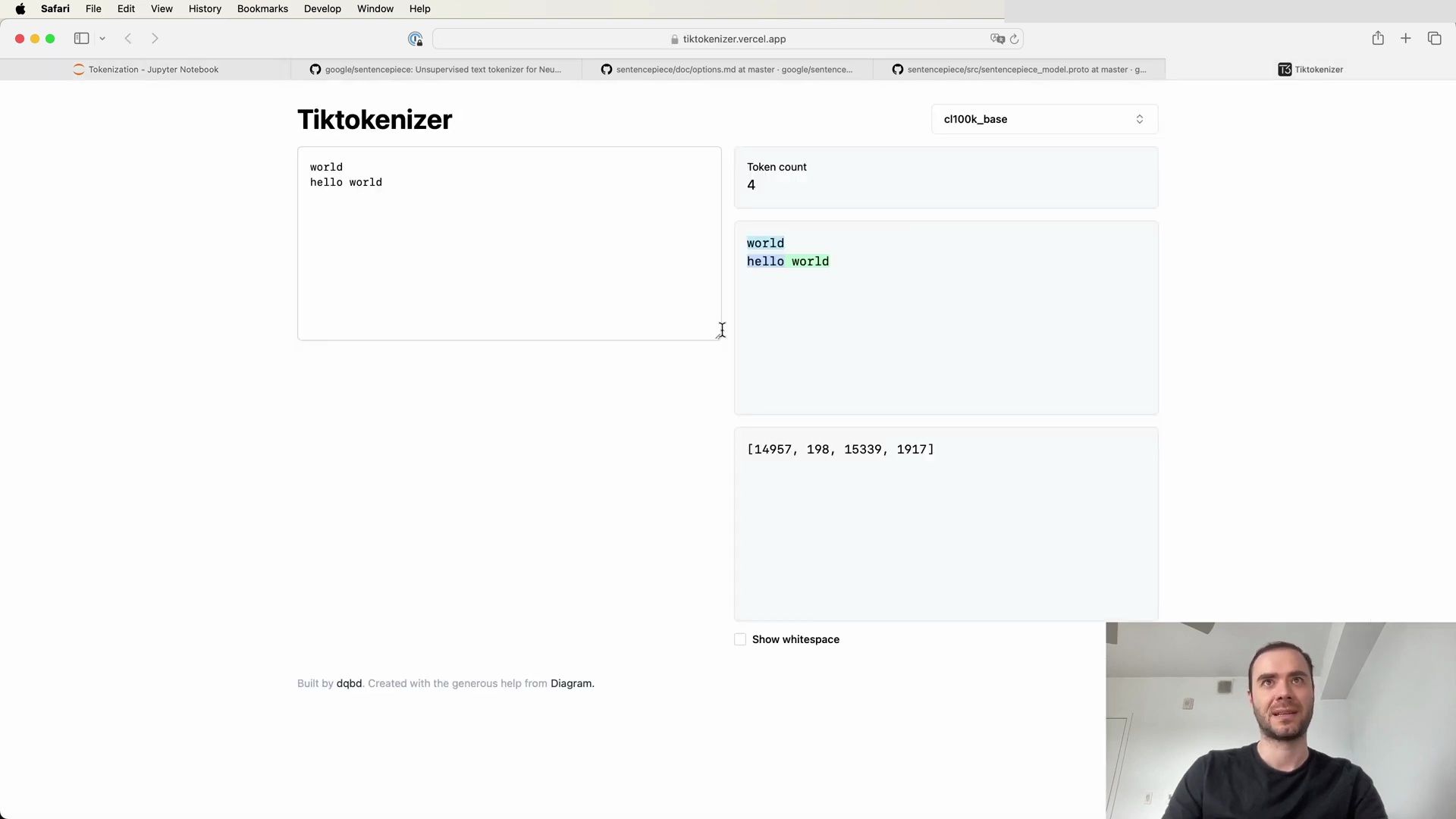

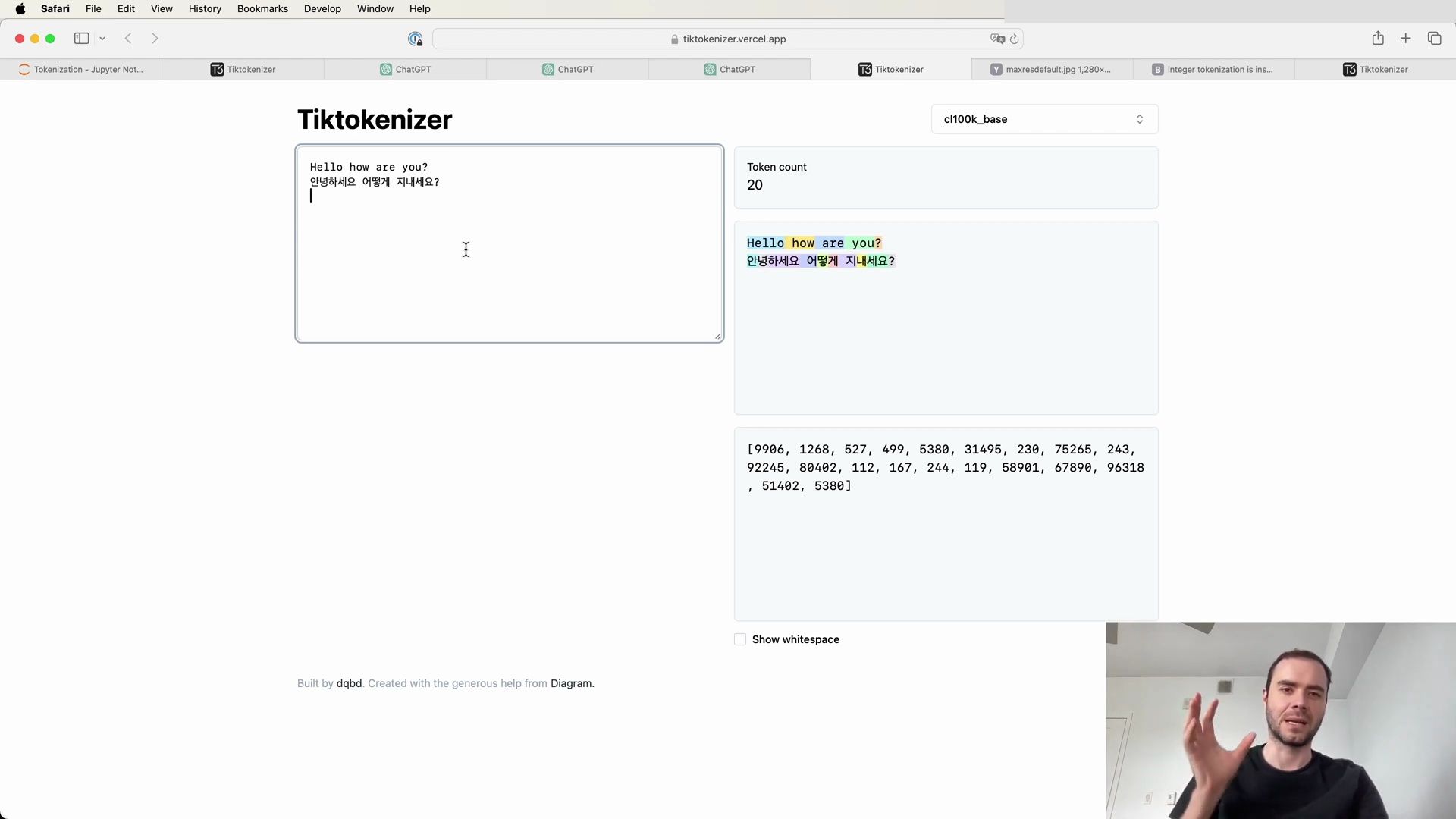

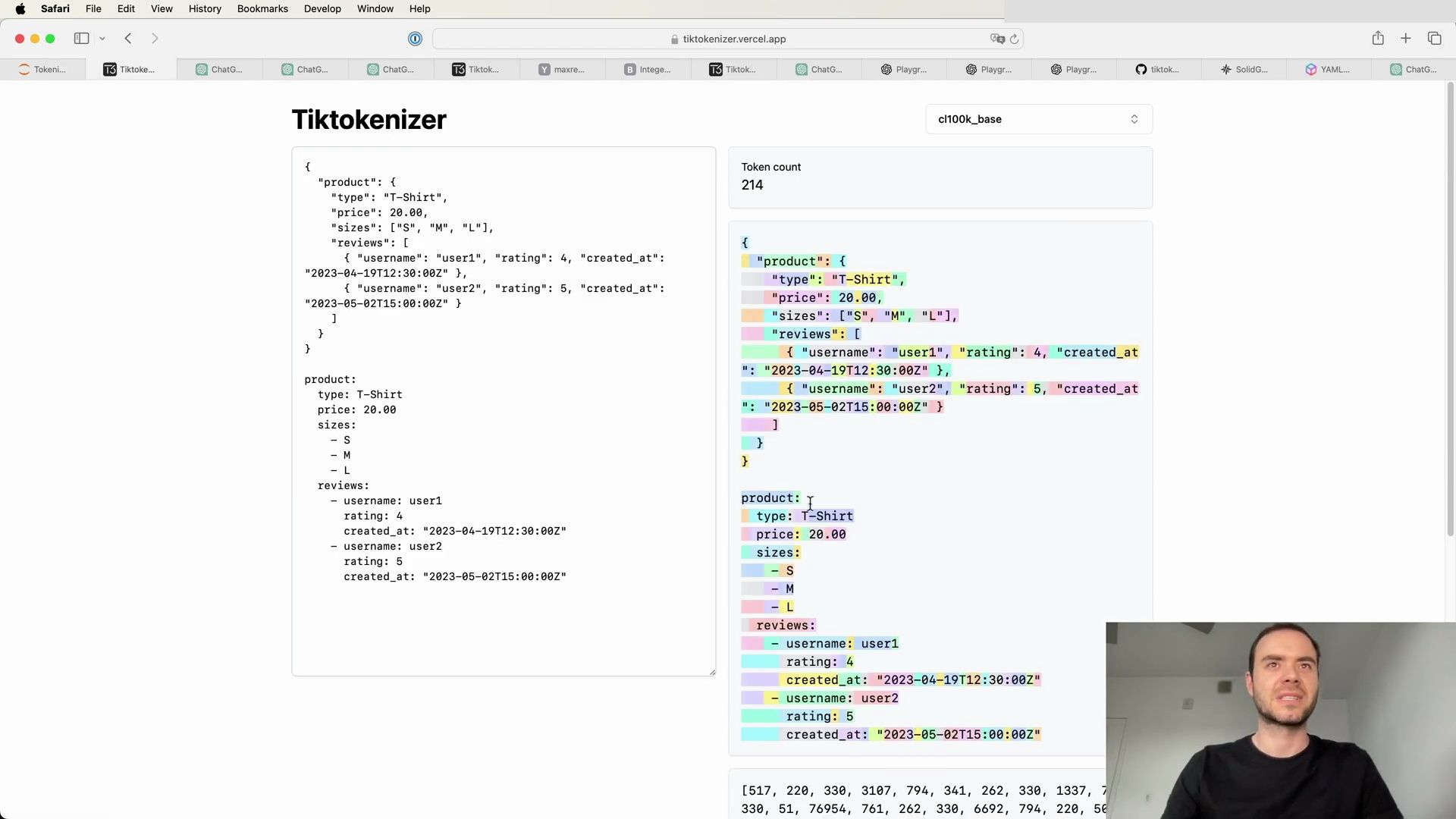

Exploring Tokenization with Web Apps

To get a better grasp of how tokenization works in practice, let’s explore a web application that allows live experimentation with tokenization:

Visit the tokenization webapp: https://tiktokenizer.vercel.app

This tool provides an interactive environment to input text and observe how different tokenization algorithms, such as the one used by GPT-2, break down and encode strings into tokens.

By dissecting pretraining methods, understanding the release of new LLM variants, and confronting tokenization challenges, we gain a deeper insight into the complexities and nuances of developing state-of-the-art language models. The continuous evolution of these models showcases the dynamic nature of the field and the ongoing quest to refine and enhance the capabilities of LLMs.

The Intricacies of Tokenization Visualized

Tokenization, as we’ve seen, can have a significant impact on the performance of Large Language Models (LLMs). Through a practical demonstration using a web application, we can visualize how tokenization operates in real-time.

Tokenization in Action

Using the GPT-2 tokenizer on a simple string, we can observe the process in a user-friendly format:

The web application showcases how an English sentence is tokenized into different chunks, each represented by a unique token ID, and emphasizes the importance of whitespace in the process. For example:

- The word “Tokenization” is split into two tokens:

30642and1634. - The phrase “ is” (including the space) becomes token

318. - The phrase “ at” is tokenized as

379, and “ the” as262.

This visualization underscores the inclusion of spaces as part of the token chunks. It’s also apparent that the tokenization of numbers can be inconsistent, as seen in arithmetic examples where the number 127 is a single token, but 677 is split into two separate tokens: “ 6” and “77”.

Tokenization and Non-English Languages

The tokenization of non-English languages, such as Korean, reveals a different set of challenges:

As the tokenizer has been trained predominantly with English data, non-English text often results in more tokens for the same content, leading to “bloated” sequences. This can affect the model’s ability to maintain context due to the finite context length of the transformer architecture.

Python Code Tokenization

When tokenizing Python code, the allocation of tokens to individual spaces can be incredibly wasteful:

The tokenizer assigns a separate token for each space, resulting in an inefficient tokenization that could impede the model’s coding capabilities.

# Example of Python code tokenization

for i in range(1, 101):

if i % 3 == 0 and i % 5 == 0:

print("FizzBuzz")

In the above snippet, the spaces before the if statement are each given their own token, such as 22. This inefficiency is one reason behind GPT-2’s poorer performance in handling Python code.

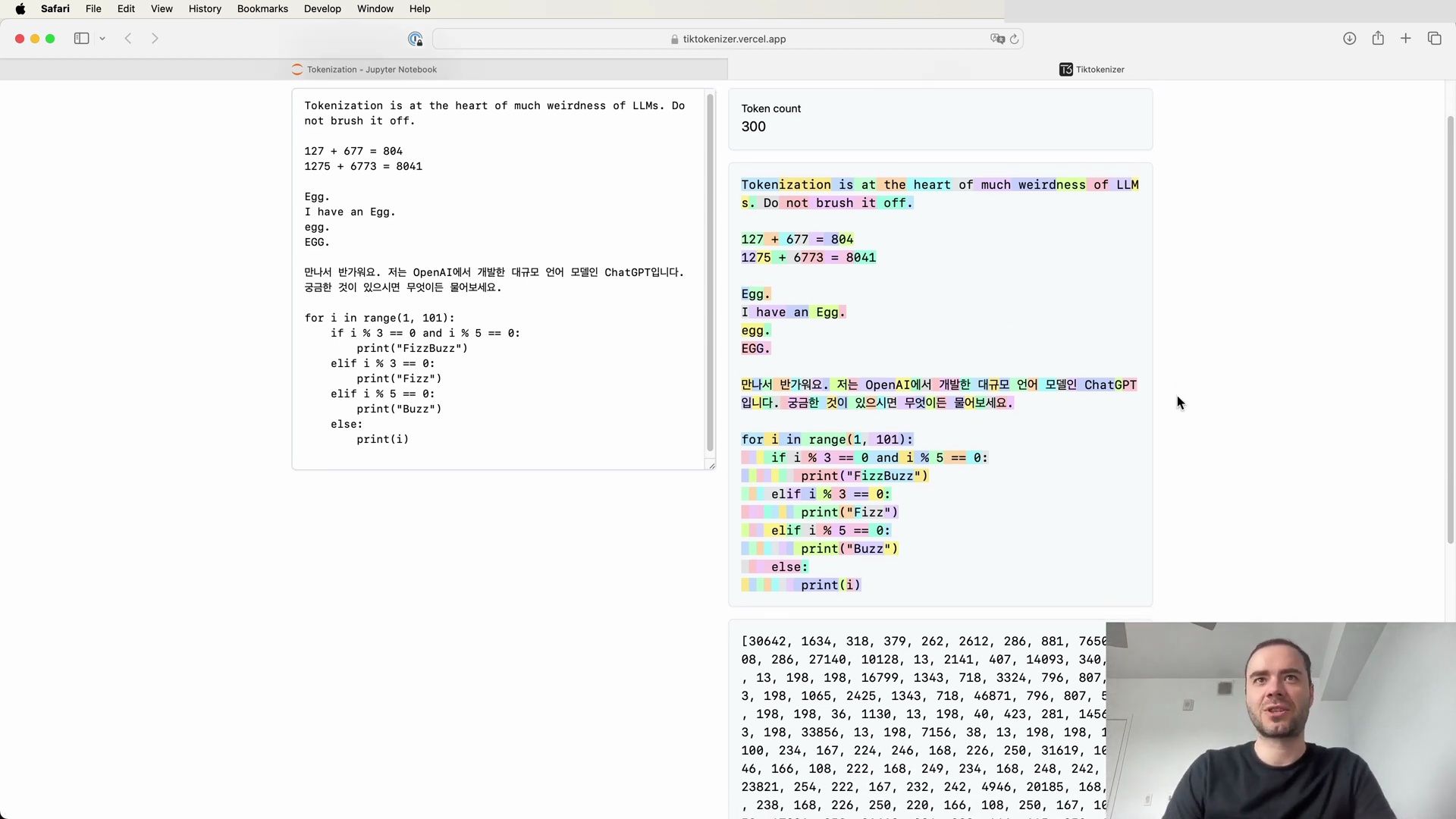

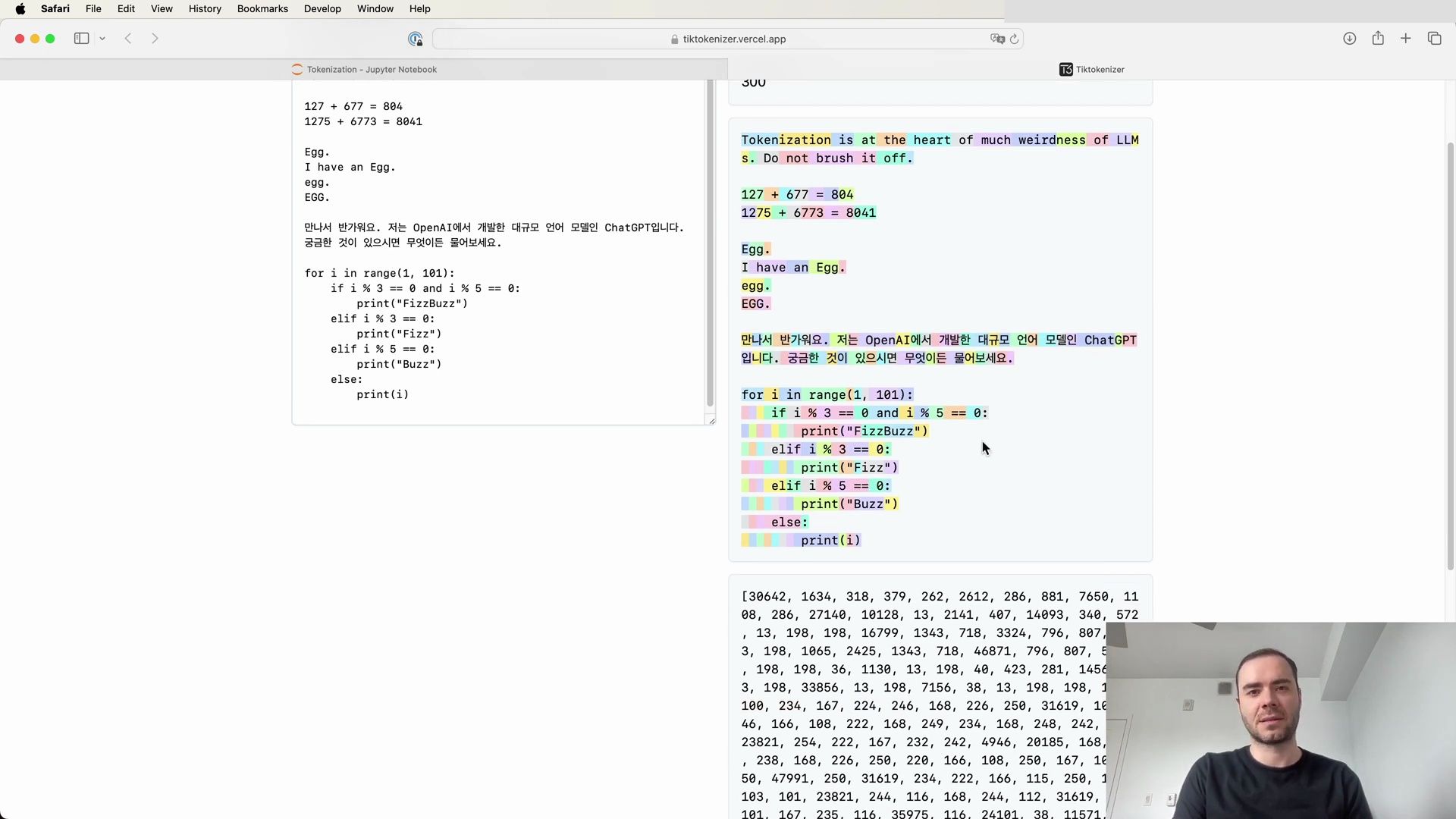

Improvements in GPT-4 Tokenization

By switching to the GPT-4 tokenizer, we can see a significant reduction in token count for the same string:

The GPT-4 tokenizer’s capacity to handle whitespace more effectively, especially in programming languages like Python, enhances the model’s ability to process code. This deliberate design choice by OpenAI results in a denser input to the transformer, allowing it to consider more context when predicting the next token.

The Underlying Complexity of Tokenization

Tokenization is not a mere preliminary step in preparing data for LLMs; it is a complex process that requires careful consideration, especially when dealing with a variety of languages and special characters. The goal is to convert strings into a fixed set of integers, which then correspond to vectors that serve as inputs to the transformer.

Unicode and Python Strings

In Python, strings are immutable sequences of Unicode code points. Understanding this is crucial when designing tokenizers capable of handling diverse languages and symbols.

# Python's approach to strings

Strings in Python are represented as sequences of Unicode code points.

The handling of strings in Python has evolved over time, with changes in version 3.2 introducing Sequence ABC support and version 3.3 adding new attributes and comparisons for range objects.

Tokenizing a Variety of Content

A tokenizer must be flexible enough to accommodate not just the English alphabet, but also other languages and a myriad of special characters found on the internet, such as emojis. This diversity is what makes the task of tokenization especially challenging in the context of LLMs.

# Challenges of diverse content tokenization

Tokenization must support:

- Multiple languages, including non-English scripts like Korean.

- Special characters and emojis.

- Case sensitivity and positional variations in words.

As we delve deeper into the inner workings of tokenization, it becomes increasingly clear that this aspect of language modeling is a linchpin for the success of LLMs. The design of tokenizers has a profound effect on a model’s ability to understand and generate text, emphasizing the need for ongoing research and optimization in this area.

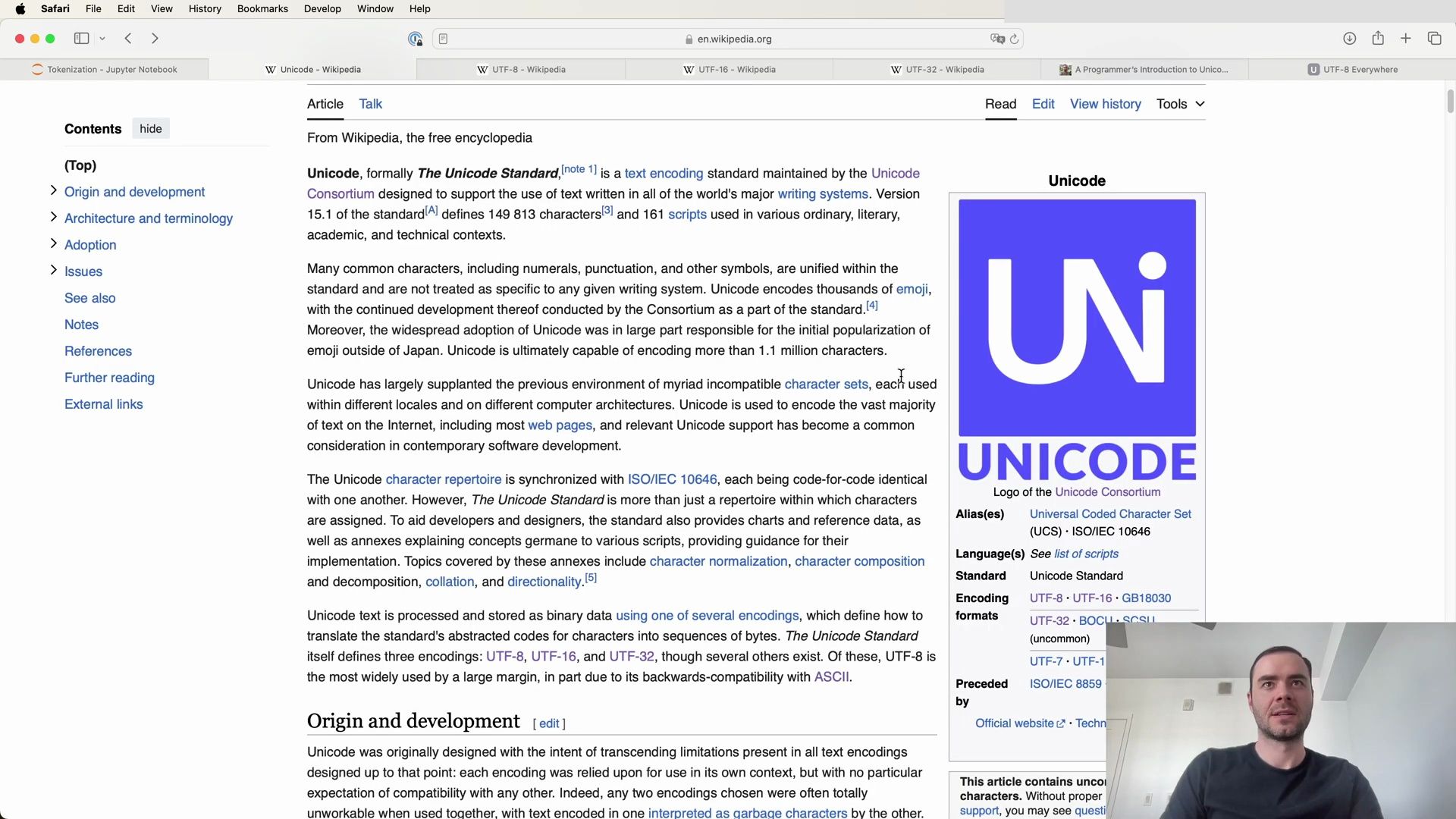

Understanding Unicode Code Points

Unicode code points are the backbone of modern text encoding and are crucial for processing text in Large Language Models (LLMs). They provide a unique number for every character, no matter the platform, program, or language.

The Unicode Standard Explained

The Unicode Standard is maintained by the Unicode Consortium and supports text in all of the world’s major writing systems. The key aspects of Unicode include:

- Character Definition: As of Version 15.1, the Unicode Standard defines 149,813 characters across 161 scripts.

- Scripts: These characters serve a wide range of uses, from ordinary text to literary, academic, and technical contexts.

- Unification: Many common characters, such as numerals, punctuation, and other symbols, are unified within the standard and not specific to any writing system.

- Emojis: Thousands of emojis are encoded, with ongoing development by the Consortium.

- Adoption: Unicode has been widely adopted for encoding the majority of text on the Internet, including web pages.

- Synchronization with ISO/IEC 10646: The Unicode character repertoire is synchronized with ISO/IEC 10646, ensuring compatibility.

The standard also offers guidance for implementation, including topics like character normalization, composition and decomposition, and directionality.

Unicode’s Role in Technology

Unicode has replaced many incompatible character sets that were previously used in different locales and computer architectures. Its support has become essential in software development, allowing consistent representation and data exchange across different platforms and locales.

The latest version, 15.1, was released in September 2023, indicating that the standard is actively maintained and updated to accommodate new characters and scripts.

Ligatures and Script-Specific Rules

Many scripts, including Arabic and Devanagari, use ligatures—a combination of letterforms into specialized shapes—according to complex orthographic rules. These require special technologies such as:

- ACE (Arabic Calligraphic Engine): Used for Arabic examples in The Unicode Standard.

- OpenType: Developed by Adobe and Microsoft.

- Graphite: Developed by SIL International.

- AAT (Apple Advanced Typography): Developed by Apple.

These technologies ensure that characters and ligatures are displayed correctly across different systems.

Standardized Subsets of Unicode

Unicode includes standardized subsets to support specific language groups:

- WGL-4: 657 characters designed to support European languages using Latin, Greek, or Cyrillic script.

- MES (Multilingual European Subsets): Ranging from MES-1 for Latin scripts to MES-3B for larger subsets.

- DIN 91379: A standard specifying a subset of Unicode to allow consistent representation of names and simplify data exchange in Europe.

Accessing Unicode Code Points in Python

In Python, the ord() function can be used to find a character’s Unicode code point. For example:

# Example of accessing Unicode code point of a character

print(ord('H')) # Output: 104

This can also be applied to emojis and other characters:

# Example of accessing Unicode code point of an emoji

print(ord('😀')) # Output: 128512 (decimal representation of the emoji's code point)

Keep in mind that ord() can only take single Unicode characters, not entire strings. Here’s how we could encode a string into Unicode code points:

# Encoding a string into Unicode code points

string = "Hello, World!"

code_points = [ord(character) for character in string]

print(code_points)

The output will be a list of integers representing the Unicode code points for each character in the string.

The Challenge of Using Unicode Code Points for Tokenization

The direct use of Unicode code points for tokenization in LLMs is impractical for a number of reasons:

- Vocabulary Size: The Unicode standard can encode over 1.1 million characters, leading to an unwieldy vocabulary size for any model.

- Sparse Representation: Many code points would rarely or never be used, leading to inefficiencies in the model’s representation of data.

Thus, while Unicode provides a way to represent characters from a wide array of languages and symbols, its direct use in tokenization is not feasible for LLMs. Tokenization strategies must strike a balance between the granularity of representation and the practical constraints of model architecture.

Exploring the Limits of Unicode for LLMs

The Unicode standard, while comprehensive and continuously evolving, presents challenges when applied directly to tokenization for Large Language Models (LLMs) due to the sheer volume of 150,000 different code points. In addition to the expansive size, the fluid nature of the Unicode standard makes it an unstable foundation for server-side usage. Therefore, a more sophisticated approach to encoding is necessary.

Delving into Python’s Encoding Capabilities

When working with Unicode in Python, we often utilize list comprehensions to convert a string into its corresponding code points:

# Converting a string to Unicode code points

string_to_encode = 'Hello, World!'

unicode_points = [ord(x) for x in string_to_encode]

print(unicode_points)

Understanding UTF Encodings

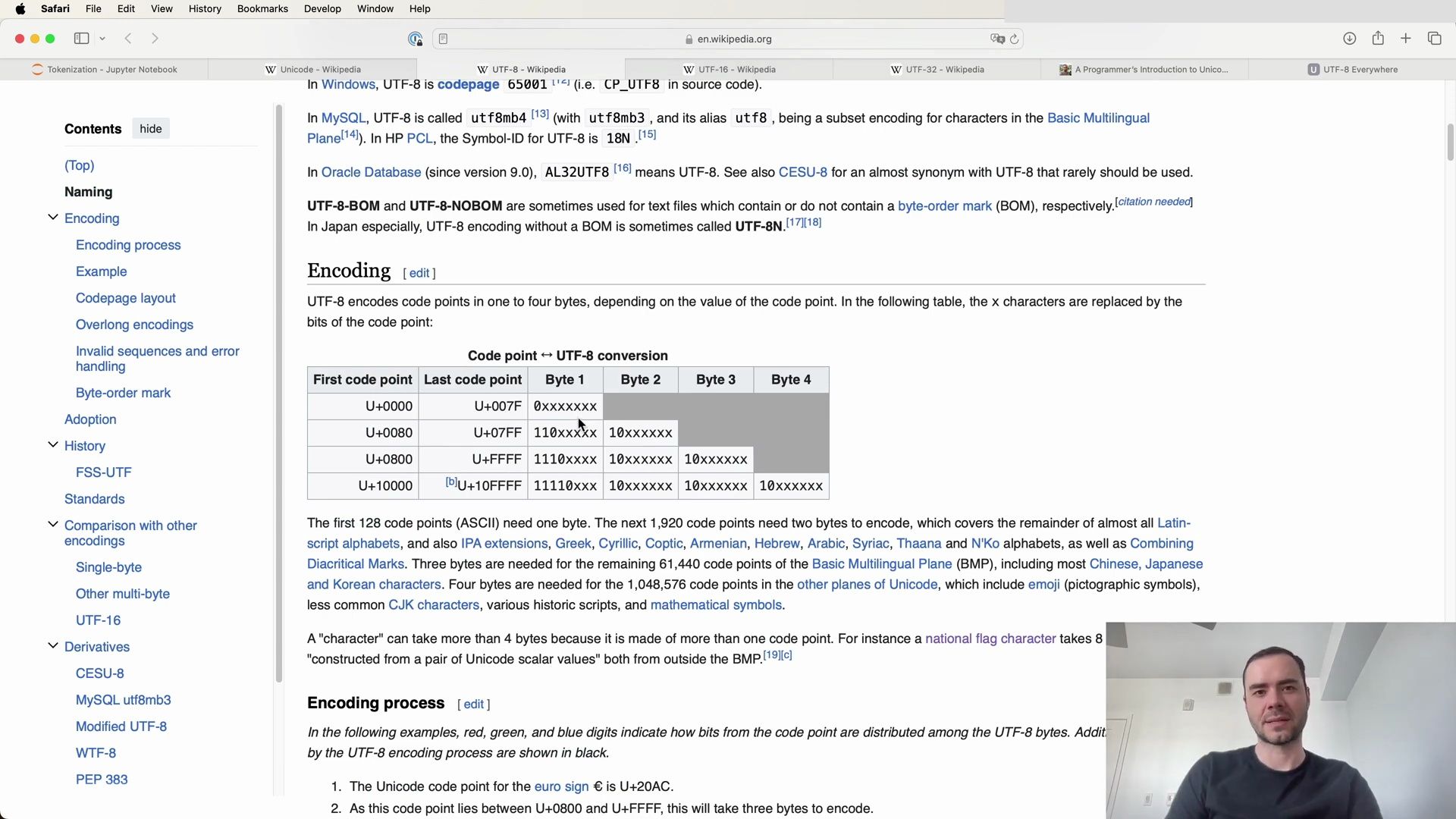

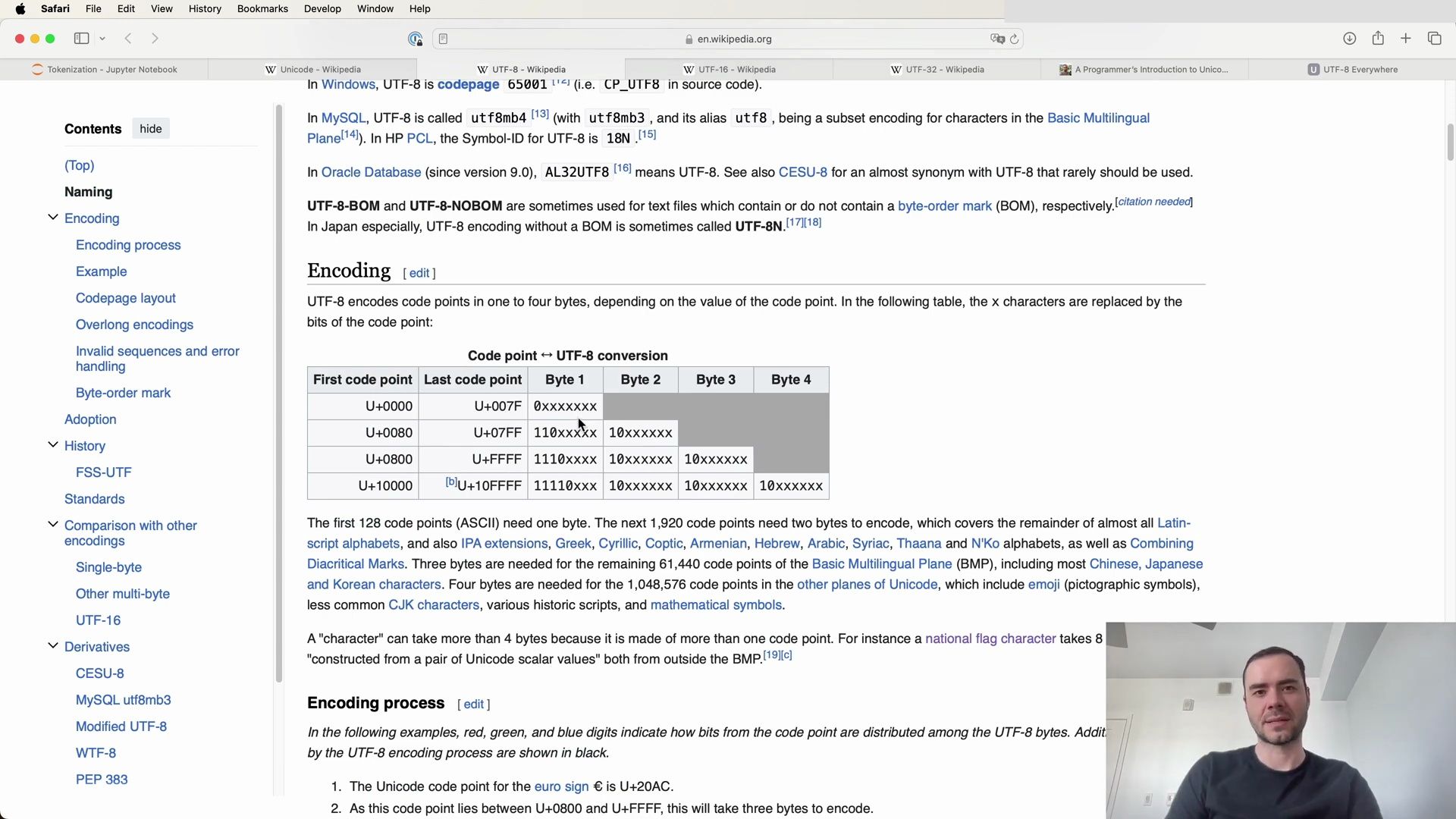

The Unicode Consortium defines three primary types of encodings: UTF-8, UTF-16, and UTF-32. These encodings allow the conversion of Unicode text into binary data or byte streams, with UTF-8 being the most prevalent.

UTF-8 is a variable-length character encoding standard used for electronic communication. It has the following features:

- Capable of encoding all 1,112,064 valid Unicode code points using one to four one-byte (8-bit) code units.

- Designed for backward compatibility with ASCII.

- Accounts for 98.1% of all web pages and up to 100% for many languages as of 2024.

To illustrate how UTF-8 encodes different Unicode code points, consider the euro sign (€), which is U+20AC:

- The code point lies between U+0800 and U+FFFF, requiring three bytes to encode.

- The three bytes can be concisely written in hexadecimal as E2 82 AC.

Here is a summary of the conversion process for UTF-8:

- A three-byte encoding starts with three 1s followed by a 0 (1110…).

- The four most significant bits of the code point are stored in the remaining lower four bits of this byte (1110 0010), leaving 12 bits yet to be encoded.

- Continuation bytes contain six bits from the code point, marked with high order two bits as 10 (10 000010).

- The final six bits of the code point are stored in the low order six bits of the final byte (10 101100).

UTF-16 and UTF-32: Alternatives to UTF-8

While UTF-8 is widely adopted, it is not without alternatives. UTF-16 and UTF-32 are other encoding formats, each with its own set of advantages and disadvantages.

UTF-16 is a variable-length character encoding capable of encoding all valid Unicode code points using one or two 16-bit code units. It is primarily used by systems like the Microsoft Windows API, Java, and JavaScript/ECMAScript. However, it has not gained popularity on the web and is declared by under 0.004% of web pages.

On the other hand, UTF-32 is a fixed-length encoding that uses exactly 32 bits per code point. Its primary advantage is direct indexing of Unicode code points, enabling constant-time operations for finding the Nth code point in a sequence. Despite this, UTF-32 is space-inefficient, often resulting in larger data sizes compared to UTF-8 or UTF-16.

Additional Resources for Unicode and Encoding

For those seeking to deepen their understanding of Unicode and encodings, several resources are available:

- The Unicode Standard: The definitive guide to Unicode specifications.

- UTF-8 Everywhere Manifesto: Advocating for the widespread adoption of UTF-8.

- International Components for Unicode (ICU): Libraries implementing Unicode algorithms.

- Python 3 Unicode Howto: A guide to Unicode handling in Python 3.

- Google Noto Fonts: A collection of fonts covering all assigned Unicode code points.

Programmer’s Perspective on Unicode

From a programmer’s perspective, Unicode represents a significant leap in complexity. It’s not just about using wchar_t for strings; it involves understanding the character set, working with strings and files of Unicode text, and handling diverse encodings like UTF-8 and UTF-16.

Here’s a summary of key points a programmer must consider:

- Diversity and Complexity: Unicode accommodates a vast range of characters and scripts, adding layers of complexity to text handling.

- Codespace Allocation: Understanding how Unicode allocates space for different scripts and characters.

- Encodings: Choosing the right encoding (UTF-8, UTF-16, or UTF-32) based on the application’s needs.

For a comprehensive introduction to Unicode from a coder’s viewpoint, Nathan Reed’s blog “A Programmer’s Introduction to Unicode” is an invaluable resource.

In the next section, we’ll delve into the specific algorithms and techniques used for tokenization in LLMs, which address the challenges posed by Unicode’s complexity and the limitations of direct encoding methods.

UTF-8 Everywhere Manifesto

The discussion of Unicode would be incomplete without mentioning the “UTF-8 Everywhere Manifesto”. The manifesto advocates strongly for the adoption of UTF-8 as the universal character encoding, emphasizing that it should be the default choice for encoding text strings in memory, on disk, for communication, and for all other uses. The manifesto outlines several reasons for this:

- Performance: UTF-8 has been shown to improve software performance.

- Simplicity: It reduces the complexity of software development.

- Bug Prevention: The use of UTF-8 helps to prevent many Unicode-related bugs.

- UTF-16 Critique: The manifesto criticizes UTF-16, which is often mistakenly referred to as ‘widechar’ or simply ‘Unicode’ in Windows environments, and suggests its use should be limited to specialized text processing libraries.

The manifesto also contains recommendations for string handling in Windows applications and argues against the use of ‘ANSI codepages’. It states that developers should not have to be concerned with encoding complexities when creating applications that are not specialized in text processing. The manifesto advises that iterating over Unicode code points should not be considered an important task in text processing as many developers mistakenly regard code points as successors to ASCII characters.

UTF-8: The Preferred Encoding

UTF-8 is the only encoding standard that is backward compatible with ASCII, making it significantly preferred and widely used on the internet. This backward compatibility is one of the major advantages of UTF-8, as it allows for a seamless transition from older technologies that use ASCII to the more expansive Unicode standard.

Encoding Strings in Python

Python’s string class provides a straightforward way to encode strings into various formats, including UTF-8, UTF-16, and UTF-32. Here’s an example of encoding a string into UTF-8:

# Encoding a string into UTF-8

unicode_string = '안녕하세요 👋 (hello in Korean!)'

utf8_encoded = unicode_string.encode('utf-8')

utf8_encoded_list = list(utf8_encoded)

print(utf8_encoded_list)

When encoded into UTF-8, we see a bytes object that represents the string according to the UTF-8 encoding standards. However, if we were to look at the UTF-16 encoding of the same string, we would notice a pattern of zero bytes interspersed with the encoded characters, hinting at the inefficiency of UTF-16 for certain types of text:

# Encoding a string into UTF-16

utf16_encoded = unicode_string.encode('utf-16')

utf16_encoded_list = list(utf16_encoded)

print(utf16_encoded_list)

Similarly, encoding into UTF-32 would reveal even more wastefulness in terms of space:

# Encoding a string into UTF-32

utf32_encoded = unicode_string.encode('utf-32')

utf32_encoded_list = list(utf32_encoded)

print(utf32_encoded_list)

The inefficiency of UTF-16 and UTF-32, particularly for ASCII or English characters, reinforces the argument for the widespread adoption of UTF-8 for most applications.

The Challenge of Encoding for LLMs

Despite the efficiency of UTF-8, using it directly in language models is not without its problems. If we were to use UTF-8 byte streams as tokens, we would be limited to a vocabulary size of only 256 possible tokens, which is very small. This would result in our text being represented as very long sequences of bytes, which is not ideal. Long sequences would make the attention mechanism in Transformers computationally expensive and inefficient, as it would restrict the context length available for token prediction tasks.

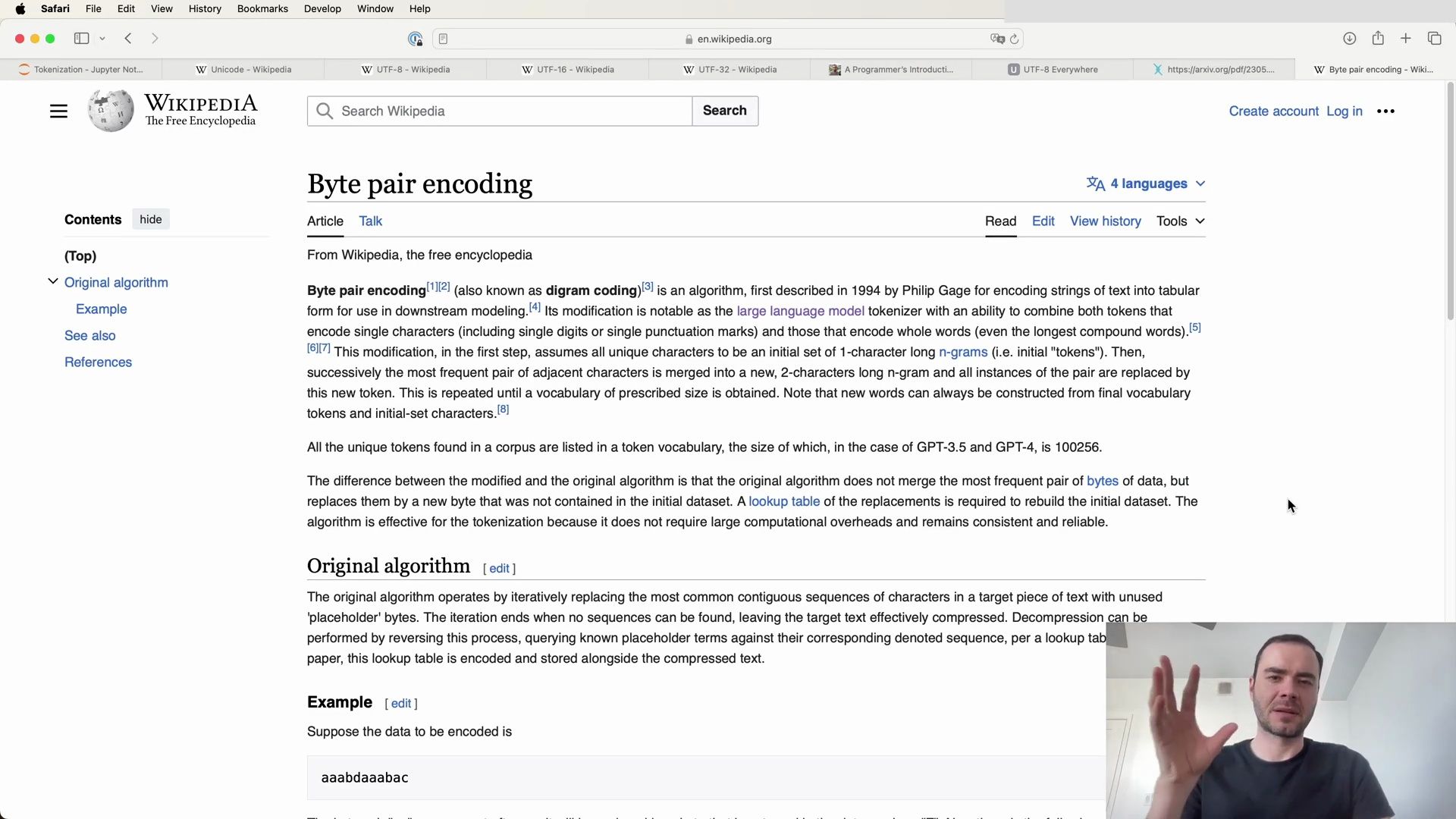

Byte Pair Encoding (BPE) Algorithm

To address the challenge of encoding for LLMs, the Byte Pair Encoding (BPE) algorithm is employed. BPE allows us to compress byte sequences significantly. The algorithm works by iteratively finding the most frequent pair of tokens in the text and replacing that pair with a single new token that is added to the vocabulary.

Let’s take a closer look at how the BPE algorithm works with an example:

# Example of Byte Pair Encoding

original_sequence = ['A', 'A', 'B', 'C', 'A', 'A', 'D', 'A', 'A', 'B', 'C']

# Step 1: Identify the most frequent pair ('A', 'A') and replace with 'Z'

step_1_sequence = ['Z', 'B', 'C', 'Z', 'D', 'Z', 'B', 'C']

# Step 2: Identify the next most frequent pair ('B', 'C') and replace with 'Y'

final_sequence = ['Z', 'Y', 'Z', 'D', 'Z', 'Y']

The process is repeated until no further compression is possible or until a desired vocabulary size is reached. The BPE algorithm is an essential component in the tokenization process for LLMs, as it allows for a tunable vocabulary size while retaining the efficiency of UTF-8 encoding.

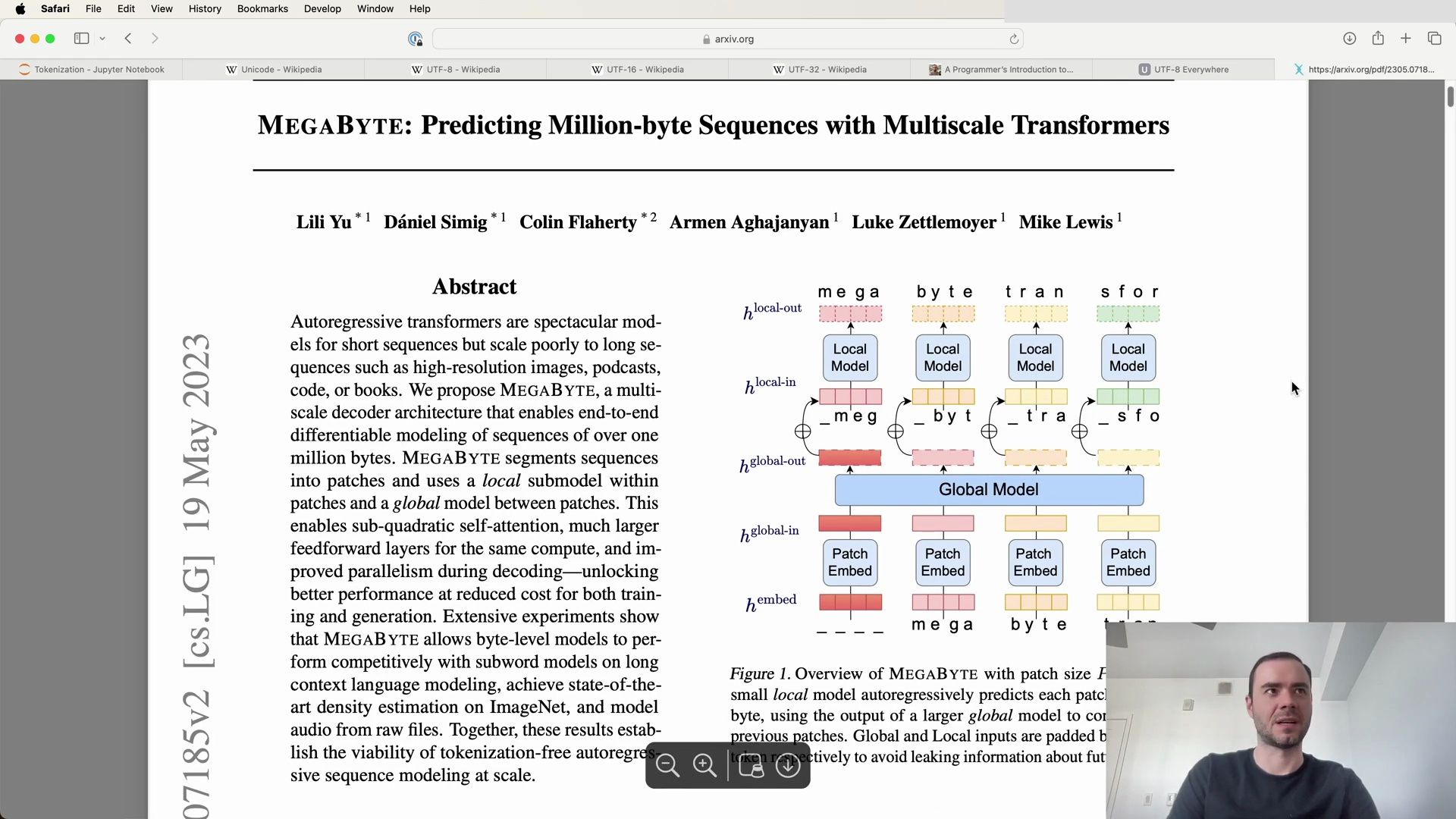

Hierarchical Transformers

The idea of feeding raw byte streams directly into language models is fascinating and has been explored in research. The paper titled “Tokenization Free: How Byte-Level Models Can Represent Code” from OpenAI discusses a hierarchical structuring of the Transformer architecture that could allow for the processing of raw bytes without tokenization. This approach could potentially enable aggressive sequence modeling at scale. However, this concept is still in the experimental phase and has yet to be widely adopted or proven efficient at scale.

Conclusion on BPE and LLM Encoding

In conclusion, while the prospect of tokenization-free sequence modeling is intriguing, current language models rely on compression techniques like the BPE algorithm to manage the balance between vocabulary size and sequence length. These methods allow for efficient encoding while enabling the model to handle a wide variety of text data.

Continuation of Byte Pair Encoding (BPE) Algorithm

As we delve further into the Byte Pair Encoding algorithm, it becomes evident that the vocabulary size continues to grow with each iteration. We begin with a fixed initial vocabulary size based on our byte sequences but, as the algorithm progresses, we mint new tokens and append them to our vocabulary. This process iteratively compresses the sequence while expanding the vocabulary.

Let’s continue with our example. We find the most common pair ‘ZY’ in our sequence and replace it with a new token ‘X’. After this replacement, we get the following sequence:

# Continuing BPE example with new replacements

step_3_sequence = ['X', 'D', 'X', 'Y']

The original sequence of 11 tokens now becomes a sequence of 5 tokens, but our vocabulary size has expanded to 7. The BPE algorithm has compressed the original sequence by more than 50% while only adding two new tokens to the vocabulary.

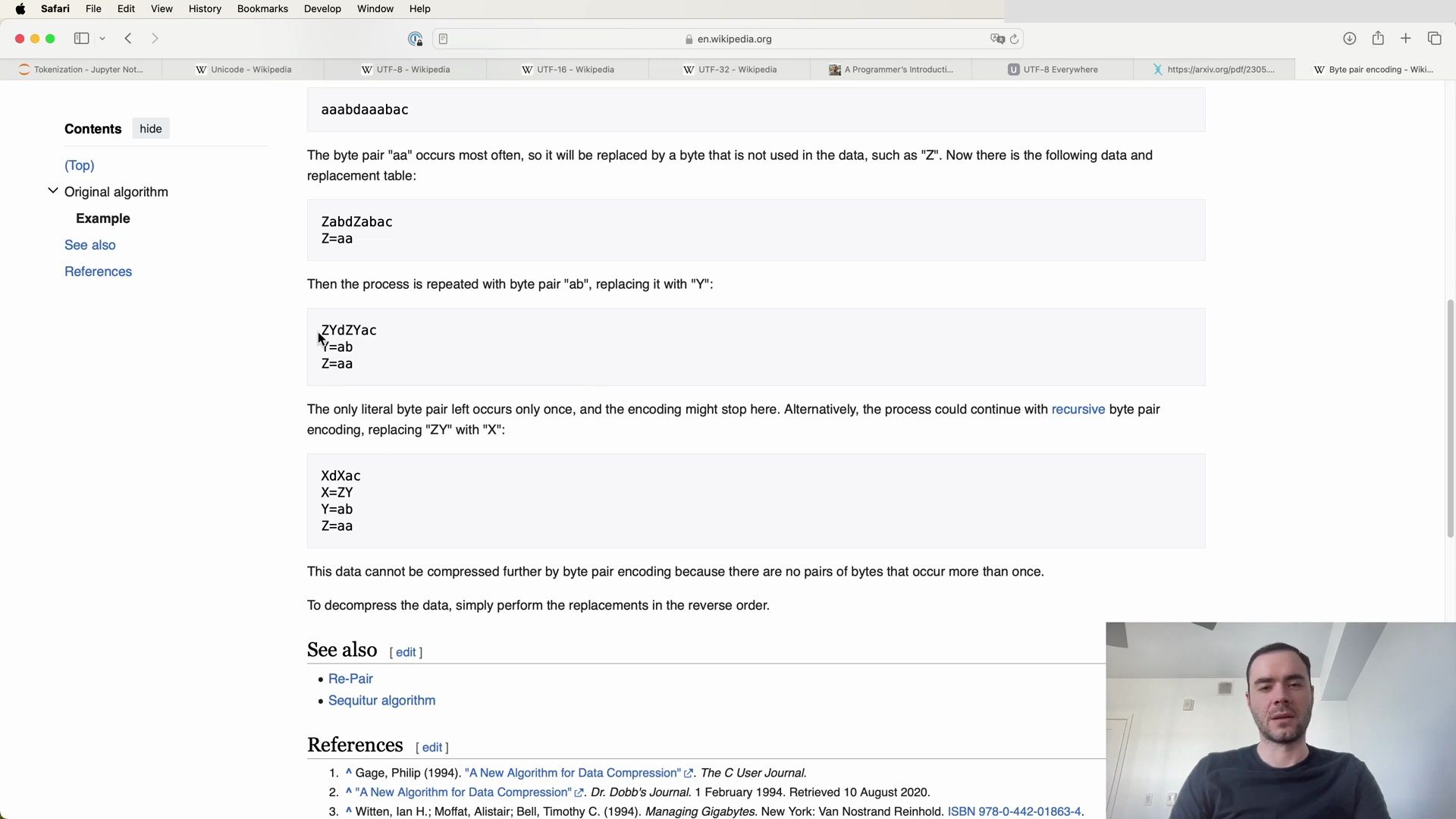

Example of BPE in Practice

Consider the following string to be encoded using BPE:

aaabdaaabac

The byte pair ‘aa’ is the most frequent, so we replace it with a new token ‘Z’:

ZabdZabac

Continuing this process, we end up with a compressed sequence and an expanded vocabulary. This iterative method is how we prepare a training dataset for a language model, and create an algorithm for encoding and decoding arbitrary sequences.

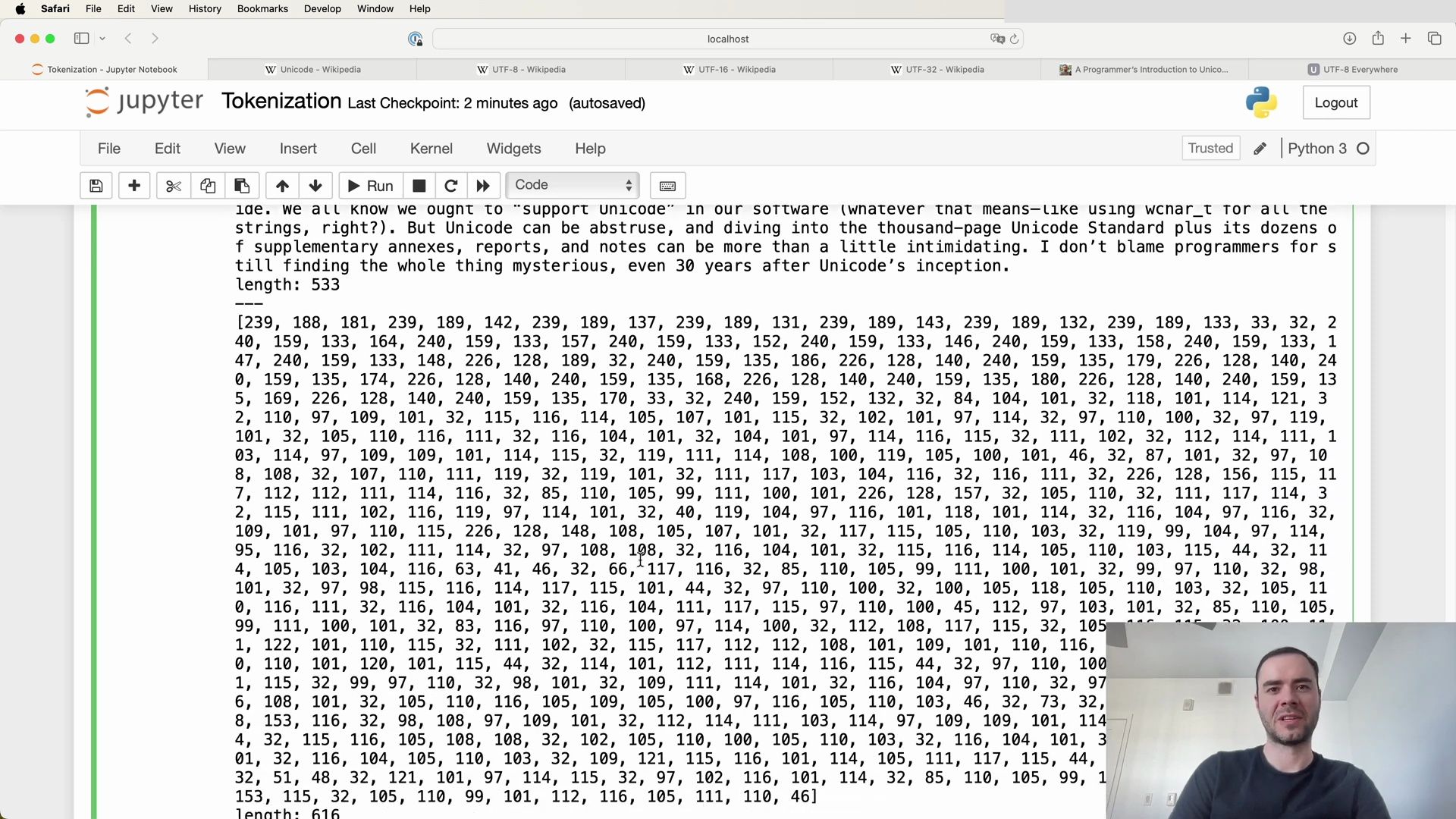

Tokenization in Python

Using Python, we can encode our text into UTF-8, thereby converting it to a stream of bytes. For convenience in manipulation, we can then convert these bytes into integers and create a list. Below is an example of converting a paragraph into UTF-8 encoded bytes and then to a list of integers:

# Convert paragraph text into UTF-8 encoded bytes

text = "Example paragraph text..."

utf8_encoded = text.encode('utf-8')

# Convert UTF-8 encoded bytes to a list of integers

tokens = list(map(int, utf8_encoded))

print(tokens)

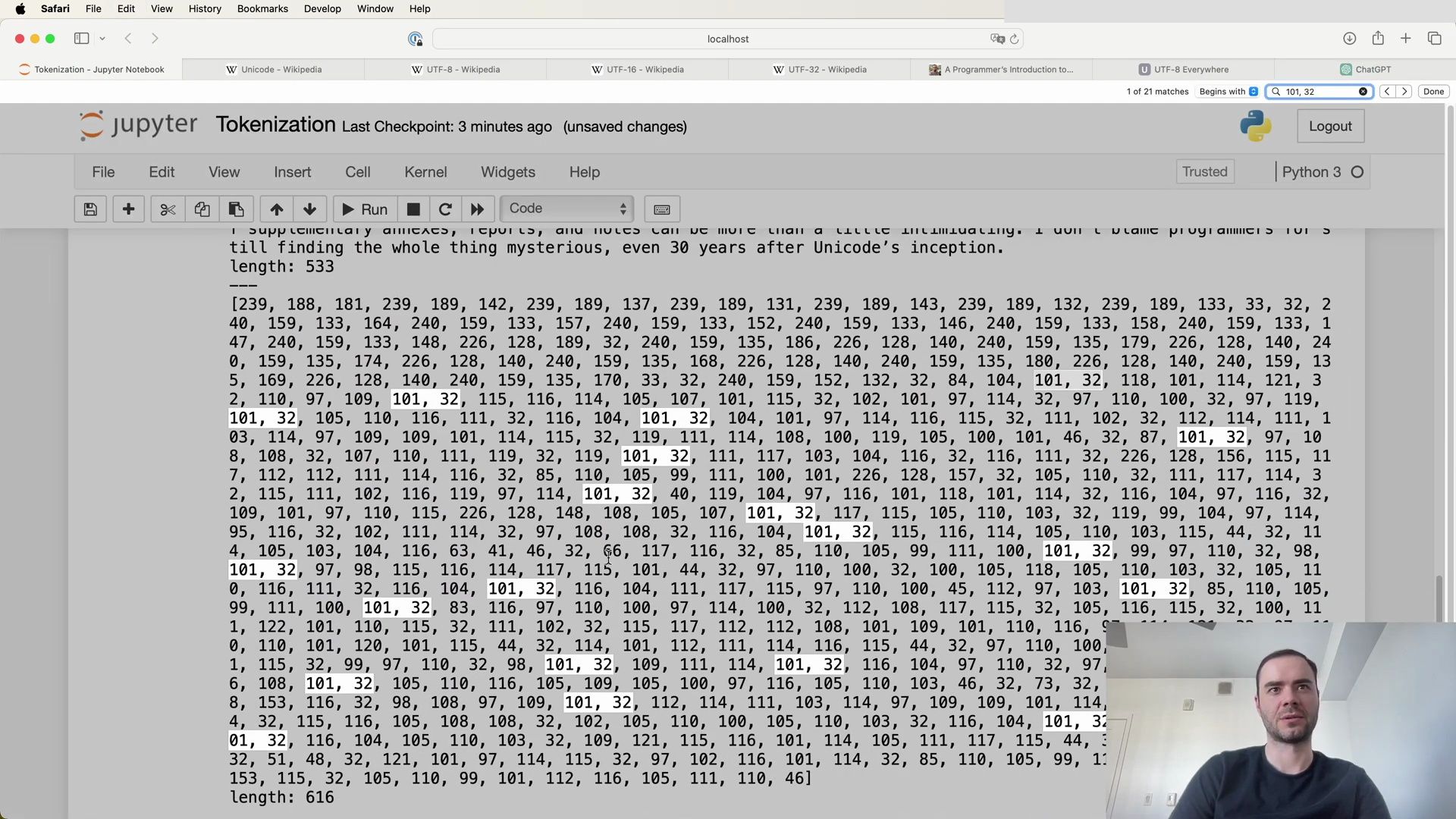

The length of the paragraph in code points might be 533, but after encoding to UTF-8, we get a length of 616 bytes, or 616 tokens. More complex Unicode characters can expand into multiple bytes, which increases the token count.

Finding the Most Common Byte Pair

To effectively use BPE, we need to identify the most common pair of bytes in our data. Here is a snippet of how we might implement a function to find the most common byte pair in Python:

# Function to get statistics of byte pair frequencies

def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Iterate over consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

# Example usage of get_stats function

tokens = [239, 188, 181, ...] # This is a shortened example list of tokens

stats = get_stats(tokens)

print(stats)

This function, get_stats, calculates the frequency of each byte pair in the token list. We can then sort these to find the most common pair and proceed with the BPE algorithm.

Implementing BPE Tokenization

Now let’s implement the BPE tokenization process. We start by defining the get_stats function to collect statistics on byte pairs. Then, we can use these statistics to iteratively merge the most frequent pairs into new tokens. Here’s a simplified version of how you might write that function:

# Function to find the most common byte pair in a list of tokens

def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Pythonic way to iterate consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

# Retrieve the byte pair frequencies for our tokens

stats = get_stats(tokens)

print(stats)

By iterating over the byte pairs and updating the frequency in counts, we can identify which pairs to merge. This is just the first step in the BPE algorithm, which would continue until a specified vocabulary size is reached or no further significant compression can be achieved.

The process of BPE not only aids in compressing the dataset but also provides a systematic approach for encoding and decoding sequences that the language model can learn from. It’s this iterative refinement that enables language models to efficiently process and understand the vast array of human languages and symbols encoded in text.

Improving Byte Pair Encoding Understanding

To further grasp the inner workings of the Byte Pair Encoding (BPE) algorithm, let’s look at how we might implement a function to track the frequency of byte pairs in a sequence of tokens. This function is key in identifying which byte pairs are the most common and therefore should be merged together in the BPE process.

Here’s the Python code that achieves this:

def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Pythonic way to iterate consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

tokens = [239, 188, 181, ...] # This is a shortened example list of tokens

stats = get_stats(tokens)

print(stats)

In this code, get_stats goes through a list of tokens, ids, and counts the occurrences of each consecutive byte pair. The zip function is used to iterate over pairs of consecutive elements in the list.

To display the counts in a more readable format, we can sort the dictionary and print the results as follows:

print(sorted(((v, k) for k, v in stats.items()), reverse=True))

This snippet sorts the byte pairs according to their frequency in descending order, allowing us to easily identify the most common pairs.

Identifying Frequent Byte Pairs

Let’s examine the actual output of the get_stats function for a token list. By sorting the dictionary of counts, we can see which byte pairs occur most frequently:

# Output from the sorted stats

[

(20, (101, 32)),

(15, (240, 159)),

(12, (226, 228)),

(12, (105, 110)),

# ... truncated for brevity

]

In this example, the pair (101, 32) occurred 20 times, making it the most frequent byte pair in the tokens list. To understand what characters these numbers represent, we can use Python’s chr function, which returns a string representing a character whose Unicode code point is the integer passed.

print(chr(101), chr(32))

# Output: e

Here, chr(101) corresponds to the letter ‘e’ and chr(32) corresponds to a space. This suggests that the combination of ‘e’ followed by a space is particularly common in the text being analyzed, which is a pattern we might expect in English text.

Byte Pairs in Context

Understanding the context in which byte pairs appear is crucial. For instance, the frequent occurrence of the pair (101, 32) tells us that many words in the analyzed text end with the letter ‘e’ before a space, indicating the end of a word. This is a common pattern in English, where many words have an ‘e’ at the end, such as ‘the’, ‘be’, ‘are’, and so on.

The BPE algorithm takes advantage of these patterns to efficiently encode text by merging frequently occurring byte pairs into single tokens. This not only helps in compressing the data but also ensures that the language model can learn from these common patterns.

Byte Pair Encoding in Action

In practice, once we have identified the most common byte pairs, we would replace instances of these pairs in our data with a new token. This process is repeated iteratively, each time identifying and replacing the most common byte pair, until the vocabulary reaches a desired size or no significant compression can be achieved.

This method of tokenization is not just a theoretical exercise; it is used in real-world applications to prepare training datasets for language models. It is an essential step in creating an encoding and decoding mechanism that models can learn and leverage to understand and generate text accurately.

Code Example: Tokenization Process

Here is a comprehensive example of a tokenization process, including the use of the get_stats function and character conversion with chr:

# Define the get_stats function for byte pair frequencies

def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Iterate consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

# Obtain token statistics

tokens = [239, 188, 181, 239, 189, 142, ...] # Example token list

stats = get_stats(tokens)

# Print sorted byte pair frequencies

print(sorted(((v, k) for k, v in stats.items()), reverse=True))

# Convert byte pairs to their corresponding characters

print(chr(101), chr(32)) # Output: ('e', ' ')

By executing this code, we would see a list of the most common byte pairs alongside their frequencies, helping us understand which pairs to merge during BPE tokenization. The character conversion using chr gives us insight into what the byte pairs actually represent in human-readable text.

Implementing Token Merging

and this is the most common pair. So now that we’ve identified the most common pair we would like to

# Print sorted byte pair frequencies

stats = get_stats(tokens)

print(sorted(((v, k) for k, v in stats.items()), reverse=True))

iterate over the sequence. We’re going to mint a new token with the ID of 256 right because

def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Pythonic way to iterate consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

# Print sorted byte pair frequencies

stats = get_stats(tokens)

print(sorted(((v, k) for k, v in stats.items()), reverse=True))

these tokens currently go from 0 to 255. So when we create a new token it will have an ID of

In [99]: def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Pythonic way to iterate consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

256 and we’re going to iterate over this entire list and every time we see 101 comma 32

Tokenization Last Checkpoint: a few seconds ago (autosaved)

File Edit View Insert Cell Kernel Widgets Help

Python 3 Trusted Logout

In [99]: def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Pythonic way to iterate consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

stats = get_stats(tokens)

#print(stats)

print(sorted(((v, k) for k, v in stats.items()), reverse=True))

we’re going to swap that out for 256. So let’s implement that now and feel free to do that yourself

Tokenization Last Checkpoint: a few seconds ago (autosaved)

at all. So first I commented this just so we don’t pollute the notebook too much.

Tokenization Last Checkpoint: a few seconds ago (autosaved)

length: 616

def get_stats(ids):

counts = {}

for pair in zip(ids, ids[1:]): # Pythonic way to iterate consecutive elements

counts[pair] = counts.get(pair, 0) + 1

return counts

stats = get_stats(tokens)

# print(stats)

# print(sorted(((v, k) for k, v in stats.items()), reverse=True))

top_pair = max(stats, key=stats.get)

top_pair

# (101, 32)

This is a nice way of in Python obtaining the highest ranking pair. We’re basically calling the max on this dictionary stats and this will return the maximum key and then the question is how does it rank keys so you can provide it with a function that ranks keys and that function is just stats that get. Stat that gets would basically return the value. And so we’re ranking by the value and getting the maximum key. So it’s 101 comma 32 as we saw. Now to actually merge 101 32

In [111]: top_pair = max(stats, key=stats.get)

Out[111]: top_pair

(101, 32)

In [114]: def merge(ids, pair, idx):

# in the list of ints (ids), replace all consecutive occurrences of pair with the new token idx

newids = []

i = 0

while i < len(ids):

# if we are not at the very last position AND the pair matches, replace it

if i < len(ids) - 1 and ids[i] == pair[0] and ids[i+1] == pair[1]:

newids.append(idx)

i += 2

else:

newids.append(ids[i])

i += 1

return newids

print(merge([5, 6, 6, 7, 9, 1], (6, 7), 99))

#tokens2 = merge(tokens, top_pair, 256)

#print(tokens2)

#print(

this is the function that I wrote but again there are many different versions of it. So we’re going to take a list of IDs and the pair that we want to replace and that pair will be replaced with the new index IDX. So iterating through IDs if we find the pair swap it out for IDX. So we create this new list and then we start at zero and then we go through this entirely sequentially from left to right. And here we are checking for equality at the current position with the pair. So here we are checking that the pair matches. Now here’s a bit of a tricky condition that you have to append if you’re trying to be careful and that is that you don’t want

Tokenization Last Checkpoint: 2 minutes ago (autosaved)

In [111]: top_pair = max(stats, key=stats.get)

Out[111]: (101, 32)

In [114]: def merge(ids, pair, idx):

# in the list of ints (ids), replace all consecutive occurrences of pair with the new token idx

newids = []

i = 0

while i < len(ids):

# if we are not at the very last position AND the pair matches, replace it

if i < len(ids) - 1 and ids[i] == pair[0] and ids[i+1] == pair[1]:

newids.append(idx)

i += 2

else:

newids.append(ids[i])

i += 1

return newids

print(merge([5, 6, 6, 7, 9, 1], (6, 7), 99))

#[5, 6, 99, 9, 1]

#tokens2 = merge(tokens, top_pair, 256)

#print(tokens2)

#print(

this here to be out of bounds at the very last position when you’re on the right-most element of this list otherwise this would give you an out of bounds error. So we have to make sure that we’re not at the very very last element. So this would be false for that. So if we find a match we append to this new list that replacement index and we increment the position by two so we skip over that entire pair. But otherwise, if we haven’t found a matching pair we just sort of copy over the element of the position and increment by one in the return list. So here’s a very small toy example if we have a list 566791 and we want to replace the occurrences of 67 with 99 then

def merge(ids, pair, idx):

# in the list of ints (ids), replace all consecutive occurrences of pair with the new token idx

newids = []

i = 0

while i < len(ids):

# if we are not at the very last position AND the pair matches, replace it

if i < len(ids) - 1 and ids[i] == pair[0] and ids[i+1] == pair[1]:

newids.append(idx)

i += 2

else:

newids.append(ids[i])

i += 1

return newids

print(merge([5, 6, 5, 7, 9, 1], (6, 7), 99))

#[5, 6, 99, 9, 1]

calling this on that will give us what we’re asking for. So here the 677 is replaced with 99. So now I’m going to uncomment this for our actual use case where we want to take our tokens we want to

Tokenization Last Checkpoint: 3 minutes ago (unsaved changes)

In [114]: def merge(ids, pair, idx):

# in the list of ints (ids), replace all consecutive occurrences of pair with the new token idx

newids = []

i = 0

while i < len(ids):

#if we are not at the very last position AND the pair matches, replace it

if i < len(ids) - 1 and ids[i] == pair[0] and ids[i+1] == pair[1]:

newids.append(idx)

i += 2

else:

newids.append(ids[i])

i += 1

return newids

print(merge([5, 6, 6, 7, 9, 1], (6, 7), 99))

tokens2 = merge(tokens, top_pair, 256)

print(tokens2)

print(

take the top pair here and replace it with 256 to get tokens2 if we run this we get the following.

Tokenization Last Checkpoint: 3 minutes ago (unsaved changes)

In [114]: def merge(ids, pair, idx):

# in the list of ints (ids), replace all consecutive occurrences of pair with the new token idx

newids = []

i = 0

while i < len(ids):

# if we are not at the very last position AND the pair matches, replace it

if i < len(ids) - 1 and ids[i] == pair[0] and ids[i+1] == pair[1]:

newids.append(idx)

i += 2

else:

newids.append(ids[i])

i += 1

return newids

print(merge([5, 6, 6, 7, 9, 1], (6, 7), 99))

tokens2 = merge(tokens, top_pair, 256)

print(tokens2)

print(

Tokenization Last Checkpoint: 3 minutes ago (unsaved changes)

[5, 6, 99, 9, 1]

[239, 188, 181, 239, 189, 142, 239, 189, 137, 239, 189, 131, 239, 189, 143, 239, 189, 132, 239, 189, 133, 32, 240, 159, 133, 164, 240, 159, 133, 157, 240, 159, 133, 152, 240, 159, 133, 146, 240, 159, 133, 158, 240, 159, 133, 147, 240, 159, 133, 148, 226, 128, 189, 32, 240, 159, 133, 135, 188, 226, 128, 140, 240, 159, 135, 179, 226, 128, 140, 240, 159, 135, 174, 240, 159, 135, 140, 240, 159, 135, 150, 240, 159, 135, 180, 240, 159, 135, 140, 240, 159, 135, 179, 226, 128, 140, 240, 245, 169, 226, 128, 140, 240, 159, 135, 170, 33, 32, 240, 159, 152, 132, 32, 84, 104, 256, 118, 101, 114, 121, 32, 115, 0, 97, 109, 256, 115, 116, 256, 114, 105, 105, 105, 105, 115, 256, 32, 240, 159, 107, 97, 114, 109, 256, 105, 110, 114, 256, 32, 105, 110, 116, 111, 32, 116, 104, 256, 105, 104, 101, 97, 114, 116, 115, 32, 111, 102, 32, 116, 104, 101, 32, 97, 110, 100, 32, 105, 109, 101, 114, 32, 105, 116, 32, 105, 32, 116, 104, 101, 32, 105, 32, 105, 256, 32, 101, 114, 256, 97, 105, 105, 105, 32, 105, 32, 105, 119, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 85, 110, 105, 99, 111, 100, 101, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 7, 114, 256, 40, 119, 105, 97, 97, 105, 116, 105, 110, 105, 107, 32, 105, 105, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 8, 148, 108, 105, 107, 256, 117, 105, 105, 105, 105, 105, 32, 105, 32, 105, 97, 114, 114, 105, 105, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 32, 105, 108, 108, 32, 105, 116, 105, 105, 105, 105, 105, 105, 105, 105, 105, 44, 32, 105, 105, 105, 63, 41, 41, 44, 32, 105, 32, 105, length: 596

Through the use of the merge function, we can see that a new token, 256, has been introduced to replace the frequent pair (101, 32). The output of the merge function shows the new list of tokens, tokens2, where this replacement has occurred. This process of token merging and vocabulary expansion is fundamental to the BPE algorithm and paves the way for more efficient tokenization in language models.

The critical aspect of this process is to iteratively merge the most frequent pairs, thereby condensing the text into fewer tokens that contain more information per token. This iterative process is the cornerstone of modern tokenization techniques used in large language models, enabling them to manage vast amounts of text while maintaining a workable vocabulary size.

Continued Token Merging and Vocabulary Expansion

So recall that previously we had a length of 616 in this list, and now we have a length of 596, right so this decrease by 20 which makes sense because there are 20 occurrences. Moreover, we can try to find the 256 here, and we see plenty of occurrences of it, and moreover, just double check there should be no occurrence of (101, 32) so this is the original array plenty of them and in the second array there are:

print('length:', len(tokens2))

print(tokens2)

no occurrences of (101, 32). So we’ve successfully merged this single pair and now we just iterate this so we are going to go over the sequence again, find the most common pair, and replace it. So let me write a while loop that uses these functions to do this sort of iteratively, and how many times do we do this? Well, how many merges do we want to do?

Let’s look at some code:

while True:

stats = get_stats(tokens2)

if not stats:

break

top_pair = max(stats, key=stats.get)

if stats[top_pair] < THRESHOLD:

break

tokens2 = merge(tokens2, top_pair, new_token_idx)

new_token_idx += 1

In this loop, THRESHOLD would be a hyperparameter that you choose based on when you want to stop merging tokens based on their frequency. This step is crucial since it directly influences the size of the final vocabulary. Too large of a vocabulary might not offer the compact representation you desire, whereas too small might not capture enough nuances in the text.

Reflecting on the Tokenization Method

Tokenization is not just a mere preliminary step in text analysis; it’s a determining factor in the performance of LLMs. Different tokenization methods can produce vastly different results, and the choice of method should be aligned with the intended downstream tasks. As you can see from the iterative process we’ve been through, the methodology behind tokenization is anything but trivial. It requires careful consideration and understanding of the language and the model’s needs.

To illustrate further, consider the following points extracted from our exploration:

- The initial list length was 616, and after one round of merging, this was reduced to 596.

- The new token

256replaced the pair(101, 32)throughout the token list, demonstrating successful merging. - The iterative process is controlled by a while loop, which continues to merge the most common pairs until a set threshold is reached.

- The

THRESHOLDhyperparameter is significant because it determines when the merging process stops, influencing the final vocabulary size.

As we dive deeper into the nuances of tokenization, it’s clear that this step is more than just a formality. It affects every aspect of the LLM, from the way it understands text to its performance on various tasks. The transformation of raw text into tokens, and the subsequent processing of these tokens, is a cornerstone of modern natural language processing.

Conclusion

In summary, tokenization is a critical step in the functioning of LLMs. It impacts the model’s ability to efficiently process text and understand its nuances. The methods used for tokenization, such as the BPE algorithm and its iterations, are complex but necessary for the creation of powerful language models. As we have seen, the choices made during tokenization, such as when to stop merging tokens, can have significant effects on the final outcomes. It’s a fascinating and intricate process that underpins much of the success in the field of natural language processing.

Deciding on Vocabulary Size

As we venture deeper into the intricacies of tokenization, we come across a critical hyperparameter: the final vocabulary size. This parameter is pivotal as it determines the balance between the granularity of the tokens and the length of the sequences we will eventually process. It’s a delicate balance that must be struck, as a larger vocabulary size leads to shorter sequences, but may also lead to a more sparse representation that could hinder the model’s performance.

When deciding on the final vocabulary size, one must take into account the nature of the language being tokenized and the computational resources at hand. For instance, GPT-4 uses around 100,000 tokens, which has been empirically determined to yield robust performance across a variety of tasks.

Let’s examine a practical example to illustrate the concept of vocabulary size and the process of merging tokens:

# Desired final vocabulary size

vocab_size = 276

# Number of merges to reach the desired vocabulary size

num_merges = vocab_size - 256

# Copy the original list to preserve the original tokens

ids = list(tokens)

# Dictionary to record merges

merges = {} # (int, int) -> int

# Perform the merges

for i in range(num_merges):

stats = get_stats(ids)

pair = max(stats, key=stats.get)

idx = 256 + i

print(f"Merging {pair} into new token {idx}")

ids = merge(ids, pair, idx)

merges[pair] = idx

In this code, we set a final vocabulary size of 276, which means we need to perform 20 merges starting from the 256 tokens representing raw bytes. We iterate through the most common byte pairs, create a new token for each merge, and replace all occurrences of that pair with the new token. The merges dictionary keeps track of these changes, which will be useful for encoding and decoding sequences later on.

Iterative Token Merging

The merging process is not a one-time event but an iterative procedure that builds upon itself. As we merge tokens, the newly created tokens become candidates for subsequent merges. This leads to a hierarchical structure of tokens, akin to a forest rather than a single tree, as each merge connects two “leaves” to form a new “branch.”

Here are some of the merges that occurred during our example process:

- Merging (101, 32) into new token 256

- Merging (105, 110) into new token 257

- Merging (115, 32) into new token 258

- …

- Merging (259, 256) into new token 275

After executing 20 merges, we can observe the evolution of our token list. It’s essential to note that individual tokens like 101 and 32 may still appear independently in the sequence; they only form the merged token when they appear consecutively.

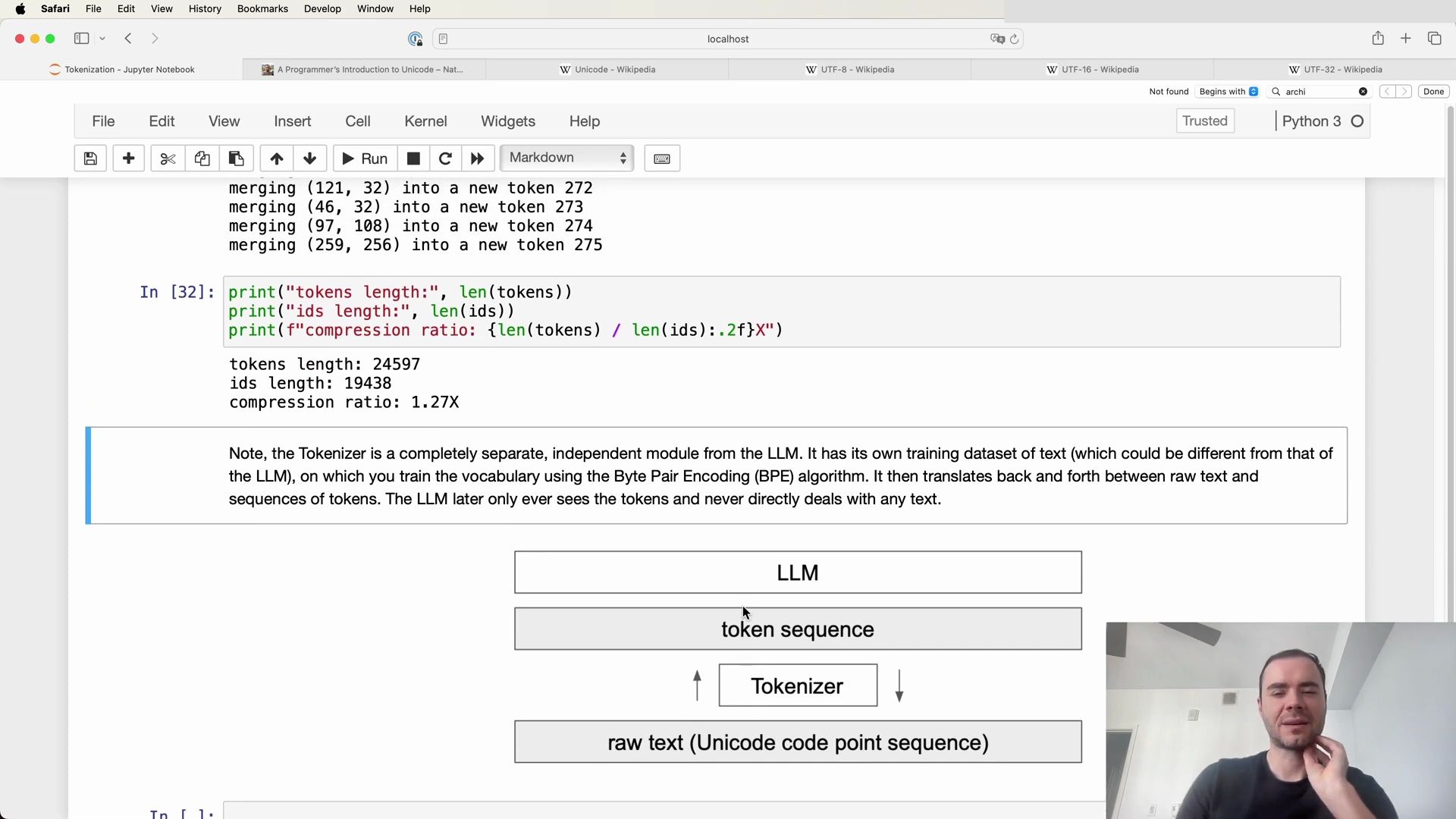

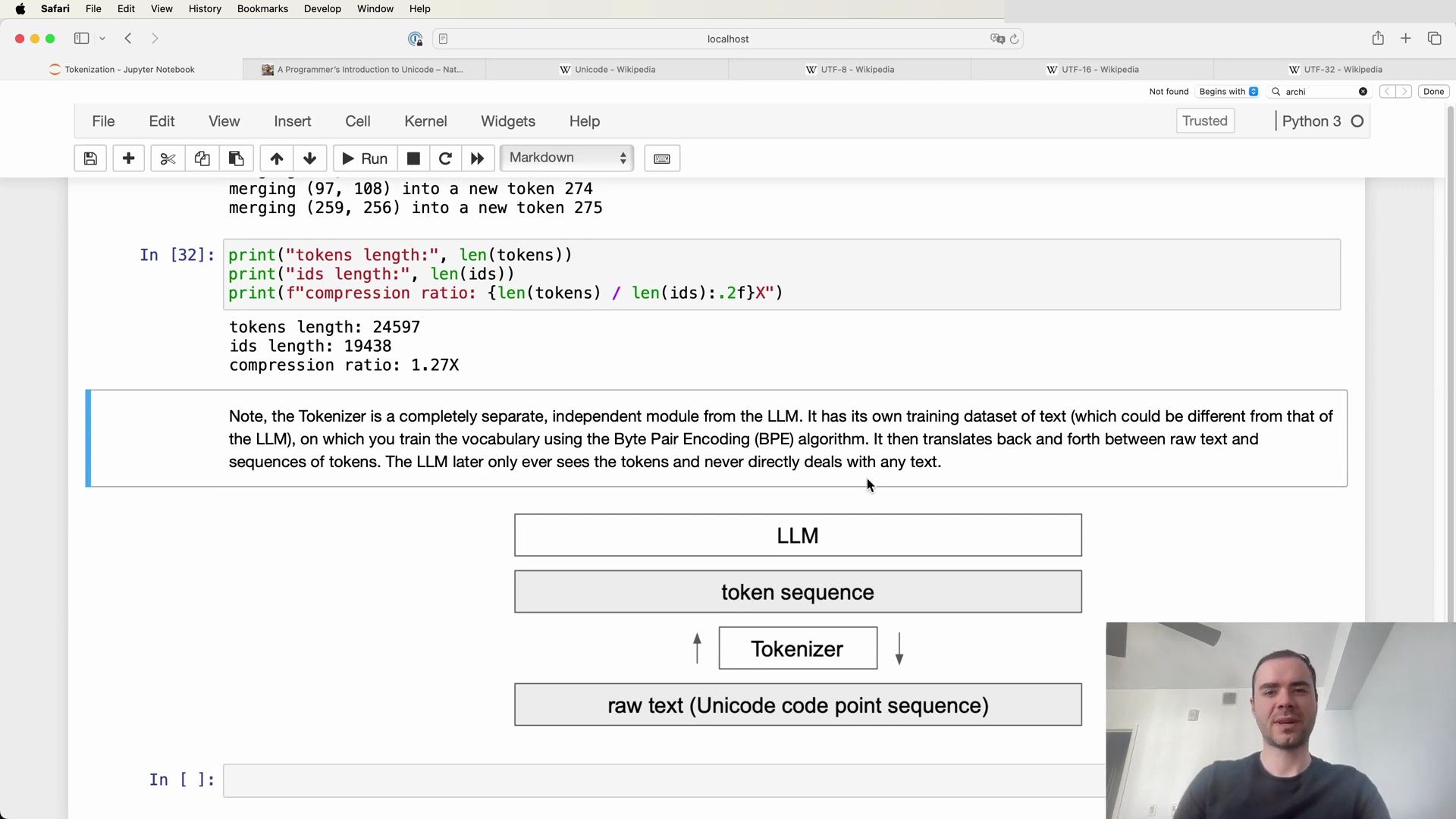

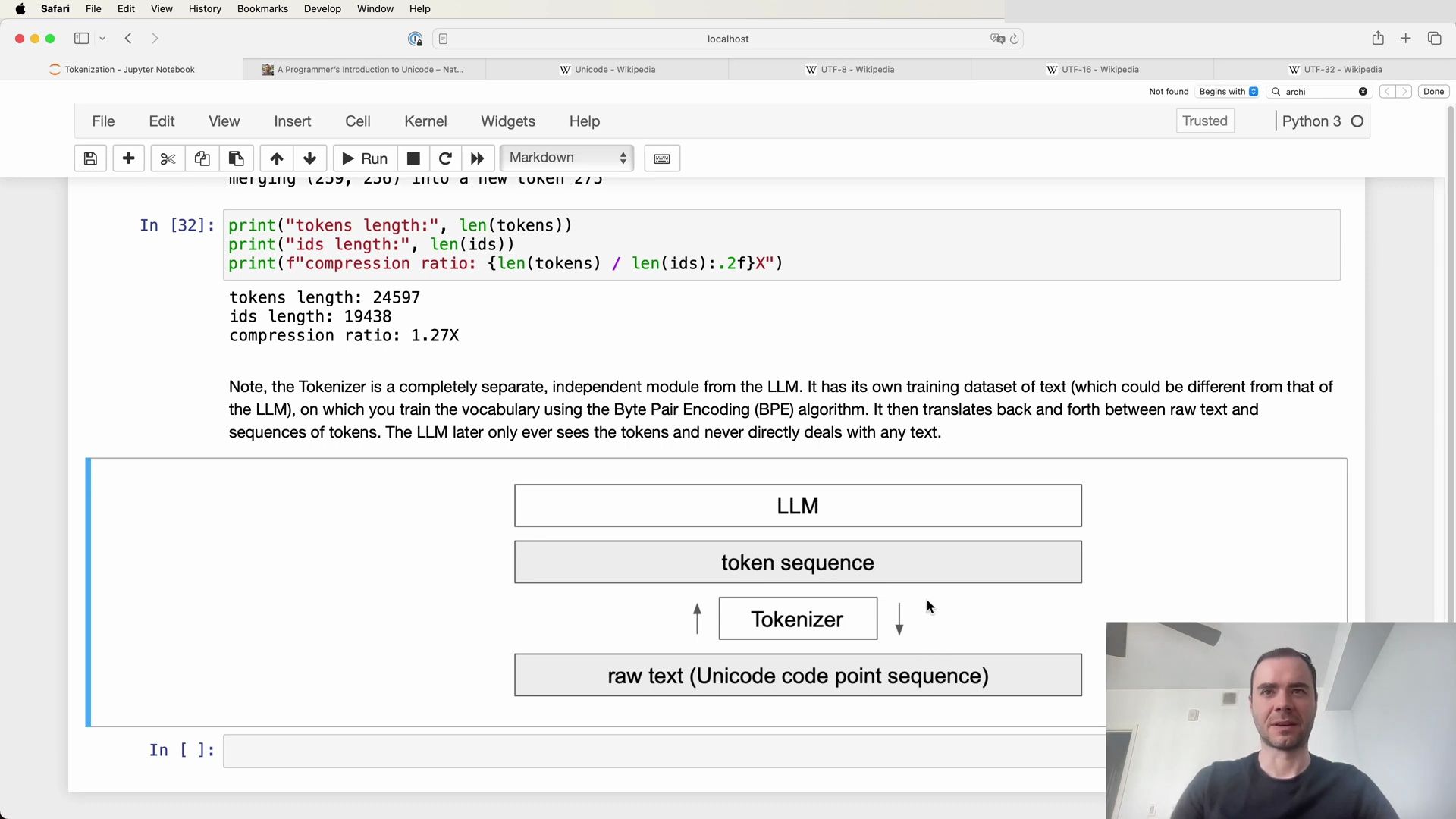

Analyzing Compression Ratio

One of the benefits of tokenization is the compression of the original text data. By examining the compression ratio, we can gauge the efficiency of our tokenization process. In our example, we started with 24,000 bytes and reduced it to 19,000 tokens after 20 merges, achieving a compression ratio of approximately 1.27.

# Starting bytes

starting_bytes = 24000

# Tokens after merges

final_tokens = 19000

# Calculate compression ratio

compression_ratio = starting_bytes / final_tokens

print(f"Compression Ratio: {compression_ratio:.2f}")

The resulting compression ratio indicates the degree of compactness we’ve achieved with our tokenization process. It’s a straightforward calculation, but it provides valuable insight into the efficiency of our approach.

Tokenizer as a Separate Entity

It is crucial to recognize that the tokenizer is an entirely separate component from the large language model (LLM) itself. The tokenizer has its dedicated training set, which may differ from the training set of the LLM. The tokenizer’s role is to preprocess text data, a task that is performed once before the LLM’s training begins.

The Byte Pair Encoding (BPE) algorithm is employed to train the tokenizer’s vocabulary, and once trained, the tokenizer can perform both encoding and decoding of text data. It acts as a translation layer between the raw text, which consists of a sequence of Unicode code points, and the token sequence.

The following diagrams help visualize the tokenizer’s function and its place within the LLM ecosystem:

Encoding and Decoding with the Tokenizer

With the tokenizer trained and the merges dictionary established, we can now focus on the encoding and decoding steps. Encoding involves converting raw text into a sequence of tokens, while decoding is the reverse process, transforming a sequence of tokens back into human-readable text.

These processes are foundational for interfacing with the LLM, as they allow us to convert between the model’s input/output format and the text data we naturally understand. The next phase of our exploration will delve into the practical implementation of these encoding and decoding operations, demonstrating how they bridge the gap between raw text and the LLM’s tokenized representation.

Understanding Encoding and Decoding

After discussing the intricacies of tokenization and its importance in the realm of language models, we now turn our attention to the practical aspects of encoding and decoding. Encoding is the process of converting raw text into a sequence of tokens, while decoding translates a sequence of tokens back into text. This translation is crucial as the large language model (LLM) operates exclusively on token sequences and does not directly interact with raw text.

To understand this process better, let’s visualize the flow of data:

- Raw text, which is a sequence of Unicode code points, is first processed by the tokenizer.

- The tokenizer converts the raw text into a sequence of tokens, which are then fed into the LLM.

- The LLM performs its computations and outputs a sequence of tokens.

- Finally, the tokenizer takes this token sequence and decodes it back into human-readable text.

Here is an example to illustrate the decoding process, which is the reverse of encoding:

# Define the vocabulary for the initial byte-level tokens

vocab = {idx: bytes([idx]) for idx in range(256)}

# Apply the merges learned by the BPE algorithm

for (p0, p1), idx in merges.items():

vocab[idx] = vocab[p0] + vocab[p1]

# The decoding function converts a sequence of token IDs back to text

def decode(ids):

# Given ids (a list of integers), return a Python string

tokens = b''.join(vocab[idx] for idx in ids)

text = tokens.decode('utf-8')

return text

This code snippet provides a fundamental decoding function. We start by defining a vocabulary mapping integers to byte objects for the initial token set. Then we apply the merges learned during the BPE training process to this vocabulary. The decode function takes a list of token IDs (ids) and returns the corresponding text string.

Decoding Pitfalls

While the implementation above seems straightforward, it can encounter issues when dealing with certain sequences of token IDs. Let’s delve into a potential problem:

Imagine we try to decode a token sequence that includes the token 128. Since 128 is outside the ASCII range, trying to decode it as a single byte using the UTF-8 standard will result in an error:

print(decode([128]))

# This will raise a UnicodeDecodeError

The UnicodeDecodeError occurs because the UTF-8 encoding expects the byte corresponding to the token ID to be part of a valid UTF-8 sequence. If it’s not, the decoding will fail.

Encoding with UTF-8

To better understand this error, we need to examine how UTF-8 encoding works. UTF-8 encodes code points into a sequence of one to four bytes, depending on the value of the code point. For example:

- The first 128 code points (ASCII) need only one byte.

- Code points from U+0080 to U+07FF require two bytes.

- Code points from U+0800 to U+FFFF (covering the Basic Multilingual Plane) need three bytes.

- Code points from U+10000 to U+10FFFF, which include supplementary characters and emoji, require four bytes.

This structure ensures that UTF-8 is backwards compatible with ASCII but also capable of representing every character in the Unicode standard.

Correcting the Decoding Function

To address the decoding issue mentioned earlier, we need to ensure that each token ID corresponds to a valid UTF-8 sequence before attempting to decode it. Here’s how we can modify our decode function to handle this correctly:

def decode(ids):

# Given ids (list of integers), return a Python string

tokens = []

for idx in ids:

if idx < 128:

# Directly convert ASCII tokens to their character representation

tokens.append(chr(idx).encode('utf-8'))

else:

# For non-ASCII tokens, fetch the corresponding byte sequence from the vocab

tokens.append(vocab[idx])

text = b''.join(tokens).decode('utf-8')

return text